This is one of our first posts sharing practices we have adopted in our own development within CLM as part of Continuous Delivery. The presented techniques are used to control software evolution and to improve the quality of our code base. Since we use a variety of IBM and open source tools in our development, we focus here on the practices and techniques rather than on a particular tool.

Rational Team Concert has a large and complex code base that is changed by numerous developers every day. While we as a team control the quality of every code snippet that is delivered, one of our biggest concerns is that any change could break other parts of the program. Every developer can only keep a certain amount of information in mind when changing the source code. In the Tracking & Planning team, we have adopted a set of practices, our safety net, that detects newly introduced breakages as early as possible.

Test in isolation

One essential part of the safety net is unit testing. Unit tests run fast and can therefore be run frequently to detect accidental breakage in our products. Moreover, unit testing helps us to establish a feedback loop for interacting with a piece of functionality and to shape its design while we are implementing it.

In unit tests we attempt to exercise a program unit in isolation. We intentionally ignore all dependencies on other system parts, e.g., a running server, a database connection or UI elements. In the test, we have to create collaborating objects for direct or indirect input before we can execute the unit under test and validate its outcome. In more complex programs, it is often difficult to create all collaborating objects, because they in turn depend on other objects that are needed to provide input to method calls or to access object state.
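To make the two kinds of input concrete, here is a minimal sketch in plain Java. The names `DisplayNameSource` and `AttributeLabeler` are hypothetical and not part of Rational Team Concert. The attribute id passed to the method is direct input. The display name that the unit fetches from its collaborator is indirect input, which the test supplies through a hand-written stand-in.

```java
// Hypothetical collaborator: in production this might ask a server or database.
interface DisplayNameSource {
    String displayNameFor(String attributeId);
}

// Unit under test: it knows its collaborator only through the interface.
class AttributeLabeler {
    private final DisplayNameSource names;

    AttributeLabeler(DisplayNameSource names) {
        this.names = names;
    }

    // 'attributeId' is direct input; the looked-up display name is indirect input.
    String label(String attributeId) {
        return names.displayNameFor(attributeId) + " (" + attributeId + ")";
    }
}

class AttributeLabelerCheck {
    // Plain-Java check so the sketch runs without any test framework.
    static String labelWithCannedName() {
        DisplayNameSource canned = id -> "Time Spent"; // hand-written stand-in, no server
        return new AttributeLabeler(canned).label("timeSpent");
    }

    public static void main(String[] args) {
        String actual = labelWithCannedName();
        if (!"Time Spent (timeSpent)".equals(actual)) {
            throw new AssertionError("unexpected label: " + actual);
        }
    }
}
```

A one-method interface is easy to replace by hand. The effort grows quickly once a collaborator has many methods or needs collaborators of its own.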

Mock with care

A great help when creating and maintaining collaborating objects is mocking. A mock object can be seen as a dummy object that has no state and no behavior. It can be created without establishing any further dependencies, and expected return values from method calls can be provided with stubs. A stub defines a canned value that is returned from a method call instead of computing it from collaborators. Using stubs we can create isolated behavior in unit tests in a simple way and reduce the test creation effort significantly.
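As a rough illustration in Mockito, the sketch below creates a mock for a hypothetical `AttributeStore` interface (an assumption for this example, not an RTC type) and stubs a single call. Every call that is not stubbed falls back to a default value, which is what "no state and no behavior" means in practice.

```java
import static org.mockito.Mockito.mock;
import static org.mockito.Mockito.when;

import java.util.List;

// Hypothetical collaborator that would normally need a server connection.
interface AttributeStore {
    String displayName(String attributeId);
    int attributeCount();
    List<String> attributeIds();
}

class MockAndStubSketch {
    static String stubbedAndDefaultValues() {
        // The mock needs no server, database, or further collaborators.
        AttributeStore store = mock(AttributeStore.class);

        // Stub: a canned value for one specific call.
        when(store.displayName("timeSpent")).thenReturn("Time Spent");

        // Unstubbed calls fall back to Mockito's defaults (null, 0, empty list).
        return store.displayName("timeSpent")
                + "|" + store.displayName("unknown")
                + "|" + store.attributeCount()
                + "|" + store.attributeIds().isEmpty();
    }
}
```

With Mockito's default answers, `stubbedAndDefaultValues()` yields `Time Spent|null|0|true`.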

We’re using Mockito as our mocking framework. It has proven to be the mocking library with the least distractions. Besides its very good DSL (domain-specific language), it maintains the standard test structure (assemble, act, assert) and allows for under-specification of behavior in mock objects. Moreover, with Mockito we can stub out some of the genuine behavior of a real object and also verify behavior in cases where the actual effects are difficult to access.
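The last two points can be sketched as follows. `Report` and `Notifier` are hypothetical types made up for this example. The spy keeps the real `publish()` logic but replaces the one method that would need a server, and `verify` checks an interaction whose effect is otherwise invisible to the test.

```java
import static org.mockito.Mockito.doReturn;
import static org.mockito.Mockito.mock;
import static org.mockito.Mockito.spy;
import static org.mockito.Mockito.verify;

// Hypothetical collaborator whose effect (a sent message) the test cannot observe.
interface Notifier {
    void send(String message);
}

// Hypothetical real object: one method needs a server, the rest is plain logic.
class Report {
    private final Notifier notifier;

    Report(Notifier notifier) {
        this.notifier = notifier;
    }

    String loadTitle() {
        throw new IllegalStateException("needs a running server");
    }

    String publish() {
        String text = "Report: " + loadTitle();
        notifier.send(text);
        return text;
    }
}

class SpyAndVerifySketch {
    static String publishWithStubbedTitle() {
        // Assemble: a mock collaborator and a spy that wraps the real object.
        Notifier notifier = mock(Notifier.class);
        Report report = spy(new Report(notifier));
        // doReturn(..).when(..) avoids calling the real loadTitle() while stubbing.
        doReturn("Sprint 1").when(report).loadTitle();

        // Act: the genuine publish() logic runs against the stubbed title.
        String text = report.publish();

        // Assert: verify the interaction, since the message itself is not accessible.
        verify(notifier).send("Report: Sprint 1");
        return text;
    }
}
```

Spies are best kept for cases like this one, where a single method blocks isolation. If most of an object has to be stubbed, a plain mock is usually the simpler choice.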

For example, consider a converter for attributes that converts every attribute into a data transfer object (DTO). If we want to test that conversion, we would implement several tests for various attributes, each of which creates an attribute, calls the converter, and validates the returned DTO. Each test would have to contact the server to fetch the attribute configuration before any attribute can be converted properly. Using Mockito, we can create the mocks with mock(Class) and stub the configuration into the services as indirect input to the test object with when(Object).thenReturn(Object).

    @Test
    public void convertAttributeWithTimeSpentConvertsAllProperties() throws Exception {
        // Assemble: the attribute to convert (direct input)
        Attribute expected= (Attribute) IAttribute.ITEM_TYPE.createItem();
        expected.setAttributeType(AttributeTypes.DURATION);
        expected.setIdentifier(TIME_SPENT);
        expected.setDisplayName("Time Spent");

        // Assemble: stub the configuration into the mocked services (indirect input)
        IWorkItemConfiguration config= new ProcessConfigurationManager(fAuditableServer);
        IAuditableCommonProcess auditProcess= mock(IAuditableCommonProcess.class);
        when(fAuditableServer.getProcess(any(IProcessAreaHandle.class), any(IProgressMonitor.class))).thenReturn(auditProcess);
        when(fWorkItemServer.getWorkItemConfiguration()).thenReturn(config);

        // Act: run the conversion under test
        AttributeDTO actual= fTestObj.convertAttribute(expected);

        // Assert: the DTO carries all properties of the attribute
        assertEqualsAttribute(expected, actual);
    }

In this unit test we create an attribute of type Duration, provide an expected configuration and stub it with two when() calls into the mocks. With Mockito’s built-in matchers any(Class) we prevent over-specification in the stub arguments. Instead of passing in the actual parameter value, Mockito allows for using Matchers that enable the stub only if its constraints are met.

This way we entirely eliminate dependencies on a running server. The test exercises the full attribute conversion with no need for real data in a database. Its isolated setup saves a lot of time and complexity. With just a few lines of mock code, this test went from a heavyweight integration test to a true unit test. Over-specification has proven to be a real threat to maintainable mock tests: it can easily lead to tests that check custom mock behavior instead of the genuine program behavior.
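The difference between an over-specified stub and one that uses matchers can be sketched like this. `ConfigService` and `Monitor` are hypothetical types for this example only. The first stub is bound to one particular monitor instance and silently stops matching when the caller passes a different one. The second stub only constrains what the test actually cares about.

```java
import static org.mockito.Mockito.any;
import static org.mockito.Mockito.eq;
import static org.mockito.Mockito.mock;
import static org.mockito.Mockito.when;

// Hypothetical types standing in for a service and a progress monitor.
interface Monitor {
}

interface ConfigService {
    String lookup(String key, Monitor monitor);
}

class MatcherSketch {
    static String overSpecifiedVersusRelaxed() {
        Monitor monitorKnownToTest = new Monitor() { };
        Monitor monitorUsedByCode = new Monitor() { };

        // Over-specified: the stub only applies to one particular monitor instance.
        ConfigService overSpecified = mock(ConfigService.class);
        when(overSpecified.lookup("type", monitorKnownToTest)).thenReturn("duration");

        // Relaxed: any monitor will do. Once one argument uses a matcher,
        // all arguments must use matchers, hence eq("type").
        ConfigService relaxed = mock(ConfigService.class);
        when(relaxed.lookup(eq("type"), any(Monitor.class))).thenReturn("duration");

        // The code under test passes its own monitor instance.
        return overSpecified.lookup("type", monitorUsedByCode)
                + "|" + relaxed.lookup("type", monitorUsedByCode);
    }
}
```

Here the over-specified stub does not match and falls back to the default `null`, while the relaxed stub returns `duration`, so the method yields `null|duration`.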

Measure in context

When writing new tests it is useful to understand which parts of the program are not exercised by any test. Test coverage tools can help to identify these parts and to assess whether you should write tests for them. A test coverage tool instruments the (byte) code, i.e., it adds extra instructions to capture what is executed, before it actually runs the tests for a program. Test coverage can be measured on different levels. While instruction coverage is more accurate than, e.g., line coverage, it also requires a more complex instrumentation.
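The idea of instrumentation, and why finer-grained coverage is more informative, can be illustrated with a hand-instrumented sketch. Real coverage tools insert their probes into the byte code automatically. The `probes` array and the class below are hypothetical and written at source level only to show the principle.

```java
class CoverageSketch {
    // Uninstrumented original, one line with two branches:
    //     return hours > 8 ? "overtime" : "regular";

    // One hand-written probe per branch; a tool would add these to the byte code.
    private final boolean[] probes = new boolean[2];

    String classify(int hours) {
        if (hours > 8) {
            probes[0] = true; // probe: "overtime" branch was executed
            return "overtime";
        }
        probes[1] = true;     // probe: "regular" branch was executed
        return "regular";
    }

    // Number of branches that at least one call has exercised so far.
    int coveredBranches() {
        int covered = 0;
        for (boolean hit : probes) {
            if (hit) {
                covered++;
            }
        }
        return covered;
    }
}
```

A single test that calls `classify(4)` executes the original one-line method, so a line-based measure would typically count that line as covered, yet only one of the two branches has run. A second test with a value above 8 is needed before `coveredBranches()` reports both.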

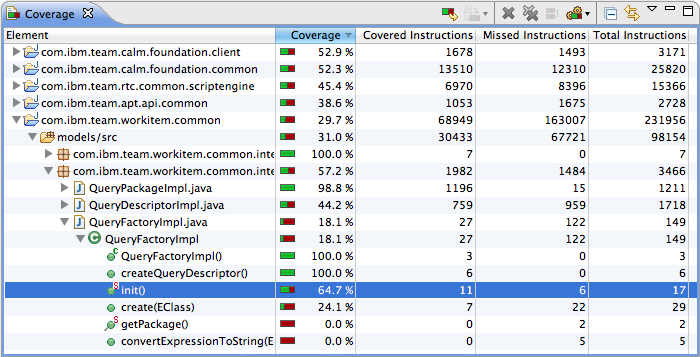

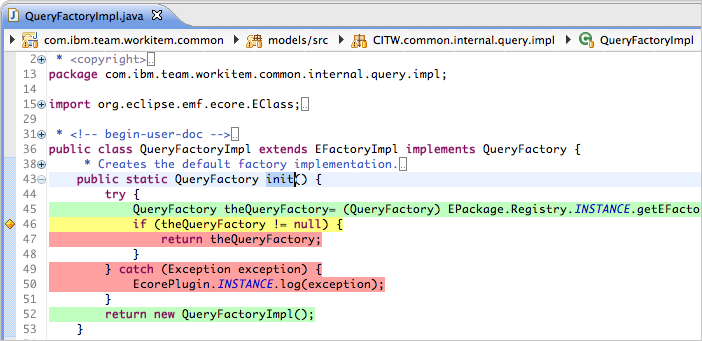

We’re using EclEmma as our coverage tool for Java. It is accurate, as it instruments every byte code instruction and calculates branch coverage for conditional statements (e.g., if-else, switch). EclEmma is also fast and runs from within the Eclipse workbench like any other JUnit launch. After you have run your tests, it shows a Coverage View that summarizes your test coverage, and it augments your source code with coverage information directly (see the screenshots above). EclEmma does not require specific changes to your project setup, but you can limit the analysis scope. Spotting untested but important functionality becomes an easy task when browsing your code base.

With test coverage information in every developer’s workspace and in the continuous builds, we are able to focus our test development efforts on areas with repeated regressions and on new, complex functionality. While fixing a defect, it becomes obvious to the developer which program parts have to be covered by new tests that accompany the fix.

Try it yourself

We assembled a guide on how to unit test complex software programs. It helps to differentiate unit testing from integration testing practices and provides pragmatic guidelines on when to write new unit tests. It introduces mocking techniques and explains best practices that help to create maintainable mock objects. The guide also shows how test coverage can be used within your workspace and in continuous builds to identify untested program parts, using EclEmma.

You can find the complete guide in the Jazz.net wiki.

Jan Wloka

Developer, Jazz Tracking & Planning

Great post and the wiki guide is very helpful. Jan, have you found that your team has adjusted its coding practices after using Mockito for unit testing? That is, have you found that certain patterns are preferable because they are more unit testable? Did that influence the way you organize or create APIs? E.g., I know some teams have web UI widgets totally tied to REST calls whereas others decided to have a cleaner separation so they can unit test them without a server connection.

Hi Chris, a couple of things have changed. For example, we learned quickly that explicit dependencies (in object constructors) are good, and very handy when it comes to instantiation in different places. We also tend to build smaller objects with limited responsibilities. They can be tested more easily, but also reused more often. Overall nothing really ground-breaking, except that it became harder to get away with bad coding, because even with Mockito it’s difficult to mock many implicit dependencies or to verify an indistinct outcome. Objects that can be set up in a simple unit test with all their dependencies tend to be simpler to use in production code as well. Clear assertions in the tests document object behavior with small examples and help us to reflect on the designed API. This awareness also helps with bigger design decisions across the team w.r.t. future maintainability. In all recent contributions, e.g. in the Web client, we made sure that the UI is completely separated from program logic and REST calls. This way, program logic and server communication can be tested directly in a JavaScript VM with no need for a complete browser stack.

Does that answer your questions?