In a recent blog post, Pete Steinfeld mentioned in passing that over the past year the time to build the full Collaborative Lifecycle Management (CLM) pipeline was cut in half, but he didn’t elaborate on how that reduction was accomplished. Since I was involved in analyzing and improving the pipeline, I thought I would share, at a high level, how we achieved most of that reduction in end-to-end build time: by applying standard computer pipelining practices to the build pipeline to increase parallelism.

I’ve spent much of the past eight years working on builds and build-related tooling, and I find the problems involved in supporting continuous delivery quite interesting. I also spend a fair amount of time on globalization and localization processes and was the creator of IBM’s internal pseudo translation tool. Currently I’m working on supporting continuous delivery for some exciting new applications. Most often I don’t create new tools, but rather come up with ways to integrate existing tools in new ways; this focus on integration has spread into my personal life, and this post was inspired while I was playing with the kids and thinking about work . . .

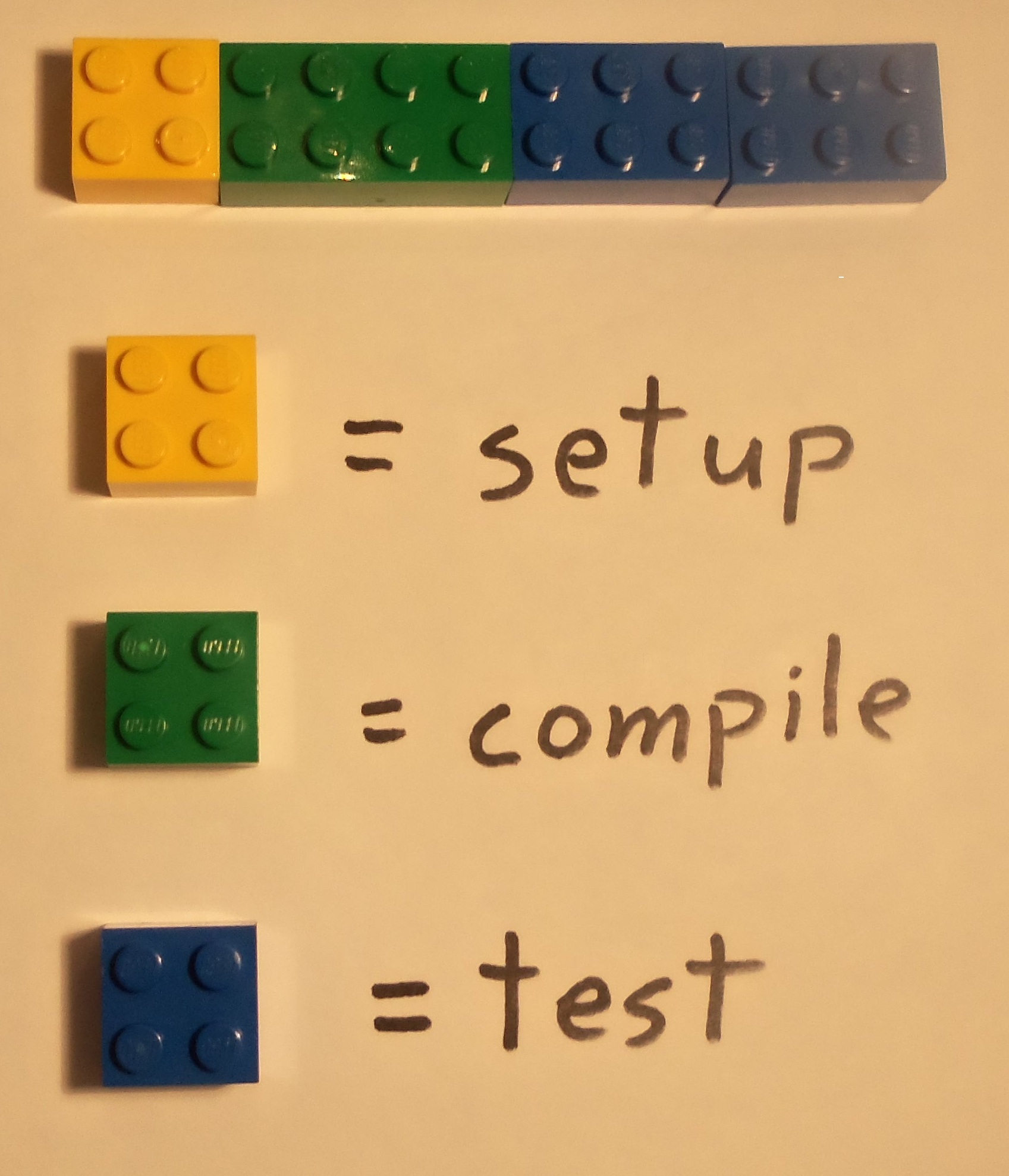

In a simple build scenario, there is an initial setup which includes fetching the source from source control. Next the source code is compiled and packaged up. Finally, the compiled code is tested and validated. These three stages are shown in the timeline below:

“In computing, a pipeline is a set of data processing elements connected in series, where the output of one element is the input of the next one.” (1) When there are multiple builds, the brute force pipeline for three builds would look like this:

Running the builds end-to-end is a simple approach and is easy to implement. However, when one build depends on another, it really only depends on the packaged artifacts and not the tests. So instead of waiting for the previous build to run tests, a dependent build can start as soon as all of the required artifacts have been published. This makes the pipeline look like this:

By overlapping the builds, the individual build time remains the same, but the end-to-end time is reduced dramatically. However, we can do better by slowing down the individual builds.
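To put rough numbers on the overlap described above, here is a minimal sketch that compares the two schedules. The stage durations (10, 20, and 30 minutes) and the function names are made up for illustration; they are not measurements from the CLM pipeline.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Build:
    setup: float     # fetch source, prepare workspace
    compile: float   # compile and package (artifacts are published at the end)
    test: float      # test and validate

def serial_end_to_end(builds):
    """Each build starts only after the previous build has finished its tests."""
    return sum(b.setup + b.compile + b.test for b in builds)

def overlapped_end_to_end(builds):
    """Each build starts as soon as the previous build has published its artifacts."""
    start = 0.0
    finish = 0.0
    for b in builds:
        artifacts_ready = start + b.setup + b.compile
        finish = max(finish, artifacts_ready + b.test)
        start = artifacts_ready  # the dependent build does not wait for tests
    return finish

# Hypothetical durations in minutes, identical for all three builds.
builds = [Build(setup=10, compile=20, test=30)] * 3
print(serial_end_to_end(builds))      # 180
print(overlapped_end_to_end(builds))  # 120.0
```

Each build still takes 60 minutes on its own; only the start times move.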

Back in school when I was learning about assembly programming, I was introduced to the NOP instruction. The NOP (which stands for no operation) essentially tells the CPU to do nothing. Initially I didn’t understand the purpose of telling the CPU to do nothing when it could be doing something, but soon saw how inserting a NOP in the right place could allow greater parallelism when pipelining. So even though each individual step took longer because of the NOP, the entire pipeline finished sooner.

Just as the NOP can speed up a pipeline of assembly language instructions, causing a build to wait until the right moment can speed up a build pipeline. Even when the compile depends on the artifacts from another build, the setup does not depend on those artifacts, so the setup can happen before the dependent artifacts are built. The time required to set up and compile can vary substantially from build to build, so after the setup completes we insert our NOP: we wait until the artifacts we need are ready before continuing. With this added wait, an individual build looks like this:
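As a rough sketch of the idea (not the actual CLM build scripts), the wait can be modeled with a `threading.Event` that the upstream build sets once its artifacts are published. The `run_build` function, the build names, and the log are hypothetical stand-ins for real build steps.

```python
import threading

def run_build(name, upstream_ready, artifacts_ready, log):
    log.append((name, "setup"))      # fetch source; needs no upstream artifacts
    if upstream_ready is not None:
        upstream_ready.wait()        # the "NOP": idle until upstream artifacts exist
    log.append((name, "compile"))    # compile and package
    artifacts_ready.set()            # publish artifacts; dependents may proceed
    log.append((name, "test"))       # tests do not hold up dependent builds

log = []
a_ready, b_ready = threading.Event(), threading.Event()
threads = [
    # Build B depends on A's artifacts but is started first on purpose.
    threading.Thread(target=run_build, args=("B", a_ready, b_ready, log)),
    threading.Thread(target=run_build, args=("A", None, a_ready, log)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(log)
```

Whatever order the threads are scheduled in, B's compile step is always logged after A's, while B's setup is free to run before A has produced anything.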

With this wait the elapsed time from start to finish for an individual build is a bit longer. The resulting pipeline looks like this:

In the first build, there is no dependency so no wait is required (or it can be viewed as a wait that takes no time). The second and third builds begin setup as soon as the setup of the previous build completes and then the builds wait until the compilation can start. This waiting enables greater overlap of the builds. Even though the individual builds can take longer, the time it takes to build the full pipeline is reduced.
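The schedule described above can be checked with a small timing model. This is only a sketch under assumed stage durations (10, 20, and 30 minutes for setup, compile, and test); the function name and the numbers are illustrative, not taken from the real pipeline.

```python
def pipeline_with_waits(builds):
    """builds: list of (setup, compile, test) durations in pipeline order.

    Setup starts when the previous build's setup completes; compile starts
    once setup is done AND the previous build's artifacts are published.
    Returns (end_to_end_time, per-build wait, per-build elapsed time).
    """
    setup_start = 0
    upstream_artifacts = 0   # the first build has no dependency
    finish = 0
    waits, elapsed = [], []
    for setup, compile_time, test in builds:
        setup_done = setup_start + setup
        compile_start = max(setup_done, upstream_artifacts)
        artifacts = compile_start + compile_time
        build_finish = artifacts + test
        waits.append(compile_start - setup_done)
        elapsed.append(build_finish - setup_start)
        finish = max(finish, build_finish)
        setup_start = setup_done          # next setup begins now
        upstream_artifacts = artifacts    # next compile must wait for these
    return finish, waits, elapsed

total, waits, elapsed = pipeline_with_waits([(10, 20, 30)] * 3)
print(total, waits, elapsed)  # 100 [0, 10, 20] [60, 70, 80]
```

With these made-up numbers the second and third builds take 70 and 80 minutes instead of 60, yet the whole pipeline finishes in 100 minutes, compared with 180 minutes if the same three builds ran back to back.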

One of the best ways to speed up a build is to do more in parallel. By applying best practices used for assembly language pipelines to our build pipeline, we were able to increase parallelism and reduce end-to-end build time substantially, which made things easier for the release engineering team. However, as Pete pointed out, it didn’t benefit developers as much as desired. In my next post I plan to describe an approach adopted by some builds that allows developers to run fast personal builds to verify they can deliver their changes.

Nathan Bak

Software Engineer, Master Inventor

IBM Rational

1 Pipeline (computing). In Wikipedia. Retrieved 15 August 2013 from http://en.wikipedia.org/wiki/Pipeline_(computing).

Hi Nathan – great blog!

One thing that comes to mind is the impact of the test phase on a dependent build. In your blog you have assumed that the test phase of a build has no impact on a dependent build. There could be tests, though, whose failure would render the build unfit for use (in which case the dependent builds need not be kicked off).

One approach to get around this might be to split the testing into, say, two phases: the tests that affect the dependent build run first, and only if they pass is the dependent build kicked off.

BTW, Nifty suggestive use of NOP:-)

Very nice blog post Nathan. Of course this is yet another example of where thinking about the dependencies between software artifacts pays handsome dividends. The challenge, as always, is getting developers to think about dependencies and how to build loosely coupled and highly cohesive components that can be built and tested independently. It is my experience that software developers, and managers alike, favor building monolithic software! It reminds me of the manager that told me once, “If it ships together, package it together!”, which is the antithesis of good software engineering!

Rajeev, we have unit tests that take seconds to run, and we do wait for those to make sure the build is “good enough”. The integration and system tests take minutes or hours to run, and those are the tests for which we do not want to wait.

Nathan – great blog post. Great points and I really like the use of the legos to bring it to life. I look forward to your next post.