This post continues the work I described in my previous blog post, Improving throughput in the deployment pipeline. There, I briefly described how the IBM Jazz Collaborative Lifecycle Management (CLM) project team is developing a deployment and test execution pipeline that allows us to identify key product issues early in our development cycle. In this post I would like to take you a little deeper into what goes on behind the scenes: how we record the progress and status of the various stages that make up this pipeline, and how we notify our teams about failures and issues when they occur.

Before I continue, I should point out that these practices are not best-of-breed; we are still evolving them through the lessons we learn from running this pipeline continuously. As part of our retrospectives, we have identified a number of key areas for improvement and are gradually making intelligent investments in each of them. They include improving our framework of automated tests (both in terms of content and in how the tests are packaged and structured for execution in the pipeline), increasing code coverage by tests, reducing cultural disparities and conflicting objectives, eliminating differences in development methodologies, reducing expensive overheads and revamping our build process. Like all mature development organizations, we constantly find ourselves peeling through multiple layers of good and bad habits that we have embraced through the years and assumed to be industry best practices.

SOURCE OF THE PIPELINE RIVER

The CLM Continuous Deployment pipeline starts when either a member of the CLM Release Engineering team manually triggers what is called an “orchestrated” CALM-All build, or the clock reaches 7:30 PM ET on a Monday, Tuesday, Wednesday, Thursday or Sunday.
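The trigger rule above can be sketched in a few lines. This is only an illustration, not the team's actual scheduler: the function and constant names are hypothetical, and the sketch assumes the timestamp it receives is already expressed in US Eastern time.

```python
from datetime import datetime

# datetime.weekday(): Monday is 0 and Sunday is 6.
# Friday (4) and Saturday (5) are deliberately left out.
SCHEDULED_WEEKDAYS = {0, 1, 2, 3, 6}
SCHEDULED_TIME = (19, 30)  # 7:30 PM


def should_start_calm_all(now_eastern: datetime, manually_requested: bool = False) -> bool:
    """Return True when an orchestrated CALM-All build should start.

    `now_eastern` is assumed to already be expressed in US Eastern time.
    """
    if manually_requested:
        # A release engineer can start the build at any time.
        return True
    on_scheduled_day = now_eastern.weekday() in SCHEDULED_WEEKDAYS
    at_scheduled_time = (now_eastern.hour, now_eastern.minute) == SCHEDULED_TIME
    return on_scheduled_day and at_scheduled_time
```

Under these assumptions, a Friday evening timestamp only starts a build when `manually_requested` is set.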

Why no CALM-All builds on Friday or Saturday?

Some of it is tradition. Before we had fully automated builds, someone from our release engineering team always had to be “on-call” when builds ran every day of the week, pretty much babysitting their outcome. This meant that failures from Friday night builds spilled into Saturday and demanded immediate fixes. The same was true for Saturday night builds and for every other day of the week. Since it was never in the Jazz team’s blood to have people work during “off-normal” hours, we decided to turn off Friday and Saturday night builds. The benefit was realized more recently when we started using Fridays for planning activities for the upcoming week and reserved Saturday/Sunday for extremely critical, server-side maintenance activities.

The CALM-All build is a layered build that compiles and packages the four core components making up the CLM application stack: Jazz Foundation, Change and Configuration Management, Requirements Management and Quality Management. At the end of the packaging phase, it creates an IBM Installation Manager repository which all the CLM Continuous Deployment builds (called “deployment” builds henceforth) use for installing and setting up CLM in an application server environment. The CALM-All build then kicks off six deployment builds that set up the newly built “application-under-test” on the “server-under-test” and prepare these systems as targets for automated tests.

ROLE OF A DEPLOYMENT BUILD

The deployment builds are Jazz JBE-based, command-line build definitions designed to leverage the virtualization capabilities of the IBM Workload Deployer (IWD), where our system topology patterns are cataloged. Each deployment build holds a reference to one and only one virtual system pattern that gets provisioned in the IWD cloud. It uses the pattern’s topology to identify the various physical infrastructure pieces (application servers, database servers, proxy servers etc.) that make up the overall CLM environment. At runtime, it installs the appropriate middleware applications on each of the infrastructure nodes. For example, in one of our deployment build definitions that provisions a two-server enterprise topology (calm.all.continuous.delivery.enterprise.two_tier), WebSphere Application Server v8.0.0.3 is installed on the host acting as the application server while DB2 v9.7 is installed on the host identified as the database server.

These virtual system patterns also contain various script packages that alter the host system’s configuration, based on the runtime values provided by the deployment build. The runtime values include references to a CLM Installation Manager repository, the WebSphere version to be used, LDAP server and user properties, whether JTS setup must be run, whether Money-That-Matters sample projects should be created, and so on. At provision time, the script packages read the configuration properties provided to them, put down the CLM bits at the appropriate file system locations, connect the applications to their corresponding database components, modify the runtime behavior of the hosted applications and eventually start the server process.
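To make the idea of runtime values concrete, here is a minimal sketch of how a deployment build might bundle them and render them for a script package. Every field name, the key=value format and the example URLs are assumptions made for illustration; the post does not show the real property names.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DeploymentRuntimeValues:
    # All field names are hypothetical; the post only describes the values.
    im_repository_url: str    # CLM Installation Manager repository
    websphere_version: str    # for example "8.0.0.3"
    ldap_server: str
    run_jts_setup: bool
    create_mtm_samples: bool  # Money-That-Matters sample projects


def to_properties(values: DeploymentRuntimeValues) -> str:
    """Render the values as key=value lines a script package could read."""
    lines = []
    for key, value in asdict(values).items():
        if isinstance(value, bool):
            # Spell booleans the way a properties file usually does.
            value = "true" if value else "false"
        lines.append(f"{key}={value}")
    return "\n".join(lines)


example = DeploymentRuntimeValues(
    im_repository_url="https://repo.example.com/clm",  # placeholder URL
    websphere_version="8.0.0.3",
    ldap_server="ldap.example.com",                    # placeholder host
    run_jts_setup=True,
    create_mtm_samples=False,
)
print(to_properties(example))
```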

ROLE OF A TEST EXECUTION BUILD

All deployment builds also hold a build property (called “clmTestExecutionBuildId”) that contains the ID of a “test execution” build. Each deployment build uses this property’s value to request the test execution build and passes along all the relevant deployment-side information to it using Jazz build properties. These typically include the fully-qualified-domain-name (FQDN) of the deployed application server/database server hosts (“systems-under-test”), the context root and ports of the “application-under-test”, the FQDN of any test agents that may have been provisioned and any runtime arguments the test framework needs that the deployment build is in a position to supply.
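The hand-off from a deployment build to its test execution build could look roughly like the sketch below. Only the property name clmTestExecutionBuildId comes from this post; the function, the shape of the request and the other property names are hypothetical, and no real Jazz build API is being called.

```python
from typing import Dict


def build_test_request(deployment_properties: Dict[str, str],
                       deployed_environment: Dict[str, str]) -> Dict[str, object]:
    """Describe the test execution build request for one deployment build."""
    test_build_id = deployment_properties.get("clmTestExecutionBuildId", "").strip()
    if not test_build_id:
        raise ValueError("clmTestExecutionBuildId is not set on the deployment build")
    return {
        "buildDefinitionId": test_build_id,
        # Copy the deployment-side facts so the test build gets its own properties.
        "properties": dict(deployed_environment),
    }


request = build_test_request(
    {"clmTestExecutionBuildId": "example.test.execution.build"},  # made-up ID
    {
        "appServerFqdn": "app.example.com",  # hypothetical property names
        "dbServerFqdn": "db.example.com",
        "contextRoot": "/jts",
        "port": "9443",
    },
)
```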

The test execution build then runs automated tests against the “application-under-test” as identified by its build property values. It reports test execution status by setting the passed/failed status bit on its build result and holds a fine-grained record of each test’s results, including generated logs, screenshots and error dialogs.

Why do we need two build definitions?

Some of you may wonder why we have two separate builds, one for deployment and another for test execution, rather than combining the two. The short and perhaps most logical answer is that we always wanted a loosely coupled deployment and test execution environment, where the latter did not depend on how the target environment was set up, whether through our continuous deployment effort or through manual steps initiated by a developer. We wanted to support a situation where developers could run the same set of pipeline tests against systems that were not provisioned by the pipeline. By combining the two, we would have prevented the development community from executing tests against existing test environments that they owned. This technique also fits our plans for the future, where we want to adopt IBM UrbanCode’s uDeploy product as our deployment technology of choice. By keeping the two loosely coupled, we will be able to swap out our existing IBM SmartCloud Continuous Delivery (SCD) based implementation while retaining the investment we have made in improving the various test execution frameworks and their assets.
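The benefit of this loose coupling can be sketched as follows: the test entry point only needs a description of the target, and does not care whether that description came from pipeline build properties or was typed in by a developer. All class, function and property names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class TargetEnvironment:
    app_server_fqdn: str
    port: int
    context_root: str

    @property
    def base_url(self) -> str:
        return f"https://{self.app_server_fqdn}:{self.port}/{self.context_root.strip('/')}"


def target_from_build_properties(props: Dict[str, str]) -> TargetEnvironment:
    """Build a target from properties handed over by a deployment build."""
    return TargetEnvironment(props["appServerFqdn"], int(props["port"]), props["contextRoot"])


def run_tests(target: TargetEnvironment,
              tests: Dict[str, Callable[[TargetEnvironment], bool]]) -> Dict[str, bool]:
    """Run every test against the target and collect pass/fail per test name."""
    return {name: bool(test(target)) for name, test in tests.items()}


# Same tests, two sources for the target description.
tests = {"uses_https": lambda t: t.base_url.startswith("https://")}
pipeline_target = target_from_build_properties(
    {"appServerFqdn": "app.example.com", "port": "9443", "contextRoot": "/jts"}
)
developer_target = TargetEnvironment("app.example.com", 9443, "jts")
```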

BIRTH OF A MULTI-STAGE PIPELINE

Earlier, when I spoke about the deployment builds and how they are kicked off by the CALM-All build, I deliberately left out an important piece of information: the mechanism we have adopted for recording the pipeline’s progress and status for each of its phases.

While developing the CLM pipeline, we reached a point where we were extremely successful in running one-off deployments followed by test execution against provisioned environments. We developed a number of such pairs for each of the test domains we wanted to support and were able to provide manual triggering mechanisms for each such pair. In DevOps lingo, these could essentially be called single-stage deployments, but not a pipeline by any standards!

The use case we had envisioned was to have a number of deployments running in parallel or based on some pre-determined condition such as the status of the previous stage, followed by tests that targeted those environments – a true “Multi-Stage” pipeline. For a fully-automated and completely unmanned execution sequence, we wanted a mechanism where we could generically record a reference to a deployment/test execution build as soon as it started. When it ended, we wanted to capture its overall status. And, if it failed, we wanted to notify a member from our development and test community about it so that corrective action could quickly be taken against the failures. Longer-term, we wanted each of these data points to contribute towards a better understanding of how well we are doing in terms of containing changes, improving overall product quality and maintaining a steady course towards delivering high-quality software, release after release.

Out of the box, SCD did not provide any mechanism or guidance for creating such a multi-stage pipeline. There were some theoretical ideas on how it could be possible but nothing concrete to demonstrate. We brainstormed among our development and build teams and came up with a solution based on Jazz build participants. If you are unfamiliar with Jazz build participants, don’t worry; think of them as Java code snippets, associated with a build definition, that are triggered before or after the build every time it runs. As their names suggest, the pre-participant code runs at the start of the build while the post-participant code runs when it completes.
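For readers who prefer code to prose, the pre/post idea can be imitated in a few lines. This is a language-neutral sketch written in Python, not the Jazz build participant API (real participants are Java code); the `Build` class and its attributes are invented for illustration.

```python
from typing import Callable, List


class Build:
    """A toy build that runs hooks before and after its body."""

    def __init__(self, name: str, body: Callable[[], bool]) -> None:
        self.name = name
        self.body = body
        self.pre_participants: List[Callable[["Build"], None]] = []
        self.post_participants: List[Callable[["Build", bool], None]] = []

    def run(self) -> bool:
        for participant in self.pre_participants:
            participant(self)
        try:
            succeeded = bool(self.body())
        except Exception:
            succeeded = False  # a crashing build counts as a failed build
        # Post-participants always run and are told how the build ended.
        for participant in self.post_participants:
            participant(self, succeeded)
        return succeeded
```

The important property is that the post hook runs whether the body succeeds or fails, which is what lets it record a final status for every stage.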

TRACKING PIPELINE PROGRESS THROUGH A “BUILD ITEM”

Proposal

For our solution we decided to develop two Jazz build participants, a pre- and a post-, for recording progress and execution status of a given pipeline stage in an RTC work item called “The Build Item”.

- The pre-participant would record a reference to the currently running execution stage at the start of its build. It would also add a work item approval on its behalf but would leave the approval in a “Pending” state.

- At build completion time, the post-participant would record the overall status of the execution stage. Based on the status, it would move the approval to “Approved” or “Rejected”. If the execution failed, the participant code would notify the team responsible for the results.

A single build item would be associated with one-and-only-one CALM-All build and it would become the place where information about all pipeline-based executions related to that CALM-All build would be recorded. At its heart, it would be like any regular RTC work item and would allow our development, test and release engineering teams to have conversations and collaborate around the referenced build’s stability and other critical issues affecting pipeline stages. It would also become a bulletin board of useful information related to the referenced build, such as infrastructure problems, product defects affecting tests and referenced items from other repositories. Finally, it would have a well-structured workflow and contain an audit trail of all pipeline events as they occurred. The approval data would allow us to generate reports and other metrics around release stability and ship readiness of products.
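The proposal can be summarized as a small state model: one build item per CALM-All build, one approval per pipeline stage, moving from “Pending” to “Approved” or “Rejected”. The sketch below models that in memory only; the class, function names and comment wording are hypothetical and no RTC work item API is involved.

```python
from dataclasses import dataclass, field
from typing import Dict, List

PENDING, APPROVED, REJECTED = "Pending", "Approved", "Rejected"


@dataclass
class BuildItem:
    calm_all_build_id: str
    comments: List[str] = field(default_factory=list)      # audit trail
    approvals: Dict[str, str] = field(default_factory=dict)  # stage -> state


def pre_participant(item: BuildItem, stage_build_id: str) -> None:
    """Record that a stage started and leave its approval pending."""
    item.comments.append(f"{stage_build_id} started")
    item.approvals[stage_build_id] = PENDING


def post_participant(item: BuildItem, stage_build_id: str, succeeded: bool) -> str:
    """Record the final status of a stage and settle its approval."""
    status = APPROVED if succeeded else REJECTED
    item.comments.append(f"{stage_build_id} completed: {status}")
    item.approvals[stage_build_id] = status
    return status
```

Keeping both the comments and the approvals mirrors the split described above: comments are for people reading the item, approvals are for reports.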

Implementation

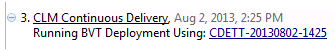

In our implementation, each deployment and test execution build gets a runtime property whose value holds a reference to the build item tracking the current “build-under-test”. The build item is created within the CALM-All build and the orchestrator code ensures that references to it are passed down to all continuous deployment builds. As soon as an execution phase starts, the pre-participant code on a given deployment/test execution build runs and records a comment like the following in the referenced build item:

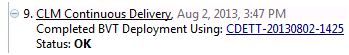

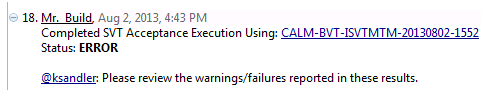

As mentioned earlier, it also adds a work item approval for itself but leaves it in a “Pending” state. Later when the execution completes, the post-participant code runs and records the following comment in the build item:

For successful executions, it moves the approval added by the pre-participant code to an “Approved” state. However, when the execution fails, the following is instead added to the build item and the approval is moved to a “Rejected” state:

For the CLM Continuous Deployment pipeline, we introduced the concept of a “Component Owner” through the use of a build property called “buildComponentOwner”. Each deployment and test execution build contains this property and its value is the Jazz.net user ID of the person responsible for the deployment/test build. The person identified by this property’s value is automatically subscribed to the build item by the pre-participant code. Additionally, when the build fails, a “@me” message is sent to inform them. Triaging and reporting on the failures then becomes the component owner’s responsibility.
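A rough sketch of the component owner logic follows. Only the property name buildComponentOwner is taken from this post; the functions, the `send` callback and the message wording are assumptions, and the real participants talk to the build item rather than to in-memory sets.

```python
from typing import Callable, Dict, Set


def subscribe_owner(build_properties: Dict[str, str], subscribers: Set[str]) -> str:
    """Pre-participant step: subscribe the component owner to the build item."""
    owner = build_properties["buildComponentOwner"]
    subscribers.add(owner)
    return owner


def notify_owner_on_failure(build_properties: Dict[str, str],
                            build_label: str,
                            succeeded: bool,
                            send: Callable[[str, str], None]) -> bool:
    """Post-participant step: message the owner only when the build failed."""
    if succeeded:
        return False
    owner = build_properties["buildComponentOwner"]
    # The message format is made up; the post only says the owner is mentioned.
    send(owner, f"@{owner} {build_label} failed; please triage.")
    return True
```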

NEXT STEPS

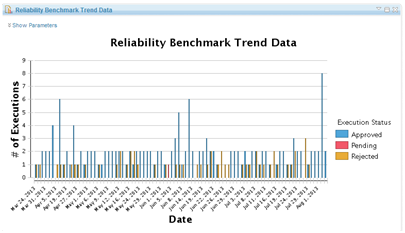

Over the last six months, our builds have been successful in creating and recording a number of these CALM-All build tracking items. They have gathered success/failure states of the various pipeline stages in both human-readable comments and approval records. Using these points of data, we have been able to develop some initial trend data reports similar to the one captured below:

In my next blog post in this series, I plan to take you through what we do with these data points, what reports we are able to generate and how the pipeline-based execution is providing us with a fair and accurate assessment of the quality of the products we ship to you.
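As a rough illustration of how approval records could be rolled up into trend data (this is not the team's actual report), the sketch below computes, for each build item, the share of settled approvals that ended up “Approved”. The function names and the input shape are hypothetical.

```python
from typing import Dict, List, Tuple


def approval_rate(approvals: Dict[str, str]) -> float:
    """Fraction of settled approvals that are Approved; 0.0 if none are settled."""
    decided = [state for state in approvals.values() if state in ("Approved", "Rejected")]
    if not decided:
        return 0.0
    return sum(1 for state in decided if state == "Approved") / len(decided)


def approval_trend(build_items: List[Tuple[str, Dict[str, str]]]) -> List[Tuple[str, float]]:
    """Keep the builds in the order given and pair each with its approval rate."""
    return [(build_id, approval_rate(approvals)) for build_id, approvals in build_items]
```

Pending approvals are ignored here so that a build still in progress does not drag the rate down; treating them differently is an equally reasonable choice.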

Maneesh Mehra, CLM Automation Architect

IBM Rational Software Group

“Some of the runtime values include … whether JTS setup must be run or not”

I’m curious about this bit: my company is looking into automating the deployment of CLM instances for our projects. We’ve been able to automate all of the Installation Manager part of the process; however, we’ve not found any information about running the /jts/setup steps in an automated manner. Are you able to share any info on how you were able to do it?

@jrussell: We use what is called the “JTS Scripted Setup”. It’s briefly described in the infocenter at https://jazz.net/help-dev/clm/topic/com.ibm.jazz.install.doc/topics/t_running_setup_command_line.html as follows:

“The setup command is a repository tools command that allows you to set up a Jazz Team Server and associated applications in an automated way. The command may be run in interactive mode which will help capture the response file needed to drive future automated installs.”

However, there is also a wiki page devoted to it, located here: https://jazz.net/wiki/bin/view/Main/ScriptableSetupForConsumers

I would recommend reading the wiki since it explains how to run the scripted setup to capture a response file for future use. In addition, the wiki links to another page that describes each of the properties captured in this response file (https://jazz.net/wiki/bin/view/Main/ScriptableSetupResponsePropertiesExplained).

Please let me know if these do not help. We may be able to do a deep dive for your specific use case.