Introduction

The Link Index Provider (LDX) is a component of the Engineering Lifecycle Management (ELM) solution that builds and maintains an index of links between artifacts. ELM applications query the LDX to get information about links. In the ELM 7.1 release, the LDX was rearchitected to use a relational database for storing link information. This article discusses the performance of the new LDX architecture.

The new LDX architecture, which IBM calls LDX rs, is based on the same architecture that was adopted for the Lifecycle Query Engine (LQE) in the 7.0.3 release. Here's what you can expect from LDX in 7.1:

- Simplified deployment topologies, allowing a single LQE rs to support both reporting and link resolution. A separate LDX server is no longer required (although that is still an option)

- Improved scalability and resilience. LDX rs handles concurrent queries more efficiently, and long link queries will no longer impact the entire LDX server

- Equivalent or better performance for link queries

- Load shifts from the LQE/LDX server to the database server:

  - Less memory is required for LDX or LQE servers

  - CPU and memory usage will increase on your database server. Consider deploying a new database server to support LQE/LDX rs.

This article covers the following topics:

- Architectural changes for LDX rs in the 7.1 release

- Deployment topology

- Data shape used for performance testing and testing environment details

- Performance test results

Standard Disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer’s ability to evaluate and integrate them into the customer’s operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment. Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user’s job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here. This testing was done as a way to compare and characterize the differences in performance between different versions of the product. The results shown here should thus be looked at as a comparison of the contrasting performance between different versions, and not as an absolute benchmark of performance.

How LQE and LDX architecture has evolved

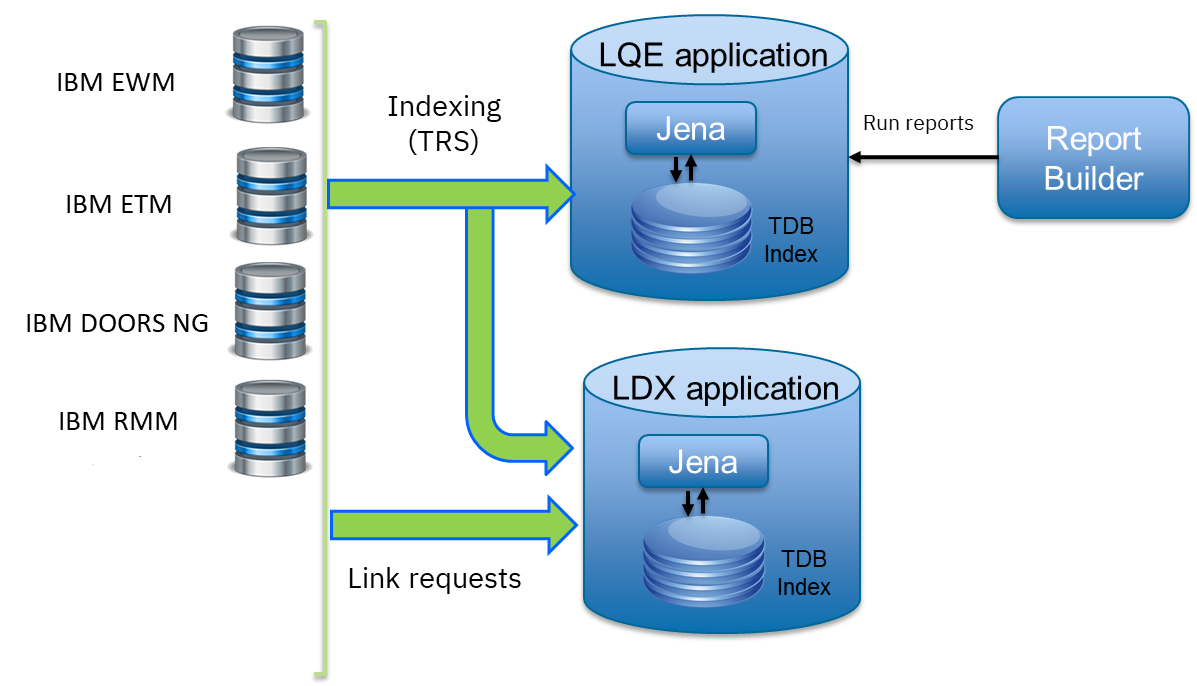

Up until the 7.0.2 release, the Lifecycle Query Engine (LQE) stored information using a triple store database provided by Apache Jena (Jena TDB). This was also true for the Link Index Provider (LDX), since LDX is just a specialized instance of LQE. This is shown in the architectural diagram below. Abbreviations:

Abbreviations: - EWM: Engineering Workflow Management

- ETM: Engineering Test Management

- ERM: Engineering Requirements Management DOORS Next

- The Jena index must be local to the LQE/LDX server machine, which prevents addressing scale issues through clustering

- Both LQE and LDX index application data, which added load to the ELM application servers

- Large Jena indexes must be almost entirely cached in memory for performance reasons, so that LQE/LDX servers require large amounts of RAM (as well as large Java heaps)

- Jena does not have a sophisticated query optimization engine, so complex traceability reports can be slow, and there are limited options to tune SPARQL queries to perform better

- There is contention for internal resources within Jena when running queries. Too many simultaneous queries will interfere with each other, and bottlenecks form when some of those queries take a long time to run

- Workloads that involve both query execution and indexing create contention between read and write operations against Jena. If a query is running when indexed data is written to disk, the updated data is temporarily stored in a journal file. Jena can combine the data in the main database with the updates in the journal to present a consistent view of the state of the database at the time the query started. The journal is merged into the main database once there are no reads pending.

LQE and LDX architecture in the 7.1 release

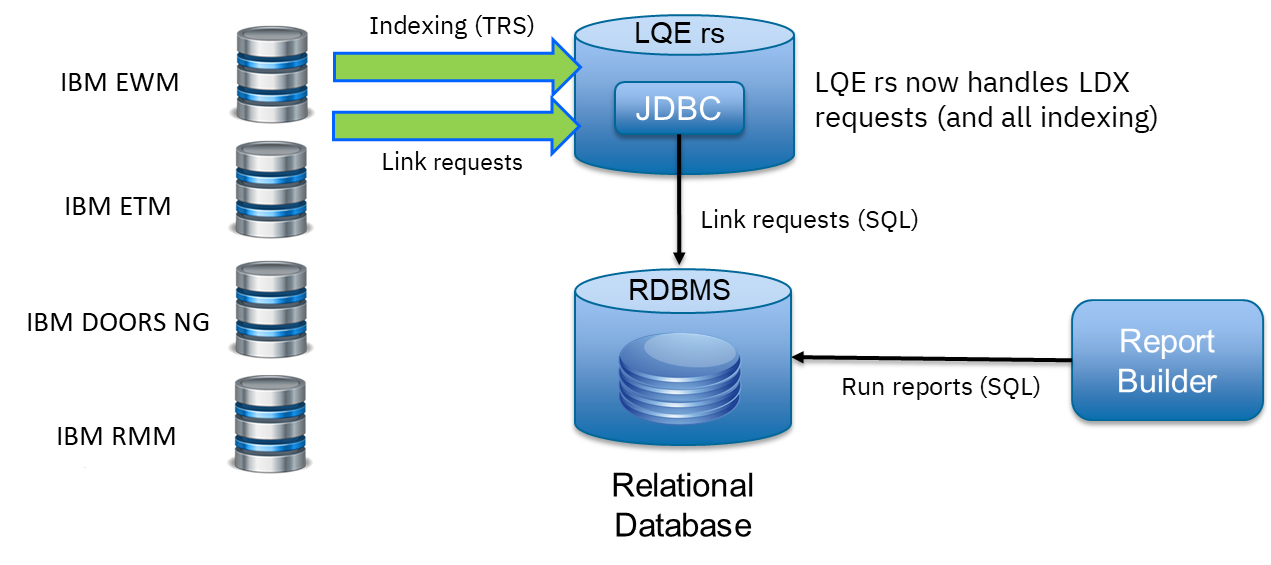

In the 7.1 release, LDX has adopted the relational store. The recommended deployment topology is shown below. Abbreviation:

Abbreviation: - RMM: Engineering Rhapsody Model Manager

- Database optimization engines are more sophisticated than Jena, allowing for improved query execution

- There is no longer a need for a separate LDX application, simplifying the topology (and reducing load on the ELM applications by eliminating unnecessary reads of the TRS feeds)

- There are more options for tuning the performance of SQL queries than there were for SPARQL queries

- Relational databases are designed for high data volumes

- Relational databases are much better at dealing with concurrent reading and writing. While locking is still needed, the locking usually happens at the row level. Lock escalation to the table level is not frequent, so one slow SQL query will not disrupt the entire server.

- Since query processing shifts from the LQE rs server to the database server in this architecture, the LQE rs server requires less RAM. RAM freed up from LQE can be moved to the database server where it can be used more efficiently. Less memory is also required for link resolution, since the caching of selections within LQE/LDX is no longer needed (that moves to the relational database).

- Data is represented more efficiently in the database compared to Apache Jena TDB files, saving storage space

- Clustering becomes possible through standard database solutions like Oracle RAC or Db2 PureScale

Test methodology

This section discusses how the LDX rs performance tests were conducted. It covers:

- The data used for testing

- The topology of the test environment

- How load was applied to the servers

Data Shape

The test repository was based on the repository used during LQE rs testing in 7.0.3. That repository was extended with 6 additional ELM applications, and links were created between the artifacts.| Application | Artifact count | Database size |

|---|---|---|

| rm1 | 10 million | 800G |

| rm2 | 20,000 | 27G |

| rm3 | 20,000 | 27G |

| etm1 | 5 million | 832G |

| etm2 | 700,000 | 15G |

| etm3 | 700,000 | 12G |

| ewm1 | 2 million | 152G |

| ewm2 | 60,000 | 65G |

| ewm3 | 60,000 | 3.5G |

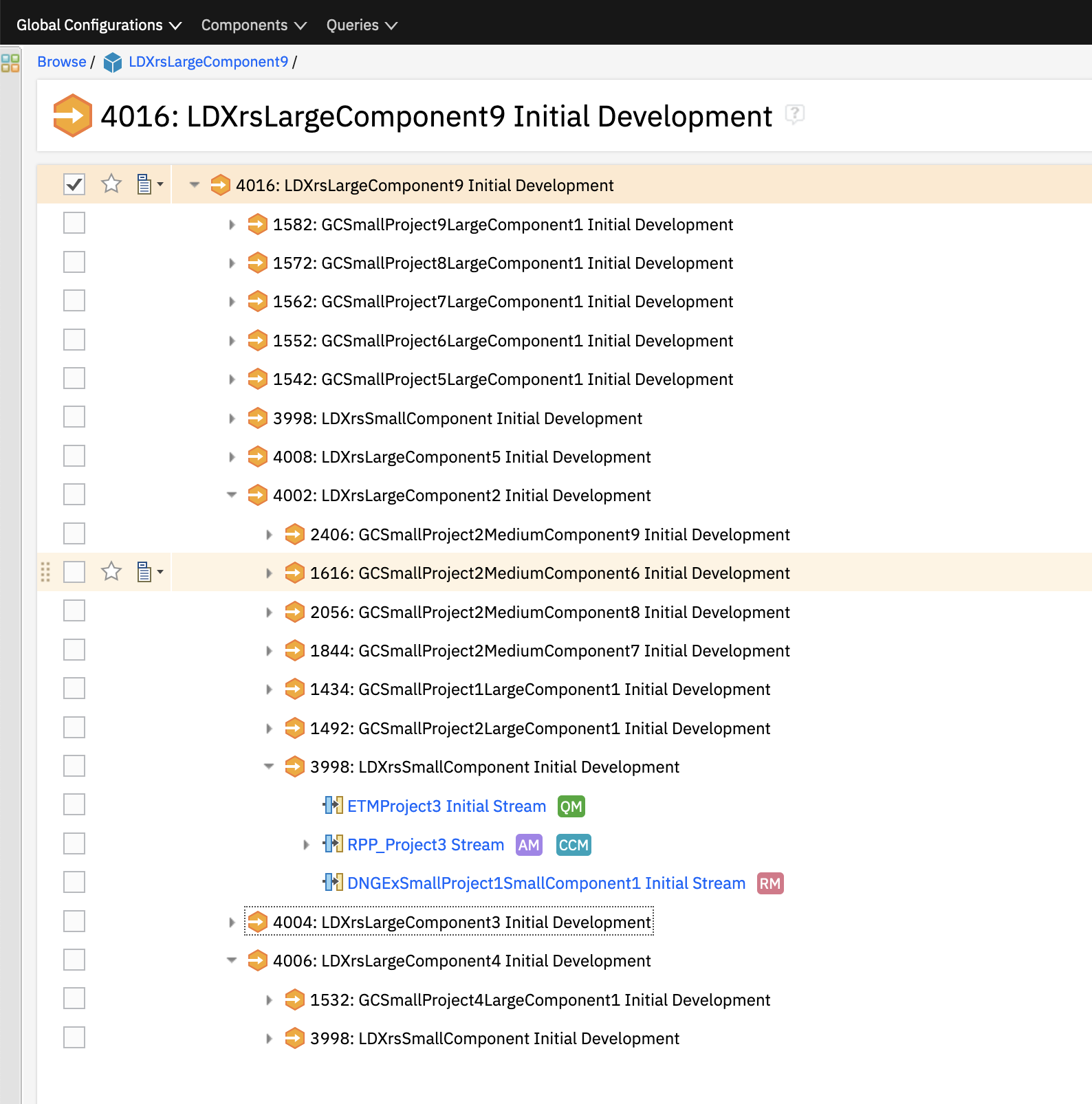

| gc | 1000 components |

Links were established between the requirements in the rm2 and rm3 servers and artifacts in ETM, EWM, and RMM:

- Validated By (ETM): Links artifacts in ERM that are validated by test cases in ETM

- Tracked By (EWM): Links artifacts in ERM that are tracked by work items in EWM

- Implemented By (EWM): Links artifacts in ERM that are implemented by tasks in EWM

- Affected By (EWM): Links artifacts in ERM that are affected by changes in EWM

- Derives Architecture Element (RMM): Links artifacts in ERM that derive architectural elements in RMM

- Traced By Architecture Element (RMM): Links artifacts in ERM that are traced by architectural elements in RMM

- Satisfied By Architecture Element (RMM): Links artifacts in ERM that are satisfied by architectural elements in RMM

- Refined By Architecture Element (RMM): Links artifacts in ERM that are refined by architectural elements in RMM

- EWM = Engineering Workflow Management

- ETM = Engineering Test Management

- ERM = Engineering Requirements Management DOORS Next

- RMM = Engineering Rhapsody Model Manager

Load testing methodology

In normal operation, users interact with applications, and those applications decide whether to send requests for link information to LDX. The load tests simulate user activity by sending requests directly to the LDX server, bypassing the applications. Direct interaction with the LDX during load testing offers several advantages:

- More precise control of the workload applied to LDX is possible

- Application-side bottlenecks that might restrict traffic to the LDX are avoided

The workload has the following characteristics:

- Each request asks for links for 1000 artifacts

- Each simulated user makes requests as fast as possible (no think time)
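The workload described above (requests for 1000 artifacts at a time, issued back to back with no think time) can be sketched in a few lines of Python. This is only an illustration of the approach, not the tool used in these tests: `run_load`, `send_request`, and the placeholder URLs are hypothetical names, and `send_request` stands in for whatever HTTP client call would actually query LDX.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def run_load(send_request, threads, requests_per_thread, urls_per_request=1000):
    """Drive `send_request` from `threads` workers with no think time.

    `send_request` is a hypothetical callable that takes a list of artifact
    URLs and performs one link query; plug a real HTTP client in there.
    """
    # Placeholder artifact URLs; a real run would use URLs from the repository.
    urls = [f"https://example.invalid/artifact/{i}" for i in range(urls_per_request)]

    def worker(thread_index):
        timings = []
        for _ in range(requests_per_thread):
            start = time.perf_counter()
            send_request(urls)  # the next request is issued immediately
            timings.append(time.perf_counter() - start)
        return timings

    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=threads) as pool:
        per_thread = list(pool.map(worker, range(threads)))
    elapsed = time.perf_counter() - started

    timings = [t for thread_timings in per_thread for t in thread_timings]
    return {
        "requests": len(timings),
        "avg_response_s": mean(timings),
        "throughput_rps": len(timings) / elapsed,
    }
```

Passing a stub such as `lambda urls: time.sleep(0.01)` as `send_request` is enough to try the harness without a server.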

Threads vs. users

The performance tests characterize workload in terms of threads. The workload is generated by sending requests directly to the LDX server from a test program, and the test program simulates multi-user load by running multiple threads. Each thread can be thought of as simulating a very active user that does nothing but make requests for links. There is no pausing, so each thread makes requests as fast as it can. This is an extremely intense workload that is designed to drive the LDX rs system to its limits. Real users are much less active, and interact with LDX rs indirectly through the applications. For example, when a user opens a view that has link columns using ERM, a request will be sent to LDX rs from the ERM server. You can consider each thread (or simulated user) to be the equivalent of 20 real users.

Performance Automation Tools Used in Testing

To evaluate the performance of LDX rs under various conditions, we used a combination of custom-developed automation tools and industry-standard monitoring solutions. This approach allowed us to generate load, measure response times, and monitor throughput and CPU usage.

Load Generation and Response Time Measurement

- Custom Java Automation Tool:

  - Functionality: The tool was developed in Java to generate load on the LDX rs REST API using multiple threads. It triggers LDX rs API calls in parallel based on the settings in a configuration file.

  - Configuration Options:

    - Thread Load: Number of concurrent threads.

    - Database Type: Choice between relational databases and Jena.

    - Number of Target URLs: The number of URLs to target per API call.

    - API Call Frequency: How many times to trigger the same API.

    - ‘RunBoth’ Property: If set to true, the tool can handle load for both relational databases and Jena simultaneously and compare their results at the end.

  - Output: The tool generates a simple text file as the result file, capturing the response times for each test run.

  - Usage: This tool was instrumental in measuring the response time of the LDX rs API under different thread loads and configurations, providing essential data for performance analysis.

- NMON:

  - Functionality: NMON is used for monitoring system performance and resource usage.

  - Integration: The NMON tool was started automatically by the Java automation tool during each performance run.

  - Output: After the performance run, NMON data was used for detailed analysis of CPU usage and other system metrics over the duration of the test.

- IBM Instana:

  - Functionality: IBM Instana Observability automatically discovers, maps, and monitors all services and infrastructure components, providing complete visibility across your application stack. We used it to monitor throughput and CPU usage during the load tests.

  - Configuration:

    - Central Monitoring: The Instana backend was configured on a central machine.

    - Agents: Instana agents were running on each server that needed to be monitored.

  - Output: Instana provided real-time data and insights into the system's performance, aiding in the identification of performance bottlenecks.

Test Environment Details

Performance tests were conducted against 4 different test environments, in order to examine LDX performance on both Oracle and Db2, and to collect baseline data from Jena. The 4 test systems:

| # | Description | Specs | Notes |
|---|---|---|---|
| 1 | LQE Jena | 40 vCPU, 768G RAM | |
| 2 | LQE rs/Oracle | 40 vCPU, 768G RAM | Oracle co-located with LQE rs |
| 3 | LQE rs/Db2 | 40 vCPU, 768G RAM | Db2 co-located with LQE rs |
| 4 | LDX rs (Oracle) | Oracle: 80 vCPU, 768G RAM; LDX rs: 24 vCPU, 64G RAM | LDX rs and Oracle on separate servers |

Topologies 1-3 are the same as those used in the LQE rs performance testing in 7.0.3. Topology 4 sets up an LDX rs server with its own dedicated database server. LDX rs and Oracle are each deployed onto separate servers, and the Oracle instance uses high-speed storage (a RAID 10 array of 24 NVMe drives). The servers in the test environment are all physical servers. The hardware specifications are listed below.

| Role | Server | Machine Type | Processor | Total Processors | Memory | Storage | OS Version |
|---|---|---|---|---|---|---|---|
| LQE rs/Oracle | Oracle 19c | IBM System SR650 | 2 x Xeon Silver 4114 10C 2.2GHz (ten-core) | 40 | 768 GB | RAID 10 – 900GB SAS Disk x 16 | RHEL 7 |
| LQE/Jena | Oracle 19c | IBM System SR650 | 2 x Xeon Silver 4114 10C 2.2GHz (ten-core) | 40 | 768 GB | RAID 10 – 900GB SAS Disk x 16 | RHEL 7 |
| LQE rs/Db2 | Db2 11.5 | IBM System SR650 | 2 x Xeon Silver 4114 10C 2.2GHz (ten-core) | 40 | 768 GB | RAID 10 – 900GB SAS Disk x 16 | RHEL 7 |
| LDX rs | WebSphere Liberty | IBM System x3550 M4 | 2 x Intel Xeon E5-2640 2.5GHz (six-core) | 24 | 64 GB | RAID 1 – 300GB SAS Disk x 4 – 128K Strip Size | RHEL 7 |
| Oracle for LDX rs | Oracle 19c | IBM System SR650 V2 | 2 x Xeon Silver 4114 10C 2.2GHz (ten-core) | 80 | 768 GB | RAID 10 – 800GB NVMe Kioxia Mainstream Drive x 24 – 7.3 TB on /mnt/raid10 | RHEL 8 |

Performance Test Results

This section presents the results of the performance tests against the 4 topologies. In summary:

- LDX rs outperforms the Jena architecture by a significant margin, especially at higher load levels.

- The limiting factor is the number of processors on the server hosting LDX or LQE.

- Higher throughputs should be possible by adding more CPUs

Single-user testing

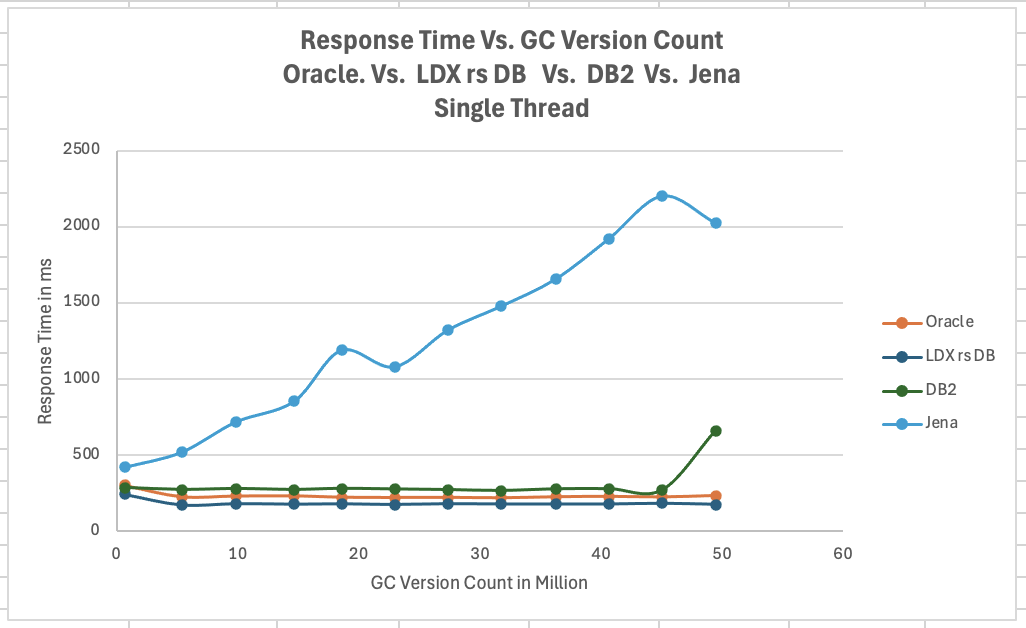

This section looks at the response times for LDX rs queries for the 4 different test topologies, using one simulated user. You can consider these to be "best case" numbers since they reflect performance when the systems are not under heavy load. Each request asks for the links associated with 1000 different artifacts. The tests were repeated with global configurations of various sizes (ranging from 700,000 versions to 50 million versions).

Test Parameters:

| Parameter | Value |
|---|---|
| Threads | Single thread |
| Target URLs | 1000 |
| GC Selection Size | 0.7M to 50M |
| Number of Link types | 7 |

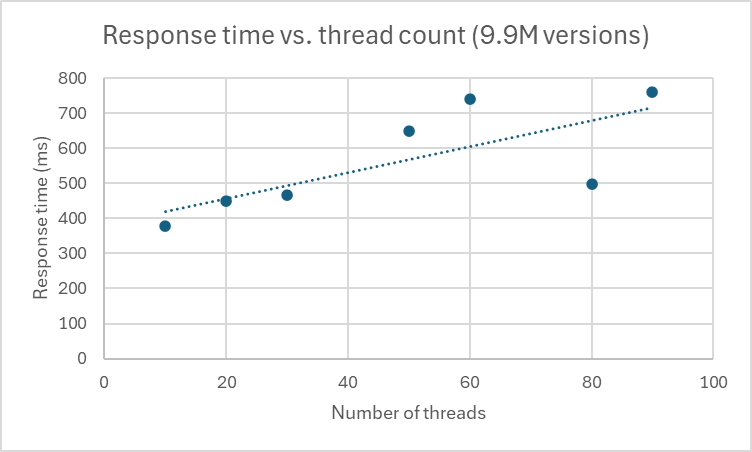

The chart below summarizes the response times for a query returning links for 1000 URLs, executed against all 4 topologies. The relational store topologies outperform Jena at every level of scale, and are not sensitive to the version counts. LQE/Jena degrades as the version counts increase.

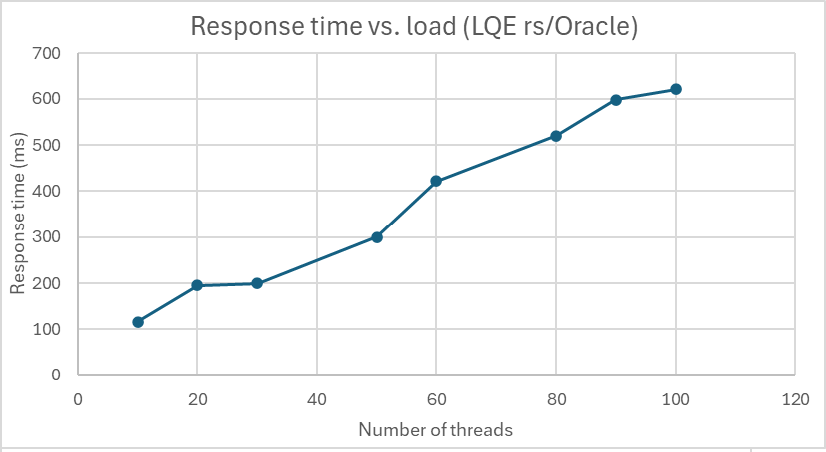

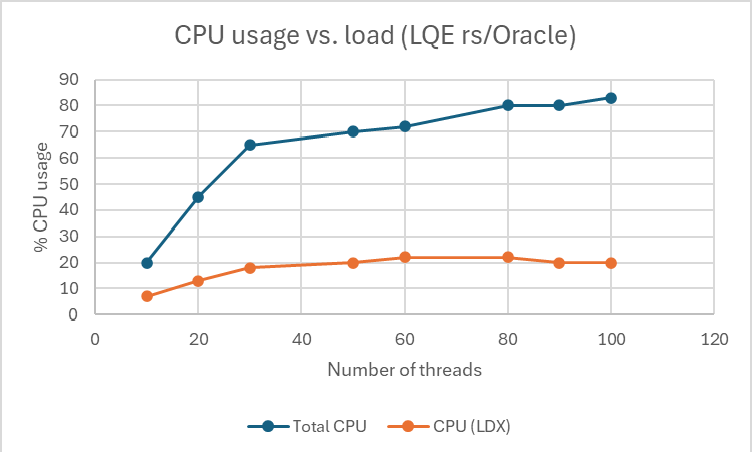

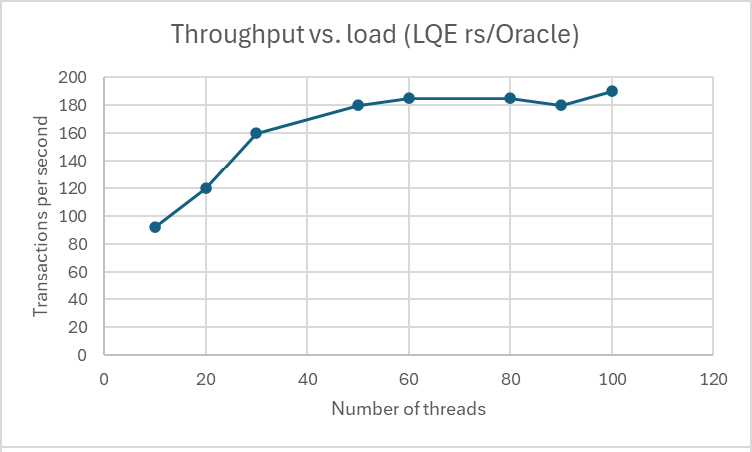

Load test results: LQE rs/Oracle

This section presents the results of the load testing against topology 2 (LQE rs/Oracle).

Test Parameters:

| Parameter | Value |
|---|---|
| Threads | 10 to 100 |
| Target URLs | 1000 |
| GC Selection Size | 40M |
| Number of Link types | 7 |
| Environment | LQE rs/Oracle (topology 2) |

- Maximum throughput: 185 requests per second (at 60 threads)

- Throughput is limited by available CPU

- Response times increase gradually if the system becomes overloaded

- Disk utilization on the database server is low

- System behaviour under load is improved when compared to LQE/Jena

Disk utilization on the LQE rs/Oracle server is low (15% at 100 threads). The storage subsystem is not a bottleneck.


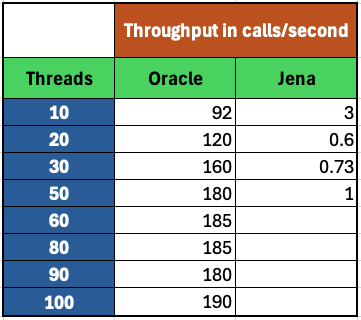

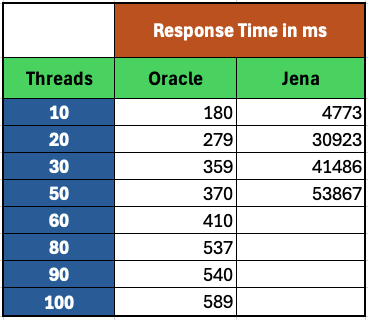

Comparing to LQE/Jena

LQE/Jena reaches a maximum throughput of 3 transactions per second at only 10 threads. Response times for LQE/Jena are much higher than for LQE rs/Oracle, and the Jena response times degrade quickly as load increases.

Load test results: LQE rs/Db2

This section presents the results of the load testing against LQE rs/Db2 (topology 3).

Test Parameters:

| Parameter | Value |
|---|---|
| Threads | 10 to 100 |
| Target URLs | 1000 |
| GC Selection Size | 0.7M to 50M |
| Number of Link types | 7 |
| Environment | Topology 3 |

- LQE rs/Db2 performance at load is impacted by the number of versions in a global configuration

- Throughput can vary from 200 transactions per second (700k version) to 50 transactions per second (50M version)

- LQE rs/Db2 outperforms LQE/Jena by a significant margin

- Throughput is additionally limited by available CPU

- Response times increase gradually if the system becomes overloaded

- System tolerates overloads without crashing

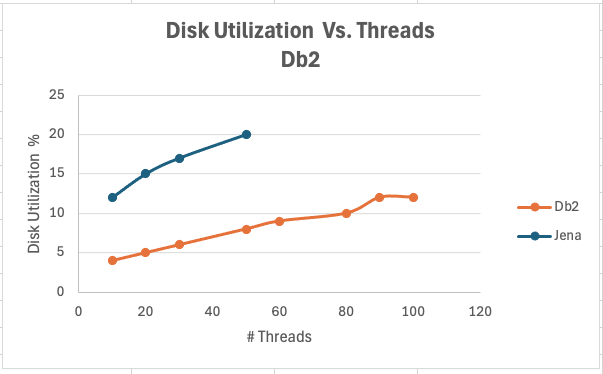

- Disk utilization on the database server is low

Comparing to LQE/Jena

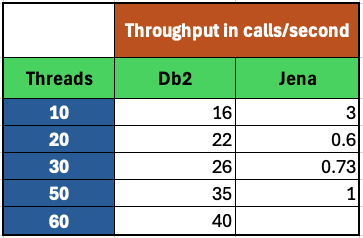

Throughput

The table below compares the throughput of LQE rs/Db2 to LQE/Jena, for a large GC containing 40 million versions. LQE rs/Db2 outperforms LQE/Jena significantly, even with this large GC.

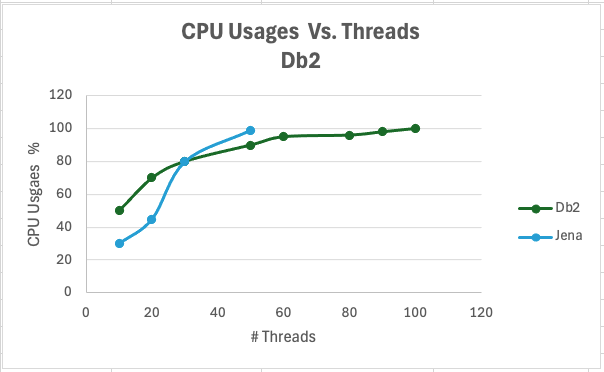

CPU Usage

The chart below shows CPU usage for LQE rs/Db2 and Jena, as load increases. For LQE rs/Db2, the server has maxed out the CPU at 60 threads. In this topology, the LQE rs server and the Db2 server are co-located; separating the LQE rs application onto its own server would improve performance. LQE/Jena becomes non-responsive past 50 threads.

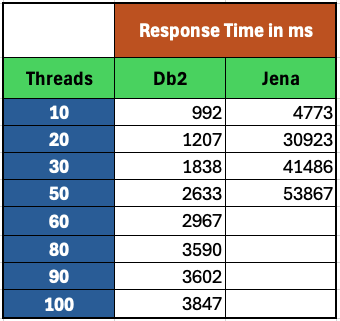

Average Response Time

The table below shows how response times vary as load increases, for both LQE rs/Db2 and LQE/Jena, for a large GC selection size of 40 million versions. LQE rs/Db2 outperforms Jena.

Looking at the response times for LQE rs/Db2 using a smaller GC (10 million versions), the response times increase slightly as the load increases. For this run, the CPU on the test system maxes out around 60 threads.

Disk Utilization on DB Server

Disk utilization on the DB server shows a gradual increase as load increases but remains low. The storage subsystem is not a bottleneck.


LDX rs/Oracle

This section analyzes the performance of the LDX rs system in a topology where the LDX application and the database are hosted on separate servers. Oracle is deployed as the relational store on the dedicated database server.

Test Parameters:

| Parameter | Value |
|---|---|
| Threads | Up to 500 |
| Target URLs | 1000 |
| GC Selection Size | 40M |
| Number of Link types | 7 |
| Topology | Topology 4 |

Topology 4 involves a standalone LDX rs and a separate high-capacity database server:

- LDX rs server: 24 vCPU, 32G RAM

- Database server: 80 vCPU, 768G RAM

- Storage: RAID 10 array of 24 NVMe drives

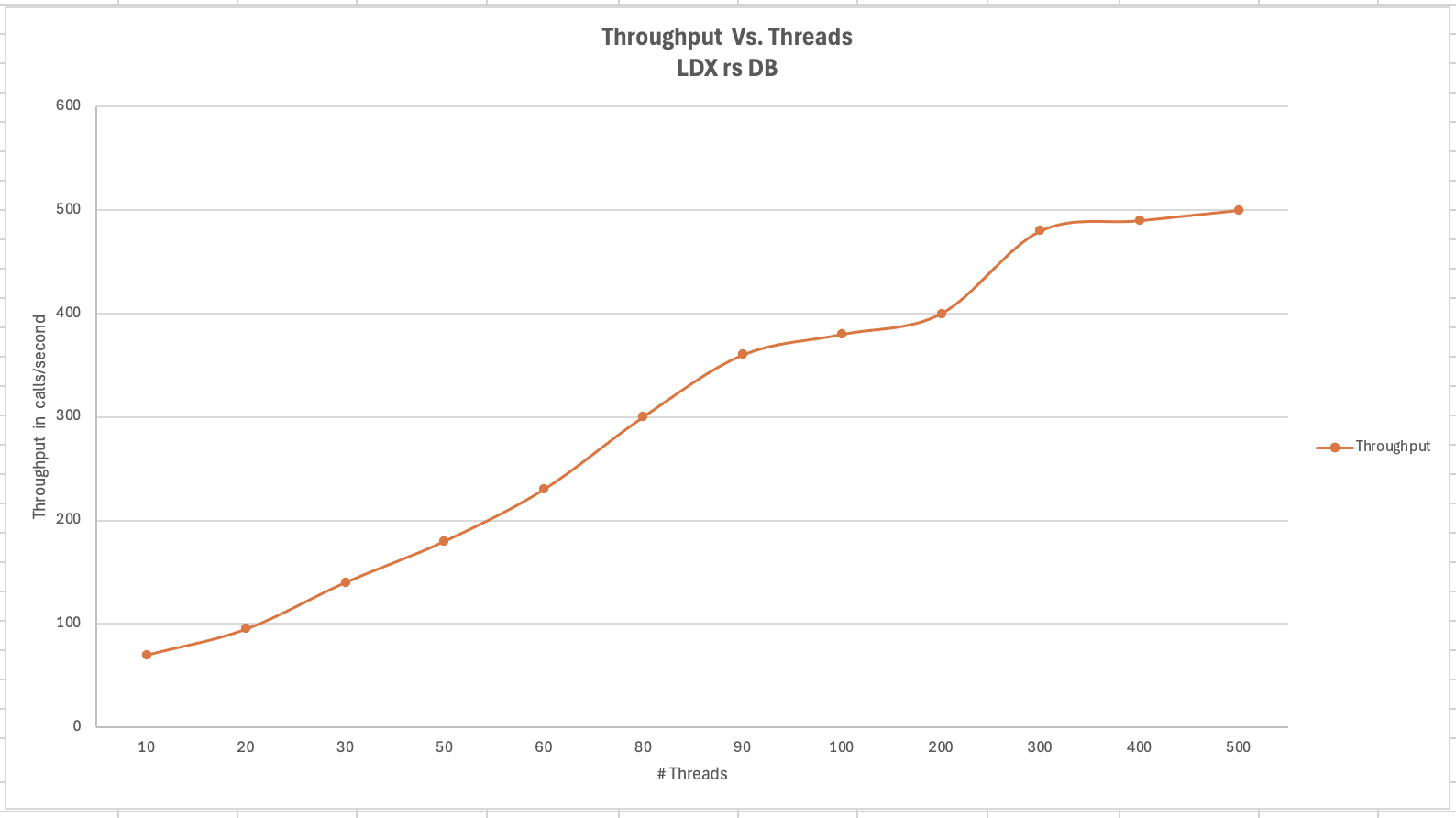

- Maximum throughput: 500 requests per second at 500 threads, with throughput starting to level off starting at 300 threads.

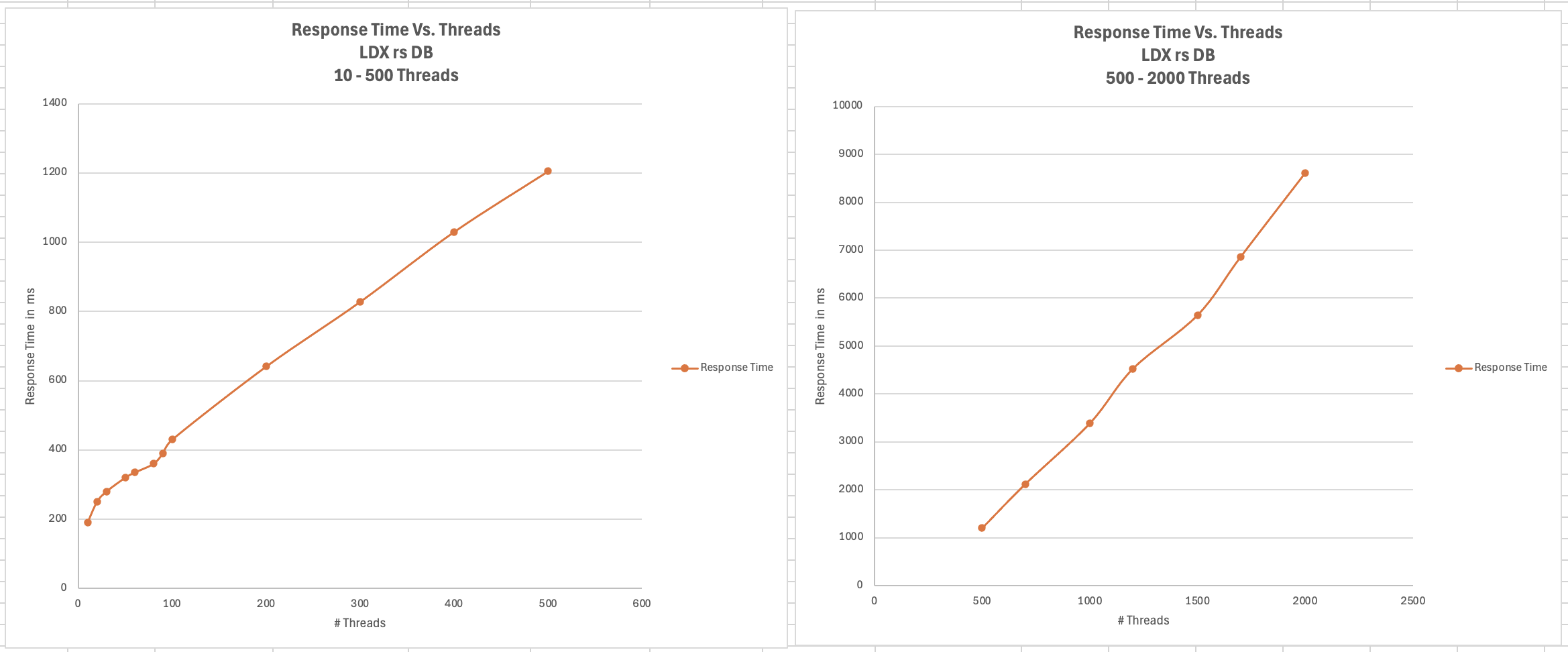

- Response times increase slightly as the workload increases, but stays below 1s up until 400 threads. As load increases past that point, response times continue to increase.

- The LDX rs server nears 100% CPU around 500 threads (90% CPU at 400 threads)

- The system could handle up to 2000 threads without hanging or crashing, but response times had degraded to 9 seconds at that load.

- CPU and Disk utilization on the database server is low. The database is not a bottleneck for this workload.
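Because each test thread issues its next request as soon as the previous one returns, the test behaves like a closed system with zero think time, so Little's Law (threads = throughput × response time) ties the reported figures together. The sketch below is a rough cross-check under that assumption, not a measurement: the function names are illustrative, and the 2000-thread throughput it yields is an estimate derived from the reported 9-second response time. The factor of 20 real users per thread is the rule of thumb given earlier in this article.

```python
REAL_USERS_PER_THREAD = 20  # rule of thumb stated in this article


def throughput_rps(threads, avg_response_s):
    """Little's Law for a closed system with zero think time: X = N / R."""
    return threads / avg_response_s


def response_time_s(threads, throughput):
    """Same relationship solved for response time: R = N / X."""
    return threads / throughput


def equivalent_real_users(threads):
    """Approximate number of real users represented by a thread count."""
    return threads * REAL_USERS_PER_THREAD


# 500 threads at 500 requests/s implies about a 1 second average response time.
print(response_time_s(500, 500))        # 1.0
# 2000 threads at 9 s per request implies roughly 222 requests/s (an estimate).
print(round(throughput_rps(2000, 9)))   # 222
# 500 threads correspond to about 10,000 real users.
print(equivalent_real_users(500))       # 10000
```

The first result is consistent with throughput leveling off near the point where response times approach 1 second.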

CPU Usage

At 500 threads, the LDX rs server’s CPU usage nears 95%, while the database server still has spare CPU capacity. This indicates that the LDX rs server is the bottleneck rather than the database. As the load increases beyond 500 threads, the LDX rs server becomes overloaded, and response times degrade. The database server’s CPU usage remains relatively constant beyond 500 threads. Database CPU usage does not increase since the bottleneck is in the LDX rs server.

Average Response Time

The response time analysis is presented in two charts. The first chart illustrates the system’s response time up to its effective limit of 500 threads. In this range, the response time remains consistently under 1 second. The second chart shows the system’s behavior under extreme load, ranging from 500 to 2000 threads. In this scenario, the response time increases as the thread count increases. Despite the increasing response times, the system continues to process requests, unlike in the case of Jena, which stops responding after 50 threads. This indicates that while the system experiences performance degradation under high load, it still maintains functionality, processing requests without failing.

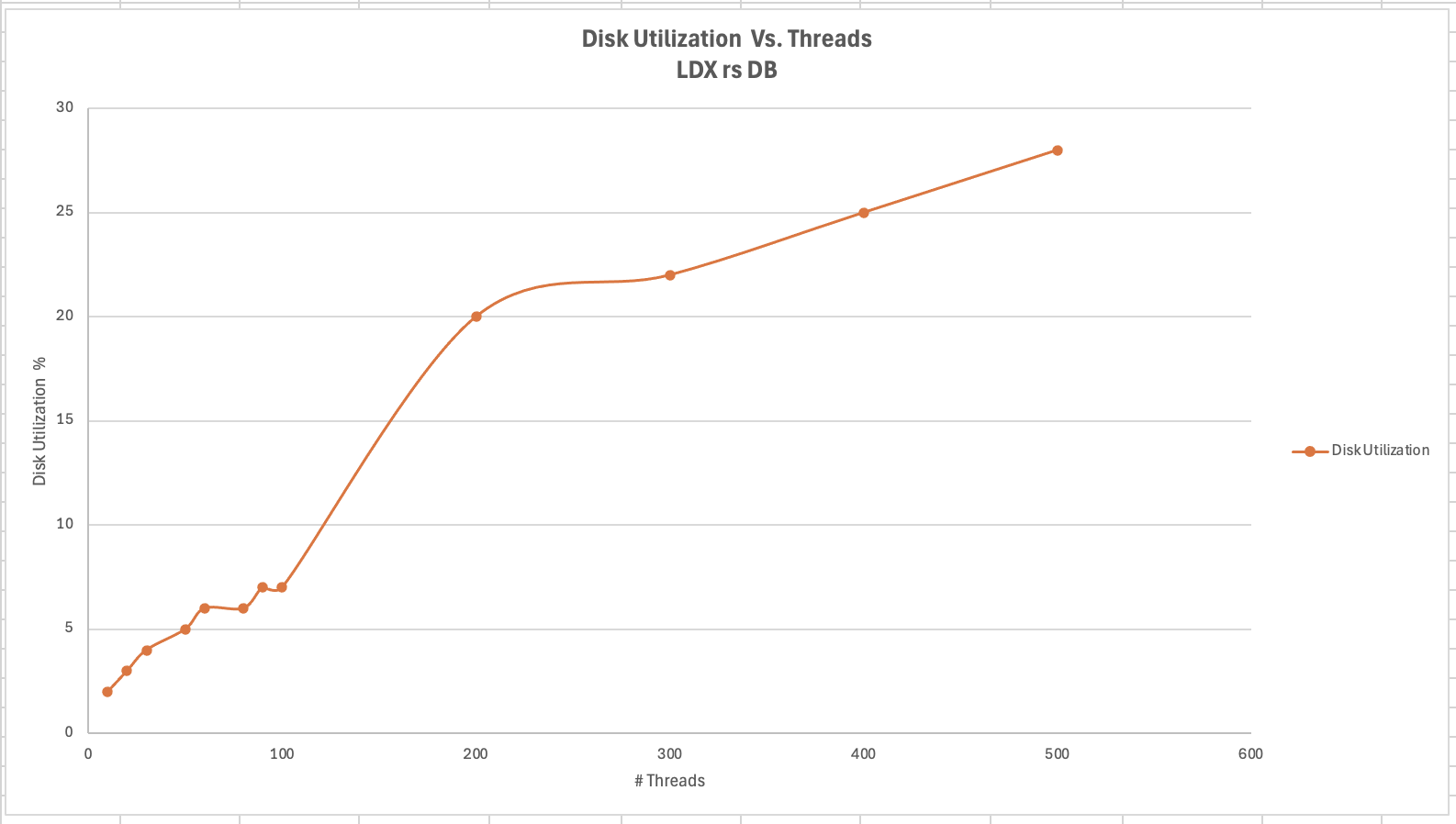

Disk Utilization

Disk utilization on the database server increases as the thread count rises. The disk I/O stabilizes at around 30% after the load reaches 1000 threads. This indicates that the disk is not a bottleneck, as it has sufficient capacity to handle the I/O demands even under higher loads.

Tuning LDX rs for large workloads

To ensure optimal performance and stability of LDX rs under high load, several settings need to be adjusted for large workloads, such as scenarios involving 1000, 2000, or 5000 concurrent threads. These adjustments aim to improve connection handling. The sections below provide recommendations for tuning critical parameters on the LDX rs server, JTS server, IBM HTTP Server (IHS), Oracle database, and Db2 database to handle large workloads effectively.

LDX rs tuning

Apply the following settings so that LDX rs can manage a high number of concurrent connections and avoid bottlenecks caused by connection limits.

Increase Maximum Total Connections and Maximum Connections Per Route:

- Navigate to the LDX rs advanced properties section.

- Set the following properties:

  - Maximum Total Connections: Increase the value based on the expected load. For example, set it to 2000 for 2000 concurrent threads.

  - Maximum Connections Per Route: Match this value with the Maximum Total Connections property. If the load is 2000, set this property to 2000.

- On the Relational Store Settings page, ensure that the 'Process query metrics' option is disabled.

  - If enabled, this setting can introduce additional overhead on LDX rs, reducing performance under heavy workloads.

JTS tuning

Apply the following settings to manage the number of outgoing HTTP connections effectively and avoid resource contention.

Increase Outgoing HTTP Connection Properties:

- Navigate to the JTS advanced properties section and locate the following properties:

  - Maximum Outgoing HTTP Connections per Destination: Set the value based on the load on LDX rs. For a load of 2000 threads, set this property to 2000.

  - Maximum Outgoing HTTP Connections Total: Similarly, set this property to 2000 for a load of 2000 threads.

IHS tuning

Adjust the maximum number of clients (concurrent HTTP requests on the IHS server) so that IHS can handle high loads.

Increase MaxClients Value in httpd.conf:

- Open the 'httpd.conf' configuration file on IHS.

- Set the 'MaxClients' value based on the expected load. For example:

  - If LDX rs is expected to handle 2000 concurrent threads, set MaxClients to 2000.
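The LDX rs, JTS, and IHS recommendations above all follow the same rule: size each connection limit to the expected number of concurrent threads. The sketch below simply collects that rule in one place. The function name and the dictionary layout are illustrative assumptions, not a real configuration format; the keys are the property labels used in this article.

```python
def connection_settings(expected_threads):
    """Map an expected concurrent thread count to the settings named above.

    The grouping by server is for readability only; it does not correspond
    to any file or API that the products read.
    """
    if expected_threads <= 0:
        raise ValueError("expected_threads must be positive")
    return {
        "LDX rs": {
            "Maximum Total Connections": expected_threads,
            "Maximum Connections Per Route": expected_threads,
        },
        "JTS": {
            "Maximum Outgoing HTTP Connections per Destination": expected_threads,
            "Maximum Outgoing HTTP Connections Total": expected_threads,
        },
        "IHS (httpd.conf)": {
            "MaxClients": expected_threads,
        },
    }


# Example: print a checklist for a 2000-thread workload.
for server, properties in connection_settings(2000).items():
    for name, value in properties.items():
        print(f"{server}: {name} = {value}")
```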

Oracle tuning

For optimal performance on Oracle, we recommend tuning the parameters below while keeping filesystemio_options and parallel_degree_policy at their default values, to maintain system stability and avoid potential overhead.

alter system set memory_max_target=250G scope=spfile;

alter system set memory_target=250G scope=spfile;

alter system set sga_max_size=0 scope=spfile;

alter system set sga_target=0 scope=spfile;

alter system set pga_aggregate_limit=0 scope=spfile;

alter system set pga_aggregate_target=0 scope=spfile;

-- Restart the database. Note that in the test we also fixed the sizes of sga_max_size, db_cache_size and session_cached_cursors.

alter system set sga_max_size=123G scope=spfile;

alter system set db_cache_size=32G scope=spfile;

alter system set session_cached_cursors=1000 scope=spfile;

-- Restart the database.

-- Disable adaptive plans.

alter system set optimizer_adaptive_plans=false;


Db2 tuning

This section lists the Db2 configuration parameters used during the performance testing. We used the following settings for the dwDB database:

| Parameter | Setting |
|---|---|
| Log file size (4KB) | (LOGFILSIZ) = 393216 |
| Number of primary log files | (LOGPRIMARY) = 60 |
| Number of secondary log files | (LOGSECOND) = 112 |
| Size of database shared memory (4KB) | (DATABASE_MEMORY) = 64000000 = 256G |
| Buffer pool BP_32K size | 3950000 = 126G |

Restart the Db2 instance for the changes to take effect.
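The Db2 values above are expressed in pages (4 KB pages for the log and database memory settings, and 32 KB pages for the BP_32K buffer pool, as its name suggests). The sketch below shows how the page counts translate into sizes. It assumes the rounding convention that the table appears to use (1 GB = 1,000,000 KB); the helper name is illustrative.

```python
def pages_to_gb(pages, page_kb):
    """Convert a page count to GB, taking 1 GB as 1,000,000 KB."""
    return pages * page_kb / 1_000_000


# Values from the table above.
LOGFILSIZ = 393_216          # 4 KB pages per log file
LOGPRIMARY = 60
LOGSECOND = 112
DATABASE_MEMORY = 64_000_000  # 4 KB pages
BP_32K_PAGES = 3_950_000      # 32 KB pages

database_memory_gb = pages_to_gb(DATABASE_MEMORY, 4)
buffer_pool_gb = pages_to_gb(BP_32K_PAGES, 32)
log_file_gb = pages_to_gb(LOGFILSIZ, 4)
# Upper bound on log space if every primary and secondary log file is in use.
max_log_space_gb = pages_to_gb((LOGPRIMARY + LOGSECOND) * LOGFILSIZ, 4)

print(f"Database memory: {database_memory_gb:.0f} GB")
print(f"BP_32K buffer pool: {buffer_pool_gb:.1f} GB")
print(f"One log file: {log_file_gb:.2f} GB")
print(f"Maximum log space: {max_log_space_gb:.1f} GB")
```

With these settings, the transaction logs alone can grow to roughly 270 GB, which is worth budgeting for when sizing storage on the database server.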

Additional details on the data shape

Artifacts count in DOORS NEXT

The data shape for DOORS Next is made up of standard-sized components, which are built from modules of 3 standard sizes:

- Small (200 artifacts)

- Medium (1500 artifacts)

- Large (10,000 artifacts)

The number of modules and artifacts in each component size is summarized below.

| Artifact type | Small component | Medium component | Large component |
|---|---|---|---|
| Number of large modules | 0 | 0 | 1 |
| Number of medium modules | 0 | 3 | 0 |
| Number of small modules | 20 | 0 | 0 |
| Total module artifacts | 4200 | 4500 | 10000 |
| Non-module artifacts | 100 | 100 | 100 |

We created 1 small project with 3 components: 1 small, 1 medium, and 1 large. The number of artifacts for this standard small project is summarized below.

| Artifact type | Count |
|---|---|
| Large components | 1 |
| Medium components | 1 |
| Small components | 1 |
| Total components | 3 |
| Module artifacts | 18,700 |
| Non-module artifacts | 300 |
| Total artifacts | 19,000 |
| Large modules (10,000 artifacts) | 1 |
| Medium modules (1,500 artifacts) | 3 |
| Small modules (200 artifacts) | 20 |

In total, one DOORS Next small project contains 19,000 artifacts.
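The project totals follow directly from the per-component figures. As a quick check, the sketch below adds up the "Total module artifacts" and "Non-module artifacts" rows of the component table; the dictionary layout and variable names are illustrative.

```python
# Per-component counts taken from the component table above.
components = {
    "small": {"module": 4_200, "non_module": 100},
    "medium": {"module": 4_500, "non_module": 100},
    "large": {"module": 10_000, "non_module": 100},
}

module_artifacts = sum(c["module"] for c in components.values())
non_module_artifacts = sum(c["non_module"] for c in components.values())
total_artifacts = module_artifacts + non_module_artifacts

print(module_artifacts, non_module_artifacts, total_artifacts)  # 18700 300 19000
```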

Artifacts count in ETM

| Artifact Type | Count |

|---|---|

| test plans | 12 |

| test cases | 10,200 |

| test scripts | 11,875 |

| test suites | 780 |

| test case execution records | 50,756 |

| test suite execution records | 720 |

| test case results | 122,400 |

| test suite results | 3,600 |

| test execution schedules | 2,848 |

| test phases and test environments | 840 |

| build definitions and build records | 244 |

Artifacts count in EWM

There are a total of 30,187 work items in the EWM project area.

| Work Item Type | Count |
|---|---|
| Defect | 4,537 |
| Task | 25,609 |
| Story | 38 |
| Epic | 3 |
| Total | 30,187 |

Table sizes in the LQE rs repository

| TABLE NAME | ROW COUNT |

|---|---|

| SELECTIONS_SELECTS | 411549948 |

| URL | 135587348 |

| WORK_ITEM_LINK_PROP | 18371610 |

| RDF_STATEMENT | 4846212 |

| RDF_STATEMENT_RDF_TYPE | 4846212 |

| VERSION_RESOURCE | 4251587 |

| VERSION_RESOURCE_RDF_TYPE | 4251587 |

| TEST_SCRIPT_STEP_RESULT_LINK_PROP | 3110140 |

| TEST_RESULT_LINK_PROP | 2956644 |

| WORK_ITEM_SUBSCRIBERS | 2731486 |

| WORK_ITEM_SCHEDULE_RDF_TYPE | 1954679 |

| WORK_ITEM | 1954679 |

| WORK_ITEM_SCHEDULE | 1954679 |

| WORK_ITEM_RDF_TYPE | 1954679 |

| TEST_SCRIPT_STEP | 1567500 |

| TEST_SCRIPT_STEP_RDF_TYPE | 1567500 |

| TEST_EXECUTION_RECORD_LINK_PROP | 1091366 |

| TEST_SCRIPT_STEP_RESULT_RDF_TYPE | 969595 |

| TEST_SCRIPT_STEP_RESULT | 969595 |

| REQUIREMENT_RELATIONSHIP | 851067 |

| REQUIREMENT_LINK_PROP | 851066 |

| ITEM | 832101 |

| RDF_STATEMENT_LINK_PROP | 645587 |

| ARCHITECTURE_ELEMENT_RDF_TYPE | 522294 |

| TEST_CASE_REQUIREMENT_RELATIONSHIP | 458191 |

| TEST_RICH_TEXT_SECTION | 428836 |

| TEST_RICH_TEXT_SECTION_RDF_TYPE | 428836 |

| ITEM_LINK_PROP | 427743 |

| TEST_SCRIPT | 391875 |

| TEST_SCRIPT_RDF_TYPE | 391875 |

| TEST_CASE | 390207 |

| TEST_CASE_RDF_TYPE | 390207 |

| TEST_EXECUTION_SUITE_ELEMENT_LINK_PROP | 296265 |

| ARCHITECTURE_ELEMENT | 261147 |

| TEST_RESULT_RDF_TYPE | 242398 |

| TEST_RESULT | 242398 |

| WORK_ITEM_REQUIREMENT_RELATIONSHIP | 201702 |

| REQUIREMENT_RDF_TYPE | 198532 |

| TEST_SUITE_RESULT_LINK_PROP | 188803 |

| TEST_CASE_LINK_PROP | 143323 |

| TEST_SCRIPT_STEP_LINK_PROP | 120000 |

| REQUIREMENT | 99266 |

| TEST_EXECUTION_RECORD | 99134 |

| TEST_EXECUTION_RECORD_RDF_TYPE | 99134 |

| TEST_EXECUTION_SUITE_ELEMENT_RDF_TYPE | 98755 |

| TEST_EXECUTION_SUITE_ELEMENT | 98755 |

| TEST_SCRIPT_LINK_PROP | 80000 |

| WORK_ITEM_TEST_CASE_RELATIONSHIP | 79422 |

| TEST_APPROVAL_DESCRIPTOR_APPROVAL | 59870 |

| TEST_APPROVAL_RDF_TYPE | 59870 |

| TEST_APPROVAL | 59870 |

| TEST_SUITE_ELEMENT_LINK_PROP | 59400 |

| RESOURCE_PROPERTY_LINK_PROP | 52116 |

| CONFIGURATION_LINK_PROP | 49922 |

| CONFIGURATION_RDF_TYPE | 41101 |

| SELECTIONS_RDF_TYPE | 28123 |

| SELECTIONS_LINK_PROP | 25602 |

| TEST_SUITE_LINK_PROP | 25600 |

| ARCHITECTURE_RELATIONSHIP | 25348 |

| ARCHITECTURE_RELATIONSHIP_LINK_PROP | 25348 |

| TEST_APPROVAL_DESCRIPTOR_RDF_TYPE | 20040 |

| TEST_APPROVAL_DESCRIPTOR | 20040 |

| TEST_QUALITY_APPROVAL_APPROVAL_DESCRIPTOR | 20040 |

| TEST_PLAN_LINK_PROP | 19924 |

| TEST_SUITE_ELEMENT | 19800 |

| TEST_SUITE_ELEMENT_RDF_TYPE | 19800 |

| CONFIGURATION_NAME | 19342 |

| CONFIGURATION | 19321 |

| CONFIGURATION_ACCEPTED_BY | 19321 |

| CONFIGURATION_SELECTIONS | 15313 |

| SELECTIONS | 15313 |

| RESOURCE_PROPERTY_RDF_TYPE | 15225 |

| RESOURCE_PROPERTY | 15225 |

| ITEM_STRING_PROP | 11885 |

| CONFIGURATION_CONTRIBUTION | 11885 |

| TEST_QUALITY_APPROVAL | 10020 |

| TEST_QUALITY_APPROVAL_RDF_TYPE | 10020 |

| RDF_PROPERTY_RDF_TYPE | 9463 |

| RDF_PROPERTY | 9413 |

| CONFIGURATION_PREVIOUS_BASELINE | 8952 |

| CONFIGURATION_BASELINE_OF_STREAM | 8952 |

| TEST_SUITE_EXECUTION_RECORD_LINK_PROP | 8123 |

| RESOURCE_SHAPE_PROPERTY | 7495 |

| CACHED_CONFIG_ALL_CONFIGS | 6757 |

| TEST_SUITE_RESULT | 6600 |

| TEST_SUITE_RESULT_RDF_TYPE | 6600 |

| CONFIGURATION_ACCEPTS | 4920 |

| CACHED_CONFIG_ALL_SELECTIONS | 4279 |

| TEST_PLAN_RDF_TYPE | 4123 |

| TEST_PLAN | 4123 |

| COMPONENT_RDF_TYPE | 3936 |

| COMPONENT | 3936 |

| COMPONENT_LINK_PROP | 3925 |

| ITEM_RDF_TYPE | 3779 |

| RDF_PROPERTY_LINK_PROP | 3467 |

| CONFIGURATION_BOOLEAN_PROP | 2503 |

| RESOURCE_SHAPE_LINK_PROP | 2263 |

| CONFIGURATION_COMMITTER | 1561 |

| RDF_CLASS_LINK_PROP | 1522 |

| TEST_SUITE_RDF_TYPE | 1380 |

| TEST_SUITE | 1380 |

| TEST_SUITE_EXECUTION_RECORD | 1320 |

| TEST_SUITE_EXECUTION_RECORD_RDF_TYPE | 1320 |

| CONFIGURATION_DESCRIPTION | 1290 |

| RDF_CLASS_RDF_TYPE | 1218 |

| RDF_CLASS | 1154 |

| TEST_ENVIRONMENT_RDF_TYPE | 1040 |

| TEST_ENVIRONMENT | 1040 |

| TEST_ENVIRONMENT_LINK_PROP | 800 |

| WORK_ITEM_PLAN_LINK_PROP | 566 |

| TEST_PLATFORM_COVERAGE_LINK_PROP | 400 |

| RESOURCE_SHAPE_RDF_TYPE | 360 |

| RESOURCE_SHAPE | 360 |

| TEST_PHASE | 330 |

| TEST_PHASE_RDF_TYPE | 330 |

| TEST_BUILD_RECORD | 320 |

| TEST_BUILD_RECORD_RDF_TYPE | 320 |

| TEST_PHASE_LINK_PROP | 292 |

| TEST_CATEGORY_RDF_TYPE | 216 |

| TEST_CATEGORY | 216 |

| TEST_PLATFORM_COVERAGE_RDF_TYPE | 200 |

| TEST_PLATFORM_COVERAGE | 200 |

| TEST_BUILD_RECORD_LINK_PROP | 200 |

| SOURCE_FILE | 159 |

| SOURCE_FILE_RDF_TYPE | 159 |

| WORK_ITEM_CATEGORY_LINK_PROP | 156 |

| WORK_ITEM_PLAN | 154 |

| WORK_ITEM_PLAN_RDF_TYPE | 154 |

| TEST_BUILD_DEFINITION_LINK_PROP | 112 |

| TEST_CATEGORY_LINK_PROP | 108 |

| WORK_ITEM_DELIVERABLE_RDF_TYPE | 93 |

| WORK_ITEM_DELIVERABLE_LINK_PROP | 93 |

| WORK_ITEM_DELIVERABLE | 93 |

| WORK_ITEM_DELIVERABLE_RELEASE_PREDECESSOR | 93 |

| WORK_ITEM_CATEGORY_RDF_TYPE | 92 |

| WORK_ITEM_CATEGORY | 92 |

| PREFIX | 55 |

| TEST_BUILD_DEFINITION_RDF_TYPE | 30 |

| TEST_BUILD_DEFINITION | 30 |

| ACCESS_CONTEXT_RDF_TYPE | 26 |

| LQE_INDEXING_ERROR | 18 |

| LQE_INDEXING_ERROR_LINK_PROP | 18 |

| TRS_DESCRIPTOR | 16 |

| TRS_DESCRIPTOR_RDF_TYPE | 16 |

| TRS_DESCRIPTOR_LINK_PROP | 16 |

| RESOURCE_GROUP_USER_GROUPS | 15 |

| RESOURCE_GROUP_RDF_TYPE | 15 |

| RESOURCE_GROUP | 15 |

| ACCESS_CONTEXT | 13 |

| USER_GROUP | 13 |

| USER_GROUP_RDF_TYPE | 13 |

| USER_GROUP_NAME | 12 |

| CACHED_CONFIG | 12 |

| RESOURCE_GROUP_NAME | 12 |

References

- LQE rs Performance Report

- What's New in IBM Engineering Reporting 7.0.3

- What's new for IBM Engineering 7.0.3 administrators?

- LQE rs 703 Performance Report
