Introduction

This article presents the results of performance testing of the Data Collection Component (DCC) in the 5.0 release of the Rational solution for Collaborative Lifecycle Management (CLM). The DCC is introduced in the 5.0 release to address concerns with the existing ETL solutions. It can improve on the performance of the DM and JAVA ETL by optimizing round trips to a data source and by using concurrent processing to fetch data. The DCC also improves the utilization of server system resources. This article shows the DCC performance benchmark results and compares them with the JAVA ETL and DM ETL.

Disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer's ability to evaluate and integrate them into the customer's operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment.

Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user's job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here.

This testing was done as a way to compare and characterize the differences in performance between different versions of the product. The results shown here should thus be looked at as a comparison of the contrasting performance between different versions, and not as an absolute benchmark of performance.

What our tests measure

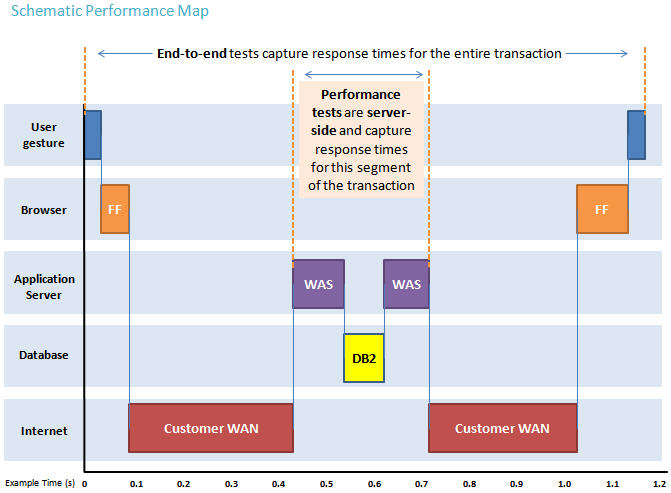

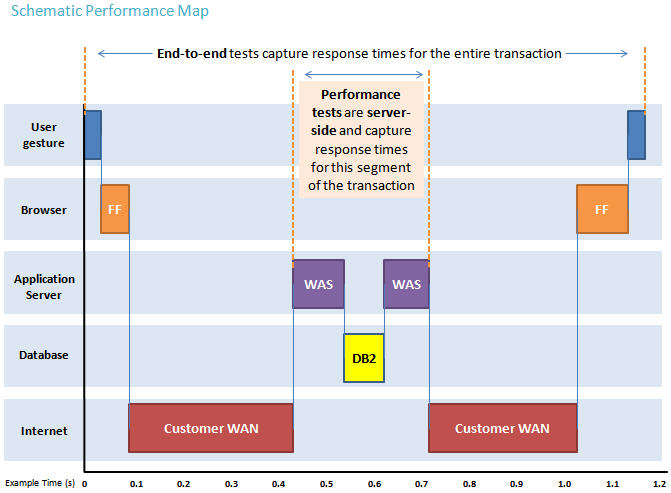

We use predominantly automated tooling such as Rational Performance Tester (RPT) to simulate a workload normally generated by client software such as the Eclipse client or web browsers. All response times listed are those measured by our automated tooling and not a client.

The diagram describes, at a very high level, which aspects of the entire end-to-end experience (human end user to server and back again) our performance tests simulate. The tests described in this article simulate a segment of the end-to-end transaction, as indicated in the middle of the diagram. Performance tests are server-side and capture response times for this segment of the transaction.

Methodology

The testing is based on three CLM repositories with the same data volume, generated with a data population tool. The DCC server, the Data Manager (DM) tool client, and the CLM JAVA ETL component were used to load the data into the data warehouse. The JAVA, DM, and DCC ETL were each run against one CLM repository to compare the performance of DCC with that of the JAVA and DM ETL. The DCC ETL was then run against all three CLM repositories to compare it with the JAVA and DM ETL when multiple repositories are loaded.

Findings

Performance goals

- Compare the performance of the DCC, JAVA, and DM ETL based on one CLM repository.

- Compare the performance of the DCC, JAVA, and DM ETL based on three CLM repositories, which DCC loads concurrently.

Findings

- The DCC has two major improvements:

  - Whereas the JAVA and DM ETL load the applications in sequence, the DCC ETL loads them in parallel. The total DCC duration therefore depends on the slowest application data load, and it is clearly shorter than that of the JAVA/DM ETL loading in sequence.

  - For the data loading of a specific application, the DCC ETL reduces the number of requests sent to the CLM server by merging requests to the same REST service. It finds the REST requests with the same relative URL path, merges them, and sends a single REST request to get the data that the JAVA/DM ETL fetch by sending many REST requests. Thus the DCC ETL also performs better for a specific application.

- Against one CLM repository of performance test data, the DCC ETL performs better than the JAVA and DM ETL. Each specific ETL job (CCM, QM, RM, and Star) runs faster with DCC because DCC merges some REST requests, so the job sends fewer REST requests than it does under the JAVA and DM ETL. The improvement in the total duration for one repository (the time for the ETL to complete the JFS, QM, RRC, RTC, and Star jobs) is even more significant: DCC runs the ETL jobs concurrently, so the total duration is the maximum among the ETL jobs, whereas the JAVA and DM ETL run the jobs in sequence. For detailed comparison data, see Results.

- Against three CLM repositories of performance test data (each with the same data volume and deployed with the same configuration), the DCC ETL shows a major performance improvement because DCC can load data from multiple CLM repositories concurrently. For detailed comparison data, see Results.
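The request merging described in the findings above can be illustrated with a small sketch. It is not the DCC implementation: the URLs, the `merge_requests` helper, and the way query parameters are collected under one path are assumptions made purely for illustration.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlsplit


def merge_requests(urls):
    """Group REST requests by relative URL path (hypothetical helper).

    Returns a dict that maps each path to the list of query-parameter
    dicts that were originally sent as separate requests.
    """
    merged = defaultdict(list)
    for url in urls:
        parts = urlsplit(url)
        merged[parts.path].append(dict(parse_qsl(parts.query)))
    return dict(merged)


# Hypothetical requests that an ETL job might otherwise issue one by one.
etl_requests = [
    "/ccm/rpt/repository/workitem?fields=id",
    "/ccm/rpt/repository/workitem?fields=summary",
    "/ccm/rpt/repository/workitem?fields=state",
    "/ccm/rpt/repository/foundation?fields=projectArea",
]

merged = merge_requests(etl_requests)
print(len(etl_requests), "requests ->", len(merged), "merged requests")
```

In this sketch, four requests collapse into two because three of them share the same relative path; a real implementation would also have to combine the query parameters into one valid request.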

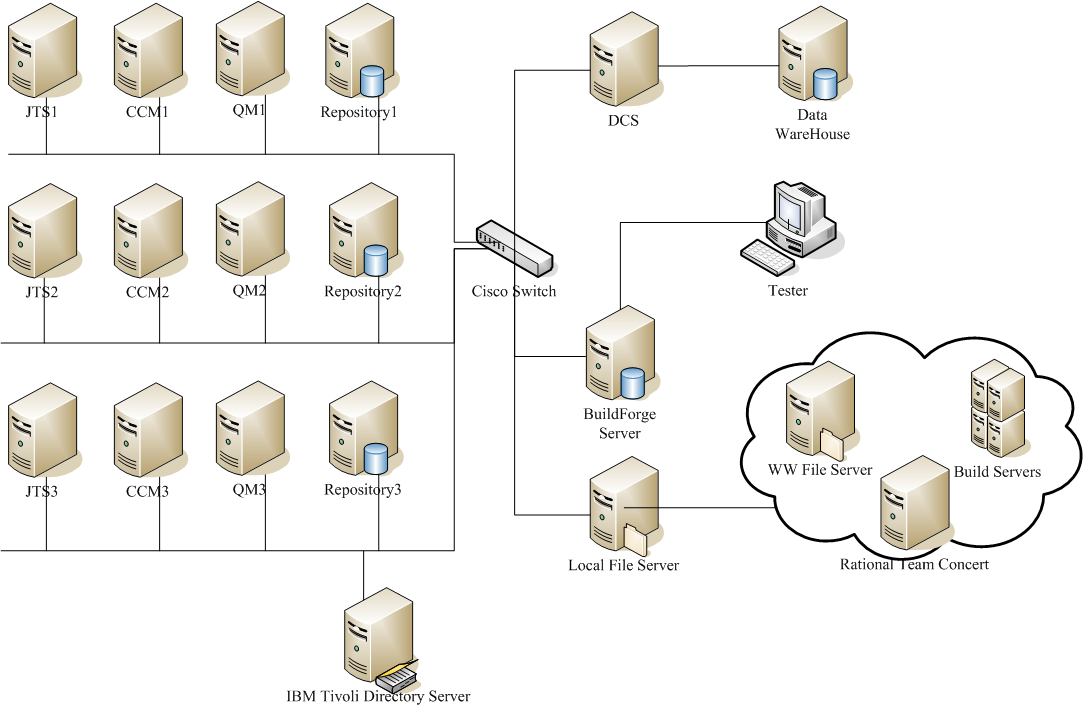

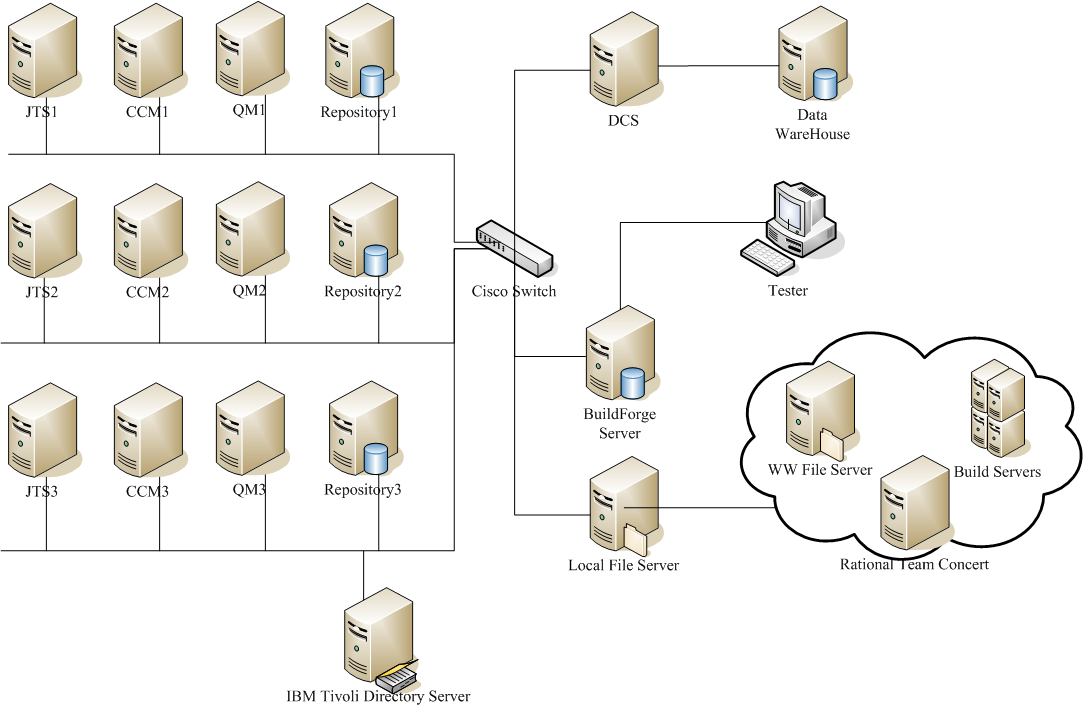

Topology

The topology under test is based on Standard Topology (E1) Enterprise - Distributed / Linux / DB2.

The specifications of machines under test are listed in the table below. Server tuning details are listed in Appendix A.

Test data was generated using automation. The test environment for the latest release was upgraded from the earlier one by using the CLM upgrade process.

IBM Tivoli Directory Server was used for managing user authentication.

| Function | Number of Machines | Machine Type | CPU / Machine | Total # of CPU Cores/Machine | Memory/Machine | Disk | Disk capacity | Network interface | OS and Version |
|---|---|---|---|---|---|---|---|---|---|
| ESX Server | 2 | IBM X3550 M3 7944J2A | 1 x Intel Xeon E5-2640 2.5 GHz (six-core) | 12 vCPU | 48GB | RAID0 SAS x3 300G 10k rpm | 900G | Gigabit Ethernet | ESXi5.1 |
| ESX Server2 | 1 | IBM X3650 M3 | 2 x Intel Xeon x5680 3.3GHz (six-core) | 24 vCPU | 191GB | RAID5 SAS x8 300G 10k rpm | 2T | Gigabit Ethernet | ESXi5.1 |
| ESX Server3 | 1 | IBM X3650 M4 | 2 x Intel Xeon x5680 3.3GHz (12-core) | 48 vCPU | 196GB | RAID5 SAS x8 900G 10k rpm | 5.7T | Gigabit Ethernet | ESXi5.1 |
| JTS/RM Server | 3 | VM on IBM System x3550 M3 | | 4 vCPU | 16GB | | 120G | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.2 |
| CLM Database Server | 3 | VM on IBM System x3650 M3 | | 4 vCPU | 16GB | | 120G | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.2 |
| CCM Server | 3 | VM on IBM System x3550 M3 | | 4 vCPU | 16GB | | 120G | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.2 |
| QM Server | 3 | VM on IBM System x3550 M3 | | 4 vCPU | 16GB | | 120G | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.2 |
| CLM DCC Server | 1 | VM on IBM System x3650 M4 | | 16 vCPU | 16GB | | 120G | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.2 |
| Data Warehouse Server | 1 | VM on IBM System x3650 M3 | | 4 vCPU | 16GB | | 120G | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.2 |

Data volume and shape

The data volume is listed in Appendix B.

Network connectivity

All server machines and test clients are located on the same subnet. The LAN has 1000 Mbps of maximum bandwidth and less than 0.3 ms latency in ping.

Results

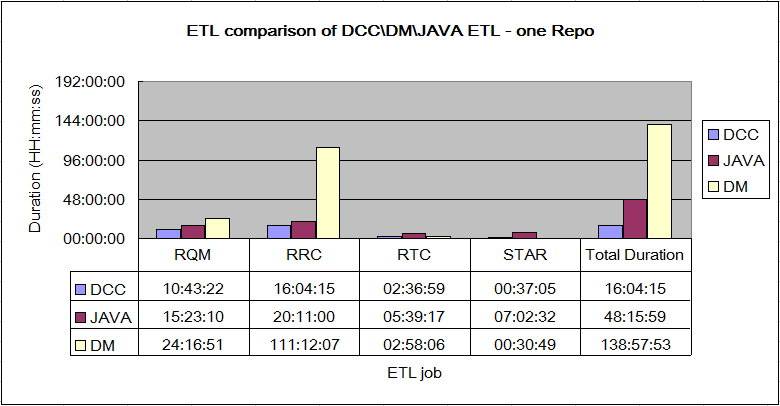

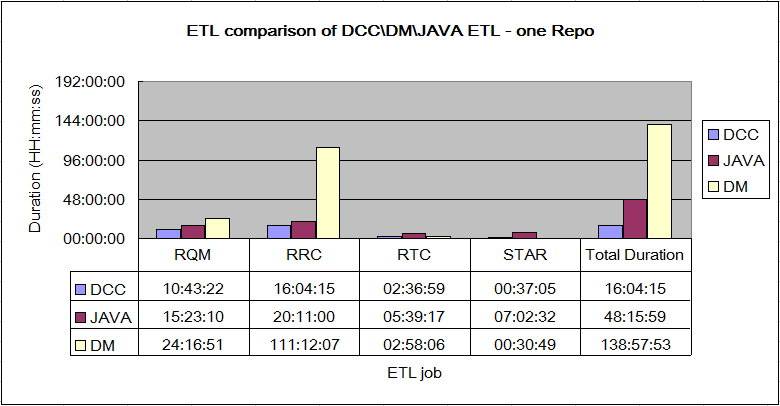

ETL Comparison of DCC/JAVA/DM based on one CLM repository

This part determines the performance gain of the DCC ETL over the JAVA ETL and DM ETL based on one CLM repository. Before CLM 5.0, CLM provided the JAVA ETL, which loads the CLM data one by one from the applications registered on the JTS server. A typical CLM deployment has one JTS with CCM, QM, and RM applications registered on it. The JAVA ETL loads the data in sequence in the following order: JTS, CCM, QM, RM, and Star. The total duration is the sum of the ETL durations of all applications. The same holds for the DM ETL. DCC can load the application data in parallel, so its total duration is that of the slowest application-specific ETL.
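The difference between the two models can be written down in a few lines. The sketch below uses hypothetical per-job durations (they are not measured values from this test) and assumes the server has enough resources to run all jobs at the same time.

```python
def sequential_duration(job_hours):
    """Total duration when jobs run one after another (JAVA/DM ETL model)."""
    return sum(job_hours.values())


def concurrent_duration(job_hours):
    """Total duration when all jobs run in parallel (DCC model):
    the run ends when the slowest job ends."""
    return max(job_hours.values())


# Hypothetical per-job durations in hours, for illustration only.
job_hours = {"JTS": 1.0, "CCM": 6.0, "QM": 10.0, "RM": 16.0, "Star": 2.0}

print("sequential:", sequential_duration(job_hours), "hours")
print("concurrent:", concurrent_duration(job_hours), "hours")
```

With these made-up numbers, the sequential model adds up every job, while the concurrent model is bounded by the RM job alone.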

The chart shows the performance gain of DCC compared with the JAVA and DM ETL based on one CLM repository. The DCC has two major improvements:

1. Compared with the JAVA ETL on the performance test data, DCC reduced the total duration from 48 hours to 16 hours. The duration for specific applications also improved significantly: by about 30% for QM loading, 20% for RM loading, 60% for CCM loading, and 90% for the Star job.

2. Compared with the DM ETL on the performance test data, DCC reduced the total duration from 139 hours to 16 hours. The major improvements are in the RRC ETL and RQM ETL: RQM loading improved by about 60% and RRC loading by about 85%.
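As a quick check of the totals reported above, the relative reduction in duration can be computed directly. The helper name `percent_reduction` is introduced here for illustration; the hour values are the ones stated in this section.

```python
def percent_reduction(before_hours, after_hours):
    """Relative reduction in duration, as a percentage of the original."""
    return (before_hours - after_hours) / before_hours * 100.0


java_vs_dcc = percent_reduction(48, 16)   # JAVA ETL -> DCC, one repository
dm_vs_dcc = percent_reduction(139, 16)    # DM ETL -> DCC, one repository

print(f"JAVA -> DCC: {java_vs_dcc:.1f}% shorter")
print(f"DM -> DCC: {dm_vs_dcc:.1f}% shorter")
```

This gives a reduction of roughly two thirds against the JAVA ETL and close to nine tenths against the DM ETL for the total duration on one repository.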

Row 1. DCC: The DCC loads the CLM application data concurrently based on one CLM repository.

Row 2. JAVA: The JAVA ETL loads the CLM application data sequentially based on one CLM repository.

Row 3. DM: The DM ETL loads the CLM application data sequentially based on one CLM repository.

- Precondition: The CCM ETL has one build named Workitembaseline, which records the latest information of each work item by getting its latest work item history record. When the Workitembaseline ETL build runs, it gets the latest information (status, state, priority, severity, and so on) by requesting the latest work item history with the query condition that the change time of the history record is earlier than the ETL build start time. If no ETL is scheduled on a given day, the latest information of each work item for that day is not loaded into the workitembaseline table of the data warehouse. The JAVA ETL backfills the latest work item information for the days on which no ETL ran. For example, if no JAVA ETL runs on Jan 1st, the workitembaseline table does not receive the latest work item information for that day; the next JAVA ETL run on Jan 2nd inserts the missing Jan 1st data. The performance team always uses the same data set for ETL performance tests so that the results are comparable. Because of this backfill, a JAVA full ETL load picks up more workitembaseline data as time passes: the baseline data in 4.0.6 is slightly larger than in 4.0.5, which in turn is slightly larger than in 4.0.4, and so on. To keep the performance data comparable from release to release, we insert one pseudo record so that the ETL inserts only a single day's baseline. In this way, the Workitembaseline ETL build inserts the same number of records in each test run.

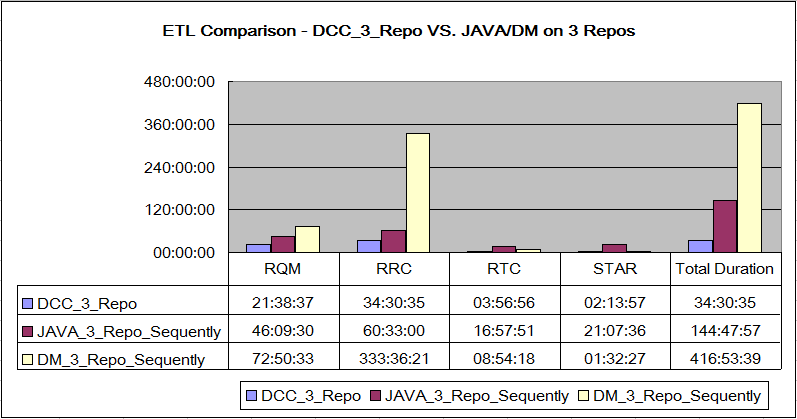

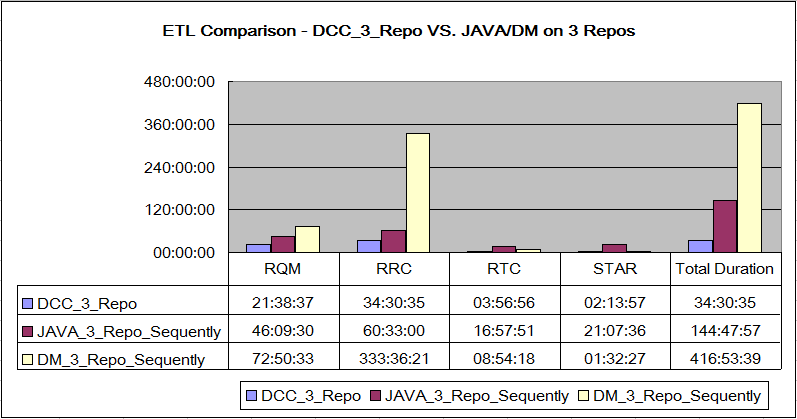

ETL Comparison of DCC/JAVA/DM based on three CLM repositories

This part determines the performance gain of the DCC ETL over the JAVA ETL and DM ETL based on multiple CLM repositories. In theory, DCC saves more ETL time as more CLM repositories are loaded, because DCC can load all of the CLM applications concurrently.

In this test scenario, DCC loads the data of 3 CLM repositories (one CCM, QM, and RM application registered per CLM repository) concurrently. The DCC has two major improvements:

1. Compared with the JAVA ETL on 3 CLM repositories, DCC reduced the total duration from 144 hours to 34 hours. The duration for specific applications also improved significantly: by about 30% for QM loading, 20% for RM loading, 60% for CCM loading, and 90% for the Star job.

2. Compared with the DM ETL on 3 CLM repositories, DCC reduced the total duration from 416 hours to 16 hours (a 96% improvement). The duration for specific applications also improved significantly: by about 70% for QM loading, 90% for RM loading, and 55% for CCM loading.
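Because the JAVA and DM ETL load repositories one after another, their three-repository totals should be close to three times the single-repository totals. The following sketch checks that against the hours reported in this article; the function names are introduced here for illustration only.

```python
def sequential_total(single_repo_hours, repo_count):
    """Estimated duration when repositories are loaded one after another."""
    return single_repo_hours * repo_count


def percent_reduction(before_hours, after_hours):
    """Relative reduction in duration, as a percentage of the original."""
    return (before_hours - after_hours) / before_hours * 100.0


# Durations in hours, as reported in this article.
single_repo = {"JAVA": 48, "DM": 139}
three_repos = {"JAVA": 144, "DM": 416}

for etl, hours in single_repo.items():
    estimate = sequential_total(hours, 3)
    print(f"{etl}: estimated {estimate} h, reported {three_repos[etl]} h")

# The 96% quoted for DM corresponds to the reduction from 416 to 16 hours.
dm_reduction = percent_reduction(416, 16)
print(f"DM -> DCC: {dm_reduction:.0f}% shorter")
```

The estimates land within one hour of the reported sequential totals, which is consistent with the sequential loading model described for the JAVA and DM ETL.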

Row 1. DCC_3_Repo: The DCC loads the data of 3 CLM repositories concurrently.

Row 2. JAVA_3_Repo_Sequently: The JAVA ETL loads 3 CLM repositories in sequence. Within one CLM repository, the JAVA ETL loads the applications in sequence as well.

Row 3. DM_3_Repo_Sequently: The DM ETL loads 3 CLM repositories in sequence. Within one CLM repository, the DM ETL loads the applications in sequence as well.

Appendix A - Server Tuning Details

| Product | Version | Highlights for configurations under test |
|---|---|---|
| DCC | DCC 5.0 | DCC Data Collection Properties: Data Collection Thread Size = 60; OS max number of open files set as 65536; OS max number of processes set as 65536 |
| Data warehouse | DCC 5.0 / DB2 10.1.1 | Transaction log setting of data warehouse: transaction log size changed to 40960; transaction log primary file number to 50; transaction log secondary file number to 100. Commands: db2 update db cfg using LOGFILSIZ 40960; db2 update db cfg using LOGPRIMARY 50; db2 update db cfg using LOGSECOND 100 |
| IBM WebSphere Application Server | 8.5.0.1 | JVM settings: GC policy and arguments, max and init heap sizes: -verbose:gc -XX:+PrintGCDetails -Xverbosegclog:gc.log -Xgcpolicy:gencon -Xmx8g -Xms8g -Xmn1g -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 -XX:MaxDirectMemorySize=1g |
| DB2 | DB2 10.1.1 | Transaction log setting of data warehouse: transaction log size changed to 40960. Command: db2 update db cfg using LOGFILSIZ 40960 |
| LDAP server | IBM Tivoli Directory Server 6.3 | |
| License server | | Hosted locally by JTS server |
| Network | | Shared subnet within test lab |
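To put the data warehouse transaction log settings into perspective, the sketch below estimates the maximum log space they allow. It assumes that the three values (LOGFILSIZ, LOGPRIMARY, LOGSECOND) apply to the same database and relies on DB2 expressing LOGFILSIZ in 4 KB pages; the function name is hypothetical.

```python
PAGE_BYTES = 4096  # DB2 expresses LOGFILSIZ in 4 KB pages


def log_space_bytes(logfilsiz_pages, logprimary, logsecond):
    """Upper bound on active log space: size of one log file multiplied
    by the number of primary plus secondary log files."""
    return logfilsiz_pages * PAGE_BYTES * (logprimary + logsecond)


# Values from the data warehouse row of the tuning table.
total = log_space_bytes(40960, 50, 100)
print(f"one log file: {40960 * PAGE_BYTES / 2**20:.0f} MiB")
print(f"maximum log space: {total / 2**30:.1f} GiB")
```

Under these assumptions each log file is 160 MiB, so the disk that holds the transaction logs needs room for roughly 23 GiB when all secondary log files are in use.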

Appendix B - Data Volume and Shape

| Application | Record type | Record Per Repository | Total Count for 3 repositories |
|---|---|---|---|
| CCM | APT_ProjectCapacity | 1 | 3 |
| | Project | 1 | 3 |
| | APT_TeamCapacity | 0 | 0 |
| | Build | 0 | 0 |
| | Build Result | 0 | 0 |
| | Build Unit Test Result | 0 | 0 |
| | Build Unit Test Events | 0 | 0 |
| | Complex CustomAttribute | 0 | 0 |
| | Custom Attribute | 0 | 0 |
| | File Classification | 3 | 9 |
| | First Stream Classification | 3 | 9 |
| | History Custom Attribute | 0 | 0 |
| | SCM Component | 2 | 6 |
| | SCM WorkSpace | 2 | 6 |
| | WorkItem | 100026 | 300078 |
| | WorkItem Approval | 100000 | 300000 |
| | WorkItem Dimension Approval Description | 100000 | 300000 |
| | WorkItem Dimension | 3 | 9 |
| | WorkItem Dimension Approval Type | 3 | 9 |
| | WorkItem Dimension Category | 2 | 6 |
| | WorkItem Dimension Deliverable | 0 | 0 |
| | WorkItem Dimension Enumeration | 34 | 102 |
| | WorkItem Dimension Resolution | 18 | 54 |
| | Dimension | 68 | 204 |
| | WorkItem Dimension Type | 8 | 24 |
| | WorkItem Hierarchy | 0 | 0 |
| | WorkItem History | 282369 | 847107 |
| | WorkItem History Complex Custom Attribute | 0 | 0 |
| | WorkItem Link | 101014 | 303042 |
| | WorkItem Type Mapping | 4 | 12 |
| RM | CrossAppLink | 136 | 408 |
| | Custom Attribute | 2173181 | 6519543 |
| | Requirement | 634432 | 1903296 |
| | Collection Requirement Lookup | 0 | 0 |
| | Module Requirement Lookup | 306000 | 918000 |
| | Implemented BY | 0 | 0 |
| | Request Affected | 0 | 0 |
| | Request Tracking | 0 | 0 |
| | REQUICOL_TESTPLAN_LOOKUP | 0 | 0 |
| | REQUIREMENT_TESTCASE_LOOKUP | 0 | 0 |
| | REQUIREMENT_SCRIPTSTEP_LOOKUP | 0 | 0 |
| | REQUIREMENT_HIERARCHY | 0 | 0 |
| | REQUIREMENT_EXTERNAL_LINK | 0 | 0 |
| | RequirementsHierarchyParent | 31610 | 94830 |
| | Attribute Define | 10 | 30 |
| | Requirement Link Type | 176 | 528 |
| | Requirement Type | 203 | 609 |

| QM record type | | Record Per Repository | Total Count for 3 repositories |
|---|---|---|---|
| TestScript | | 0 | 0 |
| BuildRecord | | 20000 | 60000 |
| Category | | 520 | 1560 |
| CategoryType | | 120 | 360 |
| Current log of Test Suite | | 6000 | 18000 |
| EWICustomAttribute | | 0 | 0 |
| EWIRelaLookup | | | |
| | CONFIG_EXECUTIONWORKITM_LOOKUP | 0 | 0 |
| | EXECWORKITEM_REQUEST_LOOKUP | 0 | 0 |
| | EXECWORKITEM_ITERATION_LOOKUP | 180000 | 540000 |
| | EXECWORKITEM_CATEGORY_LOOKUP | 0 | 0 |
| ExecResRelaLookup | | | |
| | EXECRES_EXECWKITEM_LOOKUP | 540000 | 1620000 |
| | EXECRES_REQUEST_LOOKUP | 60000 | 180000 |
| | EXECRESULT_CATEGORY_LOOKUP | 0 | 0 |
| | EXECUTION_STEP_RESULT | 0 | 0 |
| ExecStepResRequestLookup | | 0 | 0 |
| ExecutionResult | | 540000 | 1620000 |
| ExecutionStepResult | | 0 | 0 |
| ExecutionWorkItem | | 180000 | 540000 |
| Job | | 0 | 0 |
| JobResult | | 0 | 0 |
| KeyWord | | 0 | 0 |
| KeyWordTestScriptLookup | | 0 | 0 |
| LabRequestChangeState | | 0 | 0 |
| LabRequest | | 2520 | 7560 |
| LabResource | | 24000 | 72000 |
| Objective | | 0 | 0 |
| Priority | | 4 | 0 |
| RemoteScript | | 0 | 0 |
| Requirement | | 0 | 0 |
| Reservation | | 32000 | 96000 |
| ReservationRequestLookup | | 30 | 90 |
| ResourceGroup | | 0 | 0 |
| ScriptStep_Rela_Lookup | | 240000 | 720000 |
| State | | 240 | 720 |
| StateGroup | | 60 | 180 |
| TestCase | | 60000 | 60000 |
| TestCaseCustomAttribute | | 0 | 0 |
| TestCaseRelaLookup | | | |
| | TESTCASE_RemoteTESTSCRIPT_LOOKUP | 0 | 0 |
| | TESTCASE_TESTSCRIPT_LOOKUP | 60000 | 180000 |
| | TESTCASE_CATEGORY_LOOKUP | 161060 | 483180 |
| | REQUIREMENT_TESTCASE_LOOKUP | 60000 | 180000 |
| | REQUEST_TESTCASE_LOOKUP | 60000 | 180000 |
| | TestCase RelatedRequest Lookup | 0 | 0 |
| TestEnvironment | | 4000 | 12000 |
| TestPhase | | 1200 | 3600 |
| TestPlan | | 110 | 330 |
| TestPlanObjectiveStatus | | 0 | 0 |
| TestPlanRelaLookup | | | |
| | REQUIREMENT_TESTPLAN_LOOKUP | 0 | 0 |
| | TESTSUITE_TESTPLAN_LOOKUP | 6000 | 18000 |
| | TESTPLAN_CATEGORY_LOOKUP | 0 | 2 |
| | TESTPLAN_TESTCASE_LOOKUP | 60000 | 180000 |
| | TESTPLAN_OBJECTIVE_LOOKUP | 0 | 0 |
| | REQUIREMENT COLLECTION_TESTPLAN_LOOKUP | 320 | 9600 |
| | TESTPLAN_TESTPLAN_HIERARCHY | 0 | 0 |
| | TESTPLAN_ITERATION_LOOKUP | 1200 | 3600 |
| | REQUEST_TESTPLAN_LOOKUP | 0 | 0 |
| TestScript | | 60000 | 180000 |
| TestScriptRelaLookup _ Manual | | | |
| | TESTSCRIPT_CATEGORY_LOOKUP | 0 | 0 |
| | REQUEST_TESTSCRIPT_LOOKUP | 0 | 0 |
| TestScriptRelaLookup _ Remote | | 0 | 0 |
| TestScriptStep | | 240000 | 720000 |
| TestSuite | | 6000 | 18000 |
| TestSuite_CusAtt | | 0 | 0 |
| TestSuiteElement | | 90000 | 270000 |
| TestSuiteExecutionRecord | | 6000 | 18000 |
| TestSuiteLog | | 30000 | 90000 |
| TestSuiteRelaLookup | | | |
| | TESTSUITE_CATEGORY_LOOKUP | 15950 | 47850 |
| | REQUEST_TESTSUITE_LOOKUP | 0 | 0 |
| TestSuLogRelaLookup | | | |
| | TESTSUITE_TESTSUITELOG_LOOKUP | 30000 | 90000 |
| | TESTSUITELOG_EXECRESULT_LOOKUP | 213030 | 639090 |
| | TESTSUITELOG_CATEGORY_LOOKUP | 0 | 0 |
| TestSuiteExecutionRecord_CusAtt | | 6000 | 18000 |
| TSERRelaLookup | | 0 | 0 |
| | TSTSUITEXECREC_CATEGORY_LOOKUP | 0 | 0 |
| Total | | 1203023 | 3609069 |

N/A: Not applicable.

For more information

About the authors

PengPengWang

Questions and comments:

- What other performance information would you like to see here?

- Do you have performance scenarios to share?

- Do you have scenarios that are not addressed in documentation?

- Where are you having problems in performance?

