Introduction

Reliability testing is about exercising the CLM applications so that failures are discovered and removed before the system is deployed. There are many different combinations of alternate pathways through the complex CLM application; this test scenario exercises the most likely use cases (described in detail below). The use cases are put under constant load for a seven-day period to validate that the CLM application provides the expected level of service, without any downtime or degradation in overall system performance. This report is a sample of the results from a recent CLM 405 Reliability test.

Disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer's ability to evaluate and integrate them into the customer's operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment.

Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user's job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here.

This testing was done to compare and characterize the differences in performance between versions of the product. The results shown here should therefore be read as a comparison of performance between versions, not as an absolute benchmark of performance.

What our tests measure

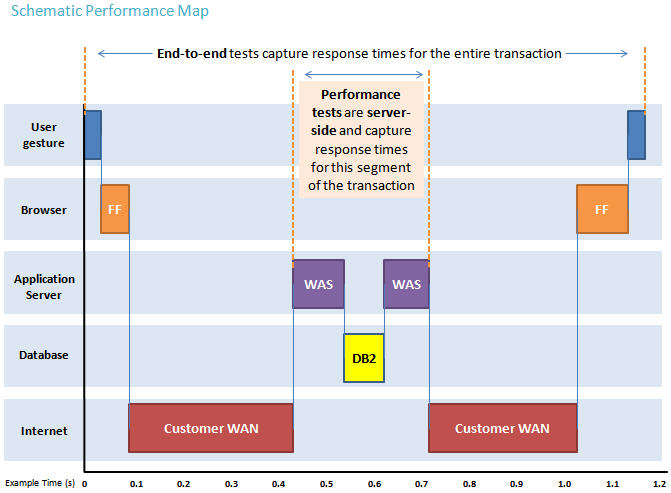

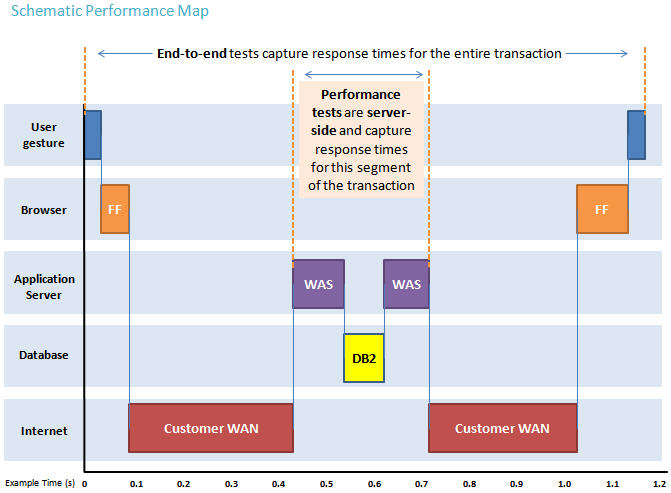

We predominantly use automated tooling such as Rational Performance Tester (RPT) to simulate the workload normally generated by client software such as the Eclipse client or web browsers. All response times listed are those measured by our automated tooling, not by a client.

The diagram describes, at a very high level, which aspects of the entire end-to-end experience (human end-user to server and back again) our performance tests simulate. The tests described in this article simulate the segment of the end-to-end transaction indicated in the middle of the diagram. Performance tests are server-side and capture response times for this segment of the transaction.

Findings

Performance goals

Primary Goals - Detection

- Test the CLM software performance under given conditions, and monitor for any degradation in performance over the course of the 7-day run.

- Monitor for increases in the overall page or page element averages.

- Report on any specific page whose response time increases over time.

Secondary Goals - Analysis

- To discover the main cause of any failure.

- To find and correlate the structure of repeating failures.

Findings

- Completion of the 7-day run without any crashes or noticeable degradation in performance.

- Several page transactions showed visibly increasing response times over the seven-day period. These specific transactions will be analyzed further.

- Final load results will be further analyzed to determine if the actual load fits within our anticipated scalability needs.

Topology

The topology under test is based on Standard Topology (E1) Enterprise - Distributed / Linux / DB2.

The specifications of the machines under test are listed in the table below. Server tuning details are listed in Appendix A.

| Function | Number of Machines | Machine Type | CPU / Machine | Total # of CPU Cores/Machine | Memory/Machine | Disk | Disk capacity | Network interface | OS and Version |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| IBM HTTP Server and WebSphere Plugin | 1 | VMWare Image | VMWare Hypervisor, Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz | v4 | v8GB | VMFS | v30GB | Gigabit Ethernet | Red Hat Linux Server 2.6.32-358.6.1.el6.x86_64, WebSphere Application Server 8.5.5.0 64-bit |
| JTS Server | 1 | VMWare Image | VMWare Hypervisor, Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz | v4 | v16GB | VMFS | v100GB | Gigabit Ethernet | Red Hat Linux Server 2.6.32-358.6.1.el6.x86_64, WebSphere Application Server 8.5.5.0 64-bit |
| CCM Server | 1 | VMWare Image | VMWare Hypervisor, Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz | v4 | v8GB | VMFS | v50GB | Gigabit Ethernet | Red Hat Linux Server 2.6.32-358.6.1.el6.x86_64, WebSphere Application Server 8.5.5.0 64-bit |
| QM Server | 1 | VMWare Image | VMWare Hypervisor, Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz | v4 | v8GB | VMFS | v50GB | Gigabit Ethernet | Red Hat Linux Server 2.6.32-358.6.1.el6.x86_64, WebSphere Application Server 8.5.5.0 64-bit |
| RM Server | 1 | VMWare Image | VMWare Hypervisor, Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz | v4 | v8GB | VMFS | v50GB | Gigabit Ethernet | Red Hat Linux Server 2.6.32-358.6.1.el6.x86_64, WebSphere Application Server 8.5.5.0 64-bit |
| DB2 Server | 1 | VMWare Image | VMWare Hypervisor, Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz | v4 | v16GB | VMFS | v100GB | Gigabit Ethernet | Red Hat Linux Server 2.6.32-358.6.1.el6.x86_64, DB2 Enterprise 10.1.0.2 |
| LDAP Server | 1 | ThinkCentre M57p | 1 x Intel Core 2 Duo E6750 2.66GHz (dual-core) | 2 | 2GB | SATA Disk | 250GB | Gigabit Ethernet | Windows 2003 Enterprise, Tivoli Directory Server 6.1 |
| RPT Workbench | 1 | VMWare Image | VMWare Hypervisor, Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz | v4 | v16GB | VMFS | v100GB | Gigabit Ethernet | Red Hat Linux Server 2.6.32-358.6.1.el6.x86_64, Rational Performance Tester 8.3.0.3 64-bit |
| RPT Agent | 6 | VMWare Image | VMWare Hypervisor, Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz | v4 | v8GB | VMFS | v100GB | Gigabit Ethernet | Red Hat Linux Server 2.6.32-358.6.1.el6.x86_64, Rational Performance Tester Agent 8.3.0.3 64-bit |

Network connectivity

All CLM server machines and RPT test driver machines are located on the same subnet. The LAN has 1000 Mbps of maximum bandwidth, and the average latency between the IHS server and all distributed Application Servers is 1.12ms.

The LDAP server is also on the same subnet, with a latency of less than 0.8ms to the CLM servers.

Data volume and shape

At the beginning of the test, the repository projects were populated to the following sizes:

- RM/DNG - 10 projects populated with 100K artifacts

- CCM - 10 projects populated with 100K artifacts

- QM - 10 projects populated with 100K artifacts

- Admin - 10 CLM projects with artifacts linked across all projects

Methodology

Rational Performance Tester was used to simulate the CLM workload created using the web client. Users are distributed across a set of user groups, and each user group represents a CLM product area, for example CCM, QM, or RM. Each user group performs a random set of use cases from a set of available use cases. A Rational Performance Tester script is created for each use case. The scripts are organized by pages, and each page represents a single user action.

Based on real customer use, the CLM test scenario provides a ratio of 70% reads to 30% writes. The users completed use cases at a rate of 6.25 pages per second over a seven-day period. Each CLM performance test runs for seven days with no decline in usage and no other downtime.
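As a rough worked example of the volume this implies, the arithmetic below multiplies the stated page rate by the length of the run and splits the total using the stated 70/30 ratio. It assumes the 6.25 pages/sec rate held for the full seven days (as the test design states) and that the read/write ratio applies to pages; the totals are derived estimates, not measured results.

```python
# Back-of-the-envelope load totals for the 7-day reliability run.
# Inputs come from the Methodology section; the totals are derived
# estimates that assume the stated page rate held for the entire run.
SECONDS_PER_DAY = 24 * 60 * 60


def total_pages(pages_per_second: float, days: int) -> float:
    """Pages executed if the rate is sustained for `days` full days."""
    return pages_per_second * SECONDS_PER_DAY * days


def split_reads_writes(total: float, read_fraction: float) -> tuple[float, float]:
    """Split a page total into (reads, writes) by the read fraction."""
    return total * read_fraction, total * (1.0 - read_fraction)


if __name__ == "__main__":
    total = total_pages(6.25, 7)  # 6.25 pages/sec for seven days
    reads, writes = split_reads_writes(total, 0.70)  # assumed: ratio applies to pages
    print(f"total pages: {total:,.0f}")
    print(f"reads:       {reads:,.0f}")
    print(f"writes:      {writes:,.0f}")
```

Under these assumptions the run executes 3,780,000 pages, about 2,646,000 reads and 1,134,000 writes.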

The total CLM workload distribution is 45% CCM, 30% QM, 10% Integrations, 5% DNG Web, and 10% RM. Use cases with higher weights are run more frequently within their user group.

CLM test cases and weighted distribution

| Use case | Description | User Group | Weight within User Group loop |
| --- | --- | --- | --- |
| RTC_CreateDefect | CCM user logs in to their project dashboard, creates a defect, then logs out. | CCM | 5 |
| RTC_QueryAndEditDefect | CCM user logs in to their project dashboard, queries for a defect, edits the defect, then logs out. | CCM | 10 |
| RTC_QueryAndViewDefect | CCM user logs in to their project dashboard, queries for a defect, views the defect, then logs out. | CCM | 60 |
| QM_CreateTestCase | QM user logs in to their project dashboard and creates a test case. | QM | 6 |
| QM_CreateTestPlan | QM user logs in to their project dashboard and creates a test plan. | QM | 2 |
| QM_BrowseTERs | QM user logs in to their project dashboard, then browses Test Case Execution Records. | QM | 10 |
| QM_editTestScript | QM user logs in to their project dashboard, then opens and edits a test script. | QM | 18 |
| QM_editTestPlan | QM user logs in to their project dashboard, then opens and edits a test plan. | QM | 5 |
| QM_editTestCase | QM user logs in to their project dashboard, then opens and edits a test case. | QM | 18 |
| QM_createTestScript | QM user logs in to their project dashboard and creates a test script. | QM | 6 |
| QM_executeTestSteps4 | QM user logs in to their project dashboard, searches for a TER, executes a 4-step TER, then shows the results. | QM | 60 |
| QPlan to Req Collection | RQM user logs in, creates a new test plan, creates a new quality task and links it to an RTC task, then logs out. | Int | 1 |
| TestPlanLinks | QM user logs in, creates a new test plan, links the test plan to an RTC plan, then logs out. | Int | 1 |
| Test case links Dev item | QM user logs in, creates a new test case and links it to an RTC work item, then logs out. | Int | 5 |
| TER Links | QM user logs in to their project, creates and runs a new test case, creates an RTC defect linked to the TER, then logs out. | Int | 5 |
| Test script Links | QM user logs in to their project, creates a new test script and links it to an RTC work item, then logs out. | Int | 5 |
| Show RM Traceability viewlet | QM user logs in to their project dashboard, adds a remote RM traceability viewlet, saves the dashboard, then logs out. | Int | 1 |
| Test Case links | QM user logs in to their project, creates a new test case and links it to an RM requirement artifact, then logs out. | Int | 1 |
| SaveModule | DNG Web user logs in, then opens, edits, and saves a large module. | DNG Web | 3 |
| SwitchModule | DNG Web user logs in, opens a random module, then switches view and opens another module. | DNG Web | 6 |
| AddModuleComment | DNG Web user opens a large module, inserts a comment, and saves the module. | DNG Web | 8 |
| OpenLargeModule | DNG Web user logs in and opens a large module. | DNG Web | 8 |
| DisplayModuleHistory | DNG Web user logs in to their project, opens a module, then selects display module history. | DNG Web | 4 |
| CreateArtifactLargeModule | DNG user logs in to their project, edits a large module by adding an artifact to it, then saves the module. | DNG Web | 4 |
| OpenAndScrollLargeModule | DNG Web user logs in, opens a large module, then scrolls through all 1000 artifacts. | DNG Web | 8 |
| ReviewLargeModule | DNG Web user logs in, opens a large module, then creates and executes a review cycle on the module. | DNG Web | 1 |
| EditModuleArtifact | DNG Web user logs in, opens a large module, edits the title of one of the artifacts in the module, saves the edits, then saves the module. | DNG Web | 4 |
| ManageFolders | RM user logs in, creates a folder, moves it to a new location, then deletes the folder. | RM | 7 |
| ViewCollections | RM user logs in to their project, then views collections that contain 100 artifacts from the collections folders. | RM | 12 |
| CreateLinks | RM user logs in to their project, opens an artifact, and creates a link to another RM artifact. | RM | 10 |
| CreateOSLC link | RM user logs in to their project, creates a new artifact, and links it as validated by a newly created QM test case. | RM | 6 |
| NestedLoop | RM user logs in to their project, then opens three levels of nested folders. | RM | 7 |
| FilterQuery | RM user logs in to their project dashboard, then runs a filtered query. | RM | 6 |
| QuerybyID | RM user logs in to their project and runs a query by ID. | RM | 4 |
| QuerybyString | RM user logs in to their project, then runs a query by specifying a string. | RM | 8 |
| HoverCollection | RM user logs in to their project, opens the collection folder, then hovers over one of the collections until the rich hover appears. | RM | 6 |
| CreateArtifact | RM user logs in to their project, creates an artifact, edits it by inserting text and images, then saves the artifact. | RM | 3 |
| CreateMultiValueArt | RM user logs in to their project, creates a multi-value artifact, then adds multi-value attributes. | RM | 3 |
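To see how the group percentages and the per-group weights in the table above combine, the sketch below estimates each use case's share of all use-case iterations. It rests on two assumptions the report does not state outright: that the workload percentages are shares of iterations, and that a group picks a use case with probability weight / (sum of weights in that group). Because use cases contain different numbers of pages, these are not shares of page hits.

```python
# Approximate share of all use-case iterations for each use case.
# Assumptions (not stated in the report): the workload percentages are
# shares of iterations, and each user group picks a use case with
# probability weight / (sum of weights in that group).
GROUP_SHARE = {"CCM": 0.45, "QM": 0.30, "Int": 0.10, "DNG Web": 0.05, "RM": 0.10}

WEIGHTS = {
    "CCM": {"RTC_CreateDefect": 5, "RTC_QueryAndEditDefect": 10,
            "RTC_QueryAndViewDefect": 60},
    "QM": {"QM_CreateTestCase": 6, "QM_CreateTestPlan": 2, "QM_BrowseTERs": 10,
           "QM_editTestScript": 18, "QM_editTestPlan": 5, "QM_editTestCase": 18,
           "QM_createTestScript": 6, "QM_executeTestSteps4": 60},
    "Int": {"QPlan to Req Collection": 1, "TestPlanLinks": 1,
            "Test case links Dev item": 5, "TER Links": 5,
            "Test script Links": 5, "Show RM Traceability viewlet": 1,
            "Test Case links": 1},
    "DNG Web": {"SaveModule": 3, "SwitchModule": 6, "AddModuleComment": 8,
                "OpenLargeModule": 8, "DisplayModuleHistory": 4,
                "CreateArtifactLargeModule": 4, "OpenAndScrollLargeModule": 8,
                "ReviewLargeModule": 1, "EditModuleArtifact": 4},
    "RM": {"ManageFolders": 7, "ViewCollections": 12, "CreateLinks": 10,
           "CreateOSLC link": 6, "NestedLoop": 7, "FilterQuery": 6,
           "QuerybyID": 4, "QuerybyString": 8, "HoverCollection": 6,
           "CreateArtifact": 3, "CreateMultiValueArt": 3},
}


def overall_share(group_share, weights):
    """Map each use case to its approximate fraction of all iterations."""
    shares = {}
    for group, cases in weights.items():
        group_total = sum(cases.values())
        for use_case, weight in cases.items():
            shares[use_case] = group_share[group] * weight / group_total
    return shares


if __name__ == "__main__":
    shares = overall_share(GROUP_SHARE, WEIGHTS)
    ranked = sorted(shares.items(), key=lambda kv: kv[1], reverse=True)
    for use_case, share in ranked[:5]:
        print(f"{use_case:28s} {share:6.1%}")
```

Under these assumptions, RTC_QueryAndViewDefect alone accounts for about 36% of iterations (0.45 x 60/75) and QM_executeTestSteps4 for about 14.4% (0.30 x 60/125).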

Results

IHS Thread Analysis

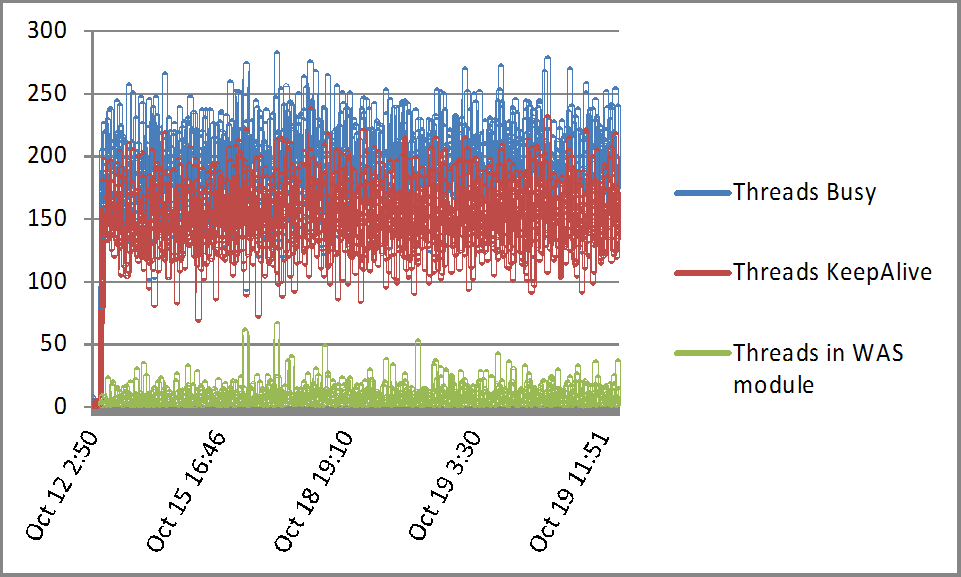

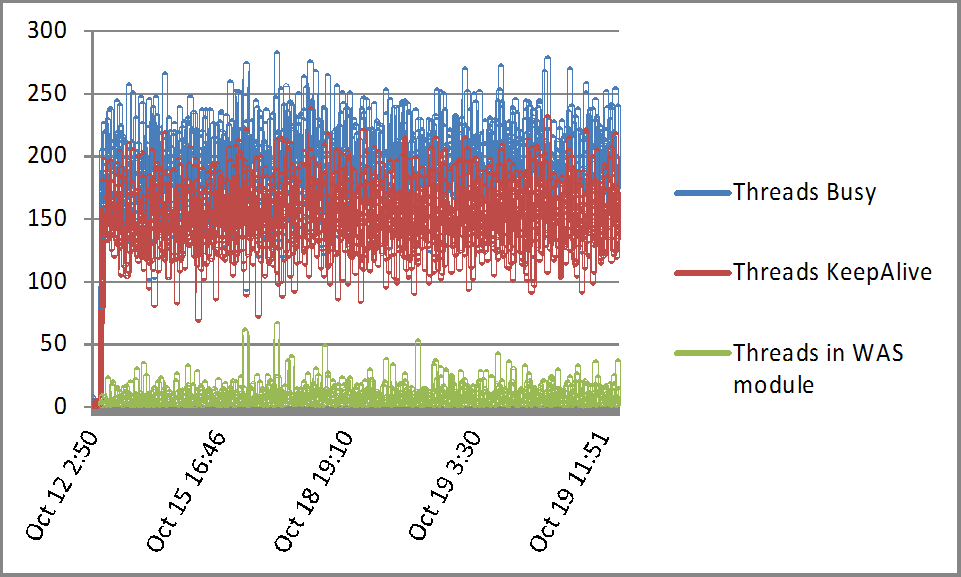

IHS thread usage is monitored to determine how well the IHS server keeps up with the load, and whether IHS is tuned properly to move threads through the system efficiently.

The chart shows how well the IHS server was handling its threads. Of note, the threads in the WAS module do not accumulate over time, which indicates that the WebSphere Application Servers are keeping up with the load.

IHS server-status command monitoring indicates that the web server is processing approximately 91.4 requests/sec.
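One way to sample a request rate like this, sketched below, is to read the machine-readable `server-status?auto` output twice and divide the change in `Total Accesses` by the sampling interval. That gives the rate over the window, whereas the `ReqPerSec` field is a lifetime average since server start. The snapshot text and counter values are hypothetical, chosen only so the arithmetic lands on the reported 91.4 requests/sec; `Total Accesses` is only reported when `ExtendedStatus` is enabled. The final line, relating requests to RPT's 6.25 pages/sec, assumes all IHS traffic came from the RPT agents.

```python
# Sketch: request rate from two IHS/Apache mod_status "?auto" snapshots.
# The snapshot text below is hypothetical; real output has more fields.


def parse_server_status(text: str) -> dict[str, str]:
    """Parse 'Key: value' lines from server-status?auto output."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def request_rate(before: str, after: str, interval_s: float) -> float:
    """Requests/sec between two snapshots taken interval_s seconds apart."""
    start = int(parse_server_status(before)["Total Accesses"])
    end = int(parse_server_status(after)["Total Accesses"])
    return (end - start) / interval_s


# Hypothetical snapshots taken 60 seconds apart.
SNAPSHOT_T0 = "Total Accesses: 1000000\nBusyWorkers: 42\nIdleWorkers: 58\n"
SNAPSHOT_T60 = "Total Accesses: 1005484\nBusyWorkers: 40\nIdleWorkers: 60\n"

if __name__ == "__main__":
    rate = request_rate(SNAPSHOT_T0, SNAPSHOT_T60, 60.0)
    print(f"{rate:.1f} requests/sec")
    # Rough HTTP requests per RPT page, if all IHS traffic came from RPT.
    print(f"{rate / 6.25:.1f} requests per page")
```

With these sample values the sketch prints 91.4 requests/sec and about 14.6 HTTP requests per RPT page, which is plausible because each page also fetches its page elements.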

RPT Page Hit Rate

RPT Page Hit Rate is captured to monitor the load and to help correlate any incidents where the load changes. For the purposes of this test, the load is constant.
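Because the load is meant to be constant, any change in page hit rate is worth correlating with the other charts. A minimal sketch, assuming the page hit rate has already been exported as one sample per fixed interval (the export step, the sample values, and the 10% tolerance are all assumptions), flags intervals that deviate from the median rate of the run.

```python
from statistics import median


def load_excursions(hit_rates, tolerance=0.10):
    """Return (index, rate) for samples that deviate from the run's median
    hit rate by more than `tolerance` (a fraction of the median)."""
    baseline = median(hit_rates)
    return [
        (i, rate)
        for i, rate in enumerate(hit_rates)
        if abs(rate - baseline) > tolerance * baseline
    ]


if __name__ == "__main__":
    samples = [6.2, 6.3, 6.25, 6.2, 4.9, 6.3, 6.25]  # hypothetical pages/sec
    print(load_excursions(samples))
```

The median is used as the baseline so that the excursions themselves do not drag the reference value around; with the sample data only the 4.9 pages/sec interval is flagged.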

RPT Page Element Response over Time

Page element response time is captured to monitor the average page element response time over the course of the run.

RPT Average Page Response Time

RPT Average Page Response Time shows the average response time of all pages over time. This is very useful for determining which pages, if any, are unexpectedly increasing in response time over the seven-day period. In this run, two page response times appear to be increasing steadily, so these two page transactions will be analyzed further to determine whether the increase is a test design issue, with growing data volumes pushing up response times, or a genuine product issue.

The two pages under investigation are "RQM_TestCase_SelectPickFolder" and "RRC_Collection_SelectPickFolder". RQM_TestCase_SelectPickFolder is part of the process of creating a new RM requirement in a specific folder from within a new RQM test case. RRC_Collection_SelectPickFolder is part of the use case in which an RQM quality plan is validated by a new RM requirements collection that is created from within RQM.
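One simple way to separate a page that is "steadily increasing" from one that is merely noisy is to fit a least-squares line to its response times against elapsed time and flag pages whose slope exceeds a threshold. The sketch below assumes per-page samples of (elapsed hours, average response in ms) have already been exported from RPT; the page names, sample values, and 10 ms/day threshold are hypothetical, and this is not necessarily how the follow-up analysis will be done.

```python
from typing import Dict, List, Tuple

Samples = List[Tuple[float, float]]  # (elapsed_hours, response_ms)


def slope(points: Samples) -> float:
    """Ordinary least-squares slope of response time over elapsed time."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    return sxy / sxx


def growing_pages(series: Dict[str, Samples], max_ms_per_day: float) -> Dict[str, float]:
    """Return {page: growth in ms/day} for pages growing faster than the threshold."""
    flagged = {}
    for page, points in series.items():
        per_day = slope(points) * 24.0  # ms/hour -> ms/day
        if per_day > max_ms_per_day:
            flagged[page] = per_day
    return flagged


if __name__ == "__main__":
    # Hypothetical data: one page trending up, one page flat with noise.
    series = {
        "page_A": [(0, 500), (24, 560), (48, 620), (72, 680)],
        "page_B": [(0, 300), (24, 302), (48, 299), (72, 301)],
    }
    print(growing_pages(series, max_ms_per_day=10.0))
```

With the sample data only page_A is flagged, growing at 60 ms/day. An ordinary least-squares slope is sensitive to isolated spikes; a robust estimator such as Theil-Sen would be a reasonable swap for noisy data.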

RPT Byte Transfer Rate

RPT Byte Transfer Rate shows whether the number of bytes sent or received changes over time; a sustained decrease would indicate a drop in throughput.

Appendix A

IBM HTTP Server for WebSphere Application Server - 8.5.5.0

IBM HTTP Server functions as a reverse proxy server implemented via the Web server plug-in for WebSphere Application Server. Configuration details can be found in the CLM infocenter.

HTTP server (httpd.conf):

MaxClients: increase value for high-volume loads (adjust value based on user load)

- Timeout 150

- MaxKeepAliveRequests 100

- KeepAliveTimeout 8

- ThreadLimit 50

- ServerLimit 100

- StartServers 2

- MaxClients 800

- MinSpareThreads 25

- MaxSpareThreads 100

- ThreadsPerChild 50

- MaxRequestsPerChild 0

Web server plug-in (plugin-cfg.xml):

OS Configuration:

- max user processes = unlimited

IBM WebSphere Application Server Network Deployment for JTS, Admin, and CLMHelp applications - 8.5.5.0

JVM settings:

- GC policy and arguments, max and init heap sizes:

-Xgcpolicy:gencon -Xmx8g -Xms8g -Xmn1g -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 -XX:MaxDirectMemorySize=1g

Thread pools:

- Maximum WebContainer = Minimum WebContainer = 200

OS Configuration:

System wide resources for the app server process owner:

- max user processes = unlimited

- open files = 65536

IBM WebSphere Application Server Network Deployment for CCM application - 8.5.5.0

JVM settings:

- GC policy and arguments, max and init heap sizes:

-Xgcpolicy:gencon -Xmx8g -Xms4g -Xmn512m -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 -XX:MaxDirectMemorySize=1g

Thread pools:

- Maximum WebContainer = Minimum WebContainer = 200

OS Configuration:

System wide resources for the app server process owner:

- max user processes = unlimited

- open files = 65536

IBM WebSphere Application Server Network Deployment for QM application - 8.5.5.0

JVM settings:

- GC policy and arguments, max and init heap sizes:

-Xgcpolicy:gencon -Xmx4g -Xms4g -Xmn512m -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 -XX:MaxDirectMemorySize=1g

Thread pools:

- Maximum WebContainer = Minimum WebContainer = 200

OS Configuration:

System wide resources for the app server process owner:

- max user processes = unlimited

- open files = 65536

IBM WebSphere Application Server Network Deployment for RM, Converter applications - 8.5.5.0

JVM settings:

- GC policy and arguments, max and init heap sizes:

-Xgcpolicy:gencon -Xmx4g -Xms4g -Xmn512m -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 -XX:MaxDirectMemorySize=1g

Thread pools:

- Maximum WebContainer = Minimum WebContainer = 200

OS Configuration:

System wide resources for the app server process owner:

- max user processes = unlimited

- open files = 65536

| Product | Version | Highlights for configurations under test |
| --- | --- | --- |
| DB2 | DB2 10.1 | |
| LDAP server | | |
| License server | | Hosted locally by JTS server |
| RPT Workbench | 8.3.0.3 | Defaults |
| RPT agents | 8.3.0.3 | Defaults |
| Network | | Shared subnet within test lab |
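The httpd.conf values for IHS in Appendix A can be sanity-checked against the relationships the Apache worker MPM documentation describes: ThreadsPerChild must not exceed ThreadLimit, MaxClients must fit within ServerLimit x ThreadsPerChild and should be a multiple of ThreadsPerChild, and MaxSpareThreads should be at least MinSpareThreads + ThreadsPerChild. The sketch below assumes IHS on Linux runs the worker MPM and that these rules apply to this IHS level; it is a convenience check, not an IBM tool.

```python
# Sanity checks for Apache worker MPM settings (IHS on Linux is assumed
# to use the worker MPM). Values are the httpd.conf settings in Appendix A.
HTTPD = {
    "ThreadsPerChild": 50,
    "ThreadLimit": 50,
    "ServerLimit": 100,
    "MaxClients": 800,
    "MinSpareThreads": 25,
    "MaxSpareThreads": 100,
}


def check_worker_mpm(c):
    """Return a list of rule violations (an empty list means all checks pass)."""
    problems = []
    if c["ThreadsPerChild"] > c["ThreadLimit"]:
        problems.append("ThreadsPerChild exceeds ThreadLimit")
    if c["MaxClients"] > c["ServerLimit"] * c["ThreadsPerChild"]:
        problems.append("MaxClients exceeds ServerLimit * ThreadsPerChild")
    if c["MaxClients"] % c["ThreadsPerChild"] != 0:
        problems.append("MaxClients is not a multiple of ThreadsPerChild")
    if c["MaxSpareThreads"] < c["MinSpareThreads"] + c["ThreadsPerChild"]:
        problems.append("MaxSpareThreads below MinSpareThreads + ThreadsPerChild")
    return problems


def max_child_processes(c):
    """Child processes needed when all MaxClients threads are busy."""
    return c["MaxClients"] // c["ThreadsPerChild"]


if __name__ == "__main__":
    print(check_worker_mpm(HTTPD))
    print(max_child_processes(HTTPD), "child processes at full load")
```

For the tested values every check passes, and a full load of 800 clients needs 16 child processes. Because ServerLimit x ThreadsPerChild is 5000, MaxClients could be raised (as the configuration note suggests for high-volume loads) without also raising ServerLimit.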

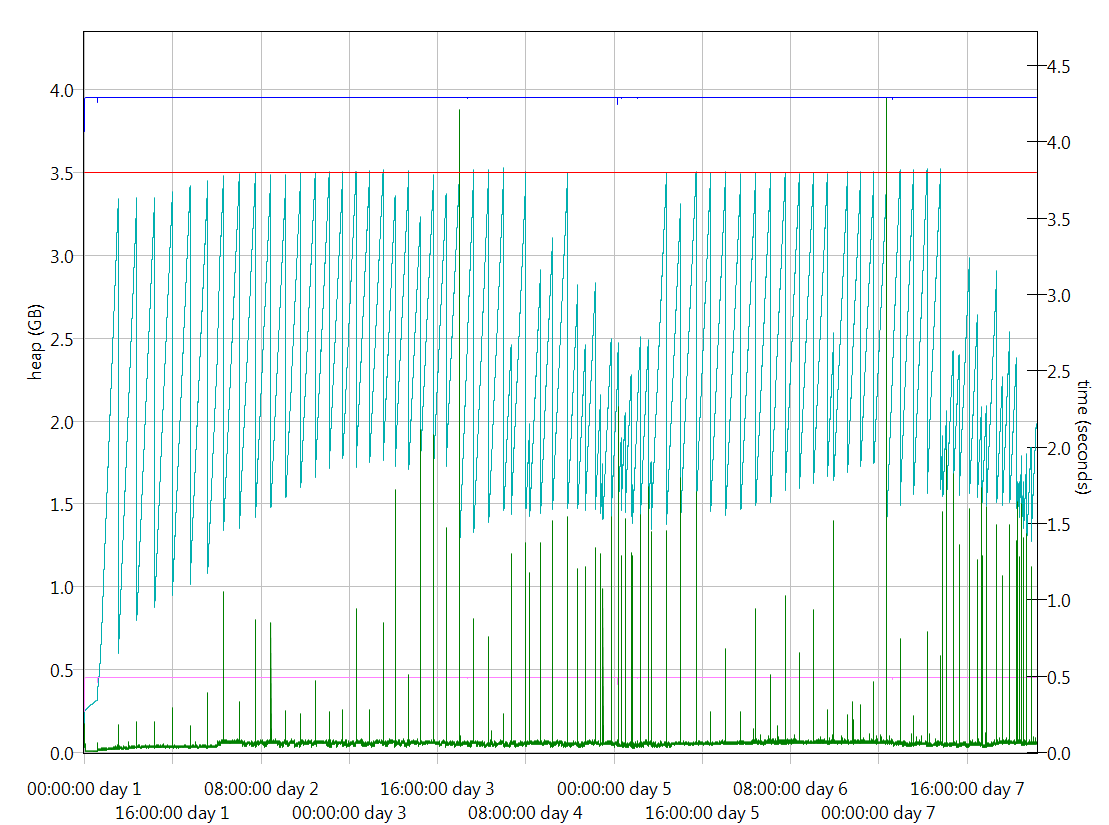

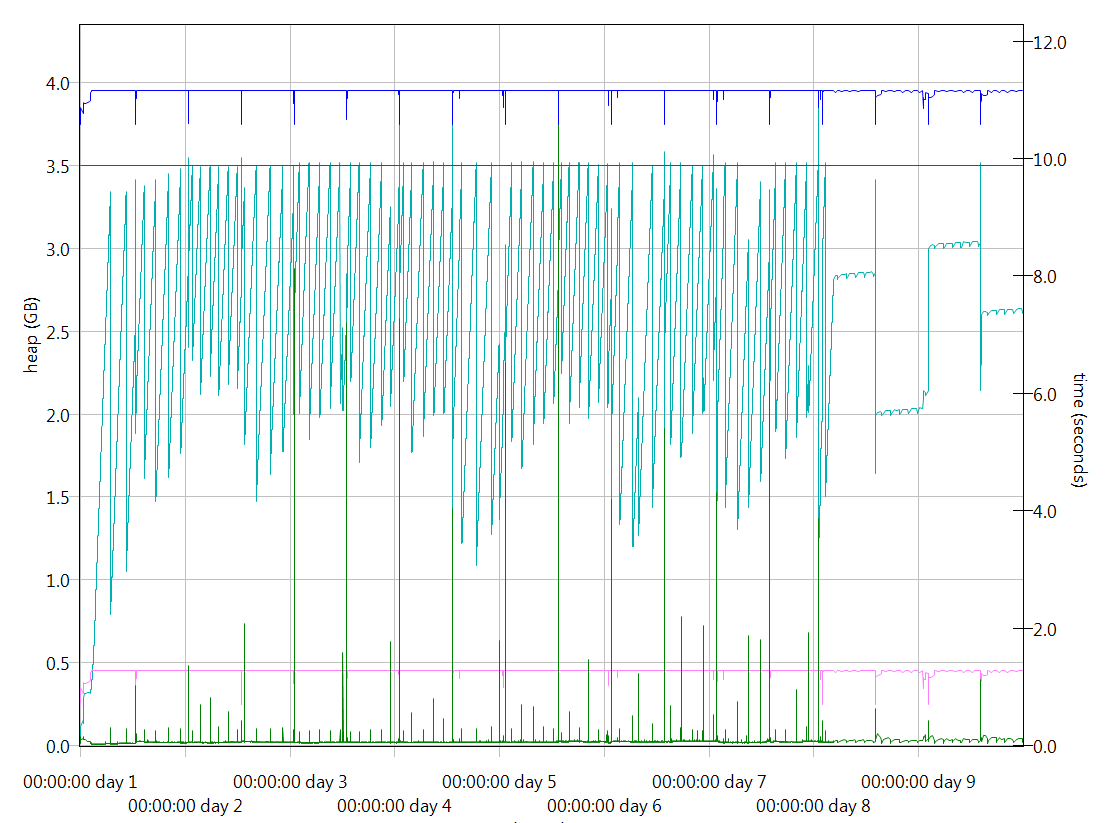

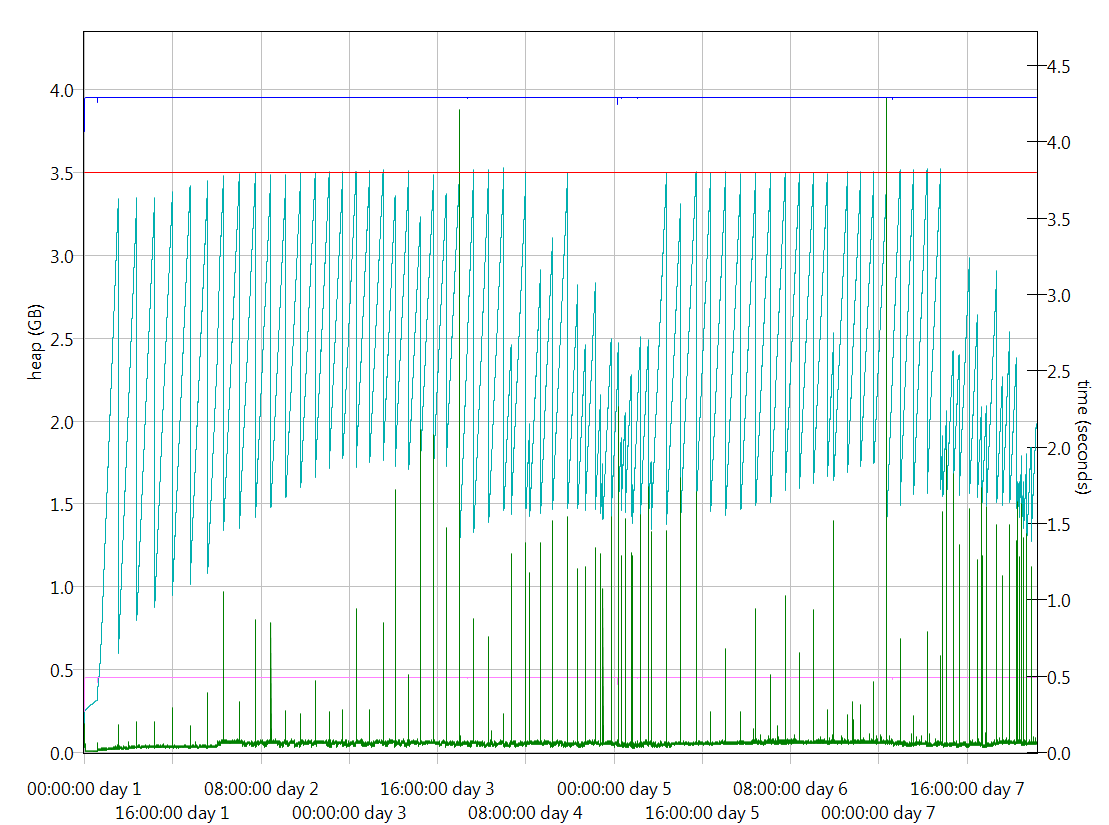

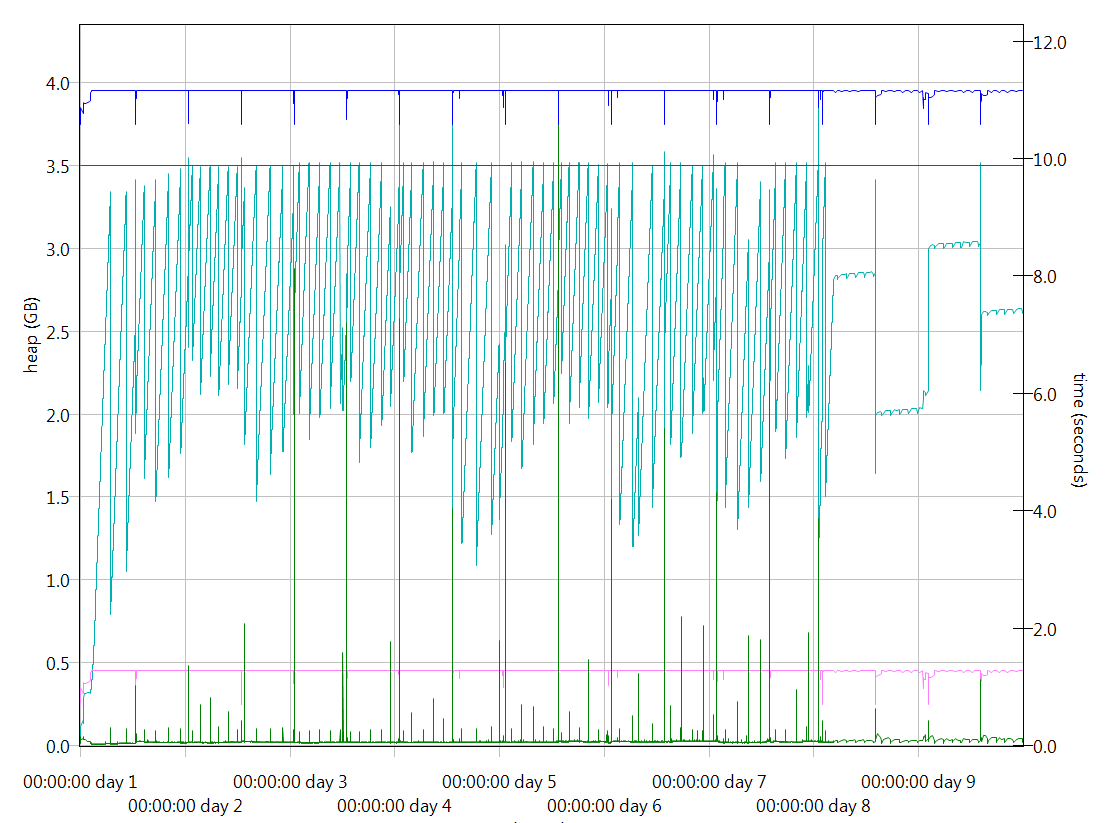

Garbage collection

Verbose garbage collection analysis helps determine how memory and garbage collection perform for the CLM applications running our use cases. Optimal JVM configuration depends greatly on the usage pattern. Our goal is to provide guidance on where to start and on which key tuning factors affect performance.
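The JVM arguments in Appendix A pair the gencon policy with a fixed nursery size (-Xmn), and the heap sizes interact with the memory listed for each VM in the topology table. The sketch below extracts -Xms, -Xmx, and -Xmn from an argument string and reports the nursery's share of the maximum heap and the maximum heap's share of VM memory. It treats the topology table's "GB" as GiB and ignores native and direct memory (such as -XX:MaxDirectMemorySize), both simplifying assumptions; it does not analyze verbose GC logs.

```python
import re

_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
# Matches -Xms, -Xmx and -Xmn with an optional k/m/g suffix (e.g. -Xmn512m).
# fullmatch() below keeps look-alike flags such as -Xmso256k from matching.
_HEAP_FLAG = re.compile(r"-Xm([sxn])(\d+)([kKmMgG]?)")
GIB = 1024 ** 3


def heap_flags(jvm_args: str) -> dict[str, int]:
    """Return {'-Xms': bytes, '-Xmx': bytes, '-Xmn': bytes} for the flags present."""
    sizes = {}
    for arg in jvm_args.split():
        m = _HEAP_FLAG.fullmatch(arg)
        if m:
            sizes["-Xm" + m.group(1)] = int(m.group(2)) * _UNITS[m.group(3).lower()]
    return sizes


def heap_summary(jvm_args: str, vm_memory_gib: float) -> dict[str, float]:
    """Nursery share of the max heap, and max heap share of the VM's memory."""
    sizes = heap_flags(jvm_args)
    return {
        "max_heap_gib": sizes["-Xmx"] / GIB,
        "nursery_fraction": sizes["-Xmn"] / sizes["-Xmx"],
        "heap_share_of_vm": sizes["-Xmx"] / (vm_memory_gib * GIB),
    }


if __name__ == "__main__":
    common = "-Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 -XX:MaxDirectMemorySize=1g"
    # JVM arguments from Appendix A; VM memory (GB) from the topology table.
    servers = {
        "JTS": ("-Xgcpolicy:gencon -Xmx8g -Xms8g -Xmn1g " + common, 16),
        "CCM": ("-Xgcpolicy:gencon -Xmx8g -Xms4g -Xmn512m " + common, 8),
        "QM": ("-Xgcpolicy:gencon -Xmx4g -Xms4g -Xmn512m " + common, 8),
        "RM": ("-Xgcpolicy:gencon -Xmx4g -Xms4g -Xmn512m " + common, 8),
    }
    for name, (args, vm_gib) in servers.items():
        s = heap_summary(args, vm_gib)
        print(f"{name}: max heap {s['max_heap_gib']:.0f} GiB, "
              f"nursery {s['nursery_fraction']:.2%} of max heap, "
              f"max heap {s['heap_share_of_vm']:.0%} of VM memory")
```

For CCM the sketch reports a maximum heap of 100% of VM memory, because -Xmx8g matches the 8GB listed for that VM. That may be worth double-checking against the deployed configuration, since the JVM also needs native memory outside the heap.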

JTS

CCM

QM

RM

For more information

About the authors

Mark Gravina

Questions and comments:

- What other performance information would you like to see here?

- Do you have performance scenarios to share?

- Do you have scenarios that are not addressed in documentation?

- Where are you having problems in performance?
