Rational Team Concert for System z sizing report: RTC 4.0.4 release

Authors: LuYuliang
Build basis: Rational Team Concert 4.0.4

Introduction

This article provides the Rational Team Concert 4.0.4 sizing report specifically for z/OS, and is part of the findings from RTC for System z performance testing. We use the Rational Team Concert test harness to simulate actual client workloads and to validate our scalability requirements. For more information about the test harness, first read the Collaborative Lifecycle Management performance report: RTC 4.0.4 release. Since version 4.0 was released, we have focused on performance testing using standard topologies. For more information related to the system requirements, see System Requirements in the Installing, Upgrading and Migrating section.

Disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer's ability to evaluate and integrate them into the customer's operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment. Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user's job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here. This testing was done as a way to compare and characterize the differences in performance between different versions of the product. The results shown here should thus be looked at as a comparison of the contrasting performance between different versions, and not as an absolute benchmark of performance.

What our tests measure

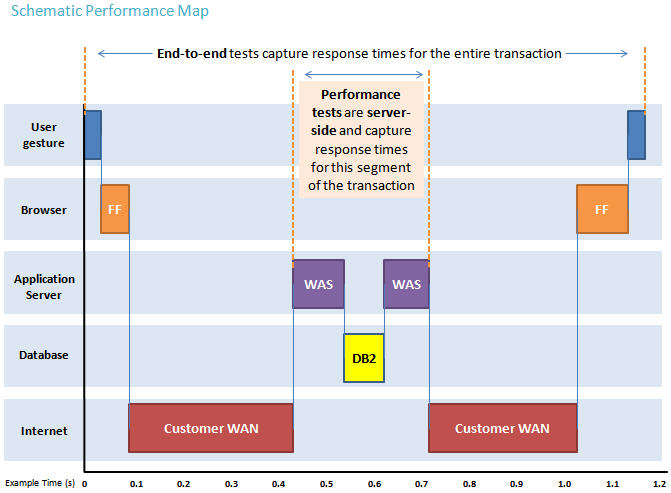

We predominantly use automated tooling such as Rational Performance Tester (RPT) to simulate a workload normally generated by client software such as the Eclipse client or web browsers. All response times listed are those measured by our automated tooling, not by a client. The diagram above shows, at a very high level, which aspects of the entire end-to-end experience (human end user to server and back again) our performance tests simulate. The tests described in this article simulate the segment of the end-to-end transaction indicated in the middle of the diagram. Performance tests are server-side and capture response times for this segment of the transaction.

Topology

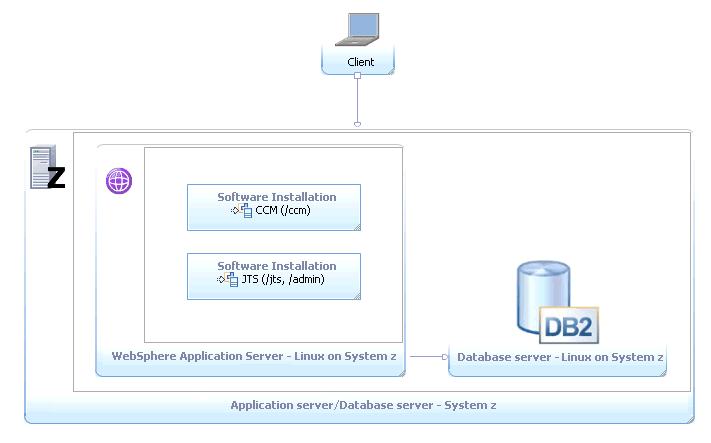

This sizing report is specifically for the following topologies:

- Single-Tier Mode: Linux for System z standalone topology, which deploys the application server on Linux for System z and DB2 on Linux for System z

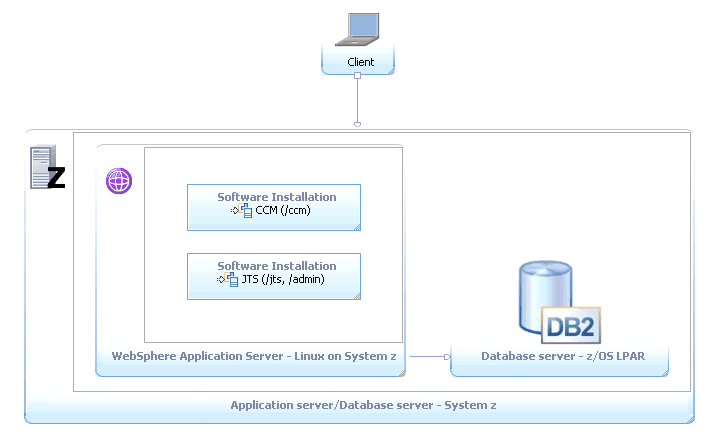

- Dual-Tier Mode: standard topology - E5, which deploys the application server on Linux for System z and DB2 on z/OS

Findings

Small-scale enterprise deployment

For this sizing report, a small-scale deployment is a configuration of between 100 and 700 users on either a single-tier or a dual-tier setup. In a single-tier setup, the application server and database run on the same LPAR. In a dual-tier setup, the application server and database run on different LPARs.

The following table lists the system configurations we used for a small-scale single-tier setup scenario:

| Resource | Application server and database: Linux for System z (single-tier configuration) |
|---|---|
| Disk | DS8000 with PAV enabled |
| Operating system | SUSE Linux Enterprise Server 10 (s390x) |
| Application server | WebSphere Application Server Network Deployment v8.0.0.3 |
| Database | DB2 9.7.0.5 |

The following table lists the system configurations we recommend for a small-scale single-tier setup scenario:

| Resource | Application server and database: Linux for System z (single-tier configuration) |
|---|---|
| CPU | 1700 MIPS or higher |
| Memory | 6 GB or higher |

The following tables list the system configurations we used for a small-scale dual-tier setup scenario:

| Resource | Application server: Linux for System z (dual-tier configuration) |
|---|---|
| Disk | DS8000 with PAV enabled |
| Operating system | SUSE Linux Enterprise Server 10 (s390x) |
| Application server | WebSphere Application Server Network Deployment v8.0.0.3 |

| Resource | Database: IBM System z10 (dual-tier configuration) |
|---|---|
| Disk | DS8000 with PAV enabled |
| Operating system | z/OS 1.12 |
| Database | DB2 on z/OS v10.1 |

The following tables list the system configurations we recommend for a small-scale dual-tier setup scenario:

| Resource | Application server: Linux for System z (dual-tier configuration) |
|---|---|
| CPU | 1700 MIPS or higher |
| Memory | 6 GB or higher |

| Resource | Database: IBM System z10 (dual-tier configuration) |
|---|---|
| CPU | 200 MIPS or higher |
| Memory | 6 GB or higher |
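To see what the small-scale recommendations add up to per topology, the following is a minimal sketch that encodes them as data and sums the minimums. The `LparMinimum` type and `totals` helper are hypothetical names introduced for illustration, not part of any IBM tooling, and summing MIPS across LPARs is only an aggregate view, since the Linux for System z and z/OS LPARs may draw on different processor pools.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LparMinimum:
    """Recommended minimum resources for one LPAR (hypothetical helper type)."""
    role: str
    cpu_mips: int
    memory_gb: int


# Small-scale (100-700 users) recommendations from this report.
SMALL_SCALE = {
    "single-tier": [
        LparMinimum("application server and database (Linux for System z)", 1700, 6),
    ],
    "dual-tier": [
        LparMinimum("application server (Linux for System z)", 1700, 6),
        LparMinimum("database (DB2 on z/OS, IBM System z10)", 200, 6),
    ],
}


def totals(lpars: list) -> tuple:
    """Sum the minimum MIPS and memory over all LPARs in a topology."""
    return (
        sum(lpar.cpu_mips for lpar in lpars),
        sum(lpar.memory_gb for lpar in lpars),
    )


for tier, lpars in SMALL_SCALE.items():
    mips, memory = totals(lpars)
    print(f"{tier}: {len(lpars)} LPAR(s), {mips} MIPS, {memory} GB")
# single-tier: 1 LPAR(s), 1700 MIPS, 6 GB
# dual-tier: 2 LPAR(s), 1900 MIPS, 12 GB
```

The comparison shows that moving DB2 to z/OS adds comparatively little CPU (200 MIPS) but requires a second LPAR with its own 6 GB of memory.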

Large-scale enterprise deployment

For this sizing report, a large-scale deployment is a configuration of between 700 and 2000 users on either a single-tier or a dual-tier setup. In a single-tier setup, the application server and database run on the same LPAR. In a dual-tier setup, the application server and database run on different LPARs.

The following table lists the system configurations we used for a large-scale single-tier setup scenario:

| Resource | Application server and database: Linux for System z (single-tier configuration) |
|---|---|
| Disk | DS8000 with PAV enabled |
| Operating system | SUSE Linux Enterprise Server 10 (s390x) |
| Application server | WebSphere Application Server Network Deployment v8.0.0.3 |
| Database | DB2 9.7.0.5 |

The following table lists the system configurations we recommend for a large-scale single-tier setup scenario:

| Resource | Application server and database: Linux for System z (single-tier configuration) |
|---|---|
| CPU | 2700 MIPS or higher |
| Memory | 10 GB or higher |

The following tables list the system configurations we used for a large-scale dual-tier setup scenario:

| Resource | Application server: Linux for System z (dual-tier configuration) |
|---|---|
| Disk | DS8000 with PAV enabled |
| Operating system | SUSE Linux Enterprise Server 10 (s390x) |
| Application server | WebSphere Application Server Network Deployment v8.0.0.3 |

| Resource | Database: IBM System z10 (dual-tier configuration) |
|---|---|
| Disk | DS8000 with PAV enabled |
| Operating system | z/OS 1.12 |
| Database | DB2 on z/OS v10.1 |

The following tables list the system configurations we recommend for a large-scale dual-tier setup scenario:

| Resource | Application server: Linux for System z (dual-tier configuration) |
|---|---|
| CPU | 2700 MIPS or higher |
| Memory | 10 GB or higher |

| Resource | Database: IBM System z10 (dual-tier configuration) |
|---|---|
| CPU | 300 MIPS or higher |
| Memory | 6 GB or higher |
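The user-count ranges and recommended minimums for both scales can be combined into a quick pre-deployment check. The following is a minimal sketch, assuming a planned deployment is described as a mapping from a hypothetical role label ("app+db" for the single-tier LPAR, "app" and "db" for the two dual-tier LPARs) to a (MIPS, GB) pair; the function names are illustrative only. Because the report's small and large ranges both include 700 users, the sketch assigns exactly 700 users to the large-scale configuration as a conservative assumption.

```python
# Recommended minimums per LPAR as (CPU in MIPS, memory in GB), from this
# report. Role labels are hypothetical: "app+db" is the single-tier LPAR,
# "app" and "db" are the two dual-tier LPARs.
RECOMMENDED = {
    ("small", "single-tier"): {"app+db": (1700, 6)},
    ("small", "dual-tier"): {"app": (1700, 6), "db": (200, 6)},
    ("large", "single-tier"): {"app+db": (2700, 10)},
    ("large", "dual-tier"): {"app": (2700, 10), "db": (300, 6)},
}


def scale_for(users: int) -> str:
    """Map a user count to the report's deployment scale.

    Small is 100-700 users and large is 700-2000 users; the shared
    700-user boundary is assigned to "large" as a conservative assumption.
    """
    if 100 <= users < 700:
        return "small"
    if 700 <= users <= 2000:
        return "large"
    raise ValueError(f"{users} users is outside the 100-2000 range covered here")


def shortfalls(users: int, tier: str, plan: dict) -> list:
    """List every resource in `plan` that is below the recommended minimum.

    `plan` maps a role label to a (MIPS, GB) pair; a missing role counts
    as zero resources.
    """
    problems = []
    for role, (min_mips, min_gb) in RECOMMENDED[(scale_for(users), tier)].items():
        mips, gb = plan.get(role, (0, 0))
        if mips < min_mips:
            problems.append(f"{role}: {mips} MIPS < {min_mips} MIPS")
        if gb < min_gb:
            problems.append(f"{role}: {gb} GB < {min_gb} GB")
    return problems


# A hypothetical 1200-user dual-tier plan with an undersized database LPAR.
print(shortfalls(1200, "dual-tier", {"app": (3000, 12), "db": (250, 6)}))
# ['db: 250 MIPS < 300 MIPS']
```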

Rational Build Agent

The Rational Build Agent is required for System z builds. The data in this section was obtained through benchmark testing; read Rational Team Concert For z/OS Performance Comparison Between Releases for more information.

The following table lists the system configurations we used for the Rational Build Agent:

| Resource | Rational Build Agent on IBM System z (z/OS) |
|---|---|
| Disk | DS8000 with PAV enabled |
| Operating system | z/OS 1.12 |
| Database | DB2 on z/OS v10.1 |

The following table lists the system configurations we recommend for the Rational Build Agent:

| Resource | Rational Build Agent on IBM System z (z/OS) |
|---|---|
| CPU | 100 MIPS or higher |
| Memory | 2 GB or higher |

Appendix A - WebSphere Configuration

| Product | Version | Configuration notes |
|---|---|---|
| IBM WebSphere Application Server Network Deployment | 8.0.0.3 | JVM settings: small business scale: -Xmx4g -Xms4g -Xmn512m -Xgcpolicy:gencon -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000; large business scale: -Xmx6g -Xms6g -Xmn512m -Xgcpolicy:gencon -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000. Thread pools: |
| DB2 | DB2 on z/OS v10.1 | |
| License server | Same as CLM version | Hosted locally by JTS server |
| RPT workbench | 8.2.1.5 | Defaults |
| RPT agents | 8.2.1.5 | Defaults |
| Network | Shared subnet within test lab | |
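The WebSphere JVM settings above fix the heap size (-Xms equal to -Xmx) and set a 512 MB nursery for the gencon garbage collection policy. The following sketch parses those option strings and reports the resulting sizes. It is an illustrative helper, not IBM tooling, and it assumes that only -Xmx, -Xms and -Xmn matter for the calculation and that the tenure area is approximately the maximum heap minus the nursery, as in gencon's nursery/tenure split.

```python
import re

# WebSphere JVM arguments as listed in Appendix A of this report.
JVM_ARGS = {
    "small": "-Xmx4g -Xms4g -Xmn512m -Xgcpolicy:gencon -Xcompressedrefs "
             "-Xgc:preferredHeapBase=0x100000000",
    "large": "-Xmx6g -Xms6g -Xmn512m -Xgcpolicy:gencon -Xcompressedrefs "
             "-Xgc:preferredHeapBase=0x100000000",
}

# Binary multipliers for the JVM size suffixes k, m and g (no suffix = bytes).
_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def to_bytes(size: str) -> int:
    """Convert a JVM size such as '4g' or '512m' to a number of bytes."""
    match = re.fullmatch(r"(\d+)([kmg]?)", size.lower())
    if match is None:
        raise ValueError(f"unrecognised size: {size!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit]


def heap_layout(args: str) -> dict:
    """Report heap sizes in MiB from the -Xmx, -Xms and -Xmn options."""
    found = {}
    for opt in args.split():
        # Matches -Xmx<size>, -Xms<size> and -Xmn<size> only; other options
        # such as -Xgcpolicy:gencon or -Xcompressedrefs are ignored.
        match = re.fullmatch(r"-Xm([xsn])(\d+[kKmMgG]?)", opt)
        if match:
            found[match.group(1)] = to_bytes(match.group(2)) // 1024 ** 2
    return {
        "max_heap_mib": found["x"],
        "initial_heap_mib": found["s"],
        "nursery_mib": found["n"],
        # Approximation: under gencon, heap not used by the nursery is tenure.
        "tenure_mib": found["x"] - found["n"],
        # -Xms equal to -Xmx means the heap starts at its maximum size.
        "fixed_heap": found["x"] == found["s"],
    }


for scale, args in JVM_ARGS.items():
    print(scale, heap_layout(args))
```

With the small-scale settings this gives 3584 MiB (3.5 GB) of tenure space and with the large-scale settings 5632 MiB (5.5 GB); only the overall heap grows between the two scales, while the nursery stays at 512 MB.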

Appendix B - History

Related Links:

About the authors

LuYuliang has been a Software Engineer at IBM for over 3 years. He is part of the Rational Performance Engineering group.

Questions and comments:

- What other performance information would you like to see here?

- Do you have performance scenarios to share?

- Do you have scenarios that are not addressed in documentation?

- Where are you having problems in performance?
