7.0.3 Performance Update: IBM Engineering Test Management (ETM)

Authors: SkyeBischoff and JayrajsinhGohil

Build basis: IBM Engineering Test Management 7.0.3

Standard disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer's ability to evaluate and integrate them into the customer's operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment. Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user's job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here. This testing was done as a way to compare and characterize the differences in performance between different versions of the product. The results shown here should thus be looked at as a comparison of the contrasting performance between different versions, and not as an absolute benchmark of performance.

Updates from previous report:

This report updates the IBM Engineering Test Management (ETM) performance and scalability test results from the 7.0.2 release to the 7.0.3 release.

Datashape

In this release, the datashape has evolved as outlined below:

- Number of components: the total number of components increased from 2,511 to 10,011

- Repository size[1]: increased from 22 million to 34 million total artifacts, or by 55%

- ETM Database size on disk has grown from 1.2TB to 2.2TB

- Component size[1]:

    - added small (<1K), medium (5K), and large (50K) sizes

    - keeping extra large (500K) and extra-extra-large (10M) sizes
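The growth figures in the list above can be reproduced with a few lines of arithmetic. The following is a minimal sketch; the helper name `growth_percent` is an illustration only, and the inputs are the before/after values quoted in the list.

```python
def growth_percent(before: float, after: float) -> float:
    """Return the relative growth from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# Before/after values quoted in the list above.
artifact_growth = growth_percent(22e6, 34e6)      # total artifacts
database_growth = growth_percent(1.2, 2.2)        # database size on disk, in TB
component_growth = growth_percent(2511, 10011)    # number of components

print(f"artifacts:  {artifact_growth:.1f}%")
print(f"database:   {database_growth:.1f}%")
print(f"components: {component_growth:.1f}%")
```

The artifact growth comes out at about 54.5%, which rounds to the 55% quoted above.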

The sizes and counts of the different components are summarized in the table below.

| Counts | Extra-extra-large component (10M) | Extra-large component (500K) | Large (50K) | Medium (5K) | Small (<1K) | Sum in Repo |
|---|---|---|---|---|---|---|
| test plans | 1,681 | 50 | 6 | 4 | 1 | 18,131 |
| test cases | 1,000,207 | 30,000 | 3,000 | 400 | 20 | 2,510,207 |
| test scripts | 1,000,209 | 30,000 | 3,000 | 400 | 20 | 2,510,209 |
| test suites | 100,020 | 3,000 | 300 | 40 | 10 | 315,020 |
| test case execution records | 4,000,800 | 120,000 | 12,000 | 1,200 | 40 | 9,000,800 |
| test case results | 4,000,921 | 360,000 | 36,000 | 2,400 | 80 | 16,520,921 |
| test suite results | 500,100 | 15,000 | 1,500 | 160 | 20 | 1,263,100 |
| test execution schedules | 47,467 | 1,424 | 500 | 200 | 20 | 668,891 |
| test phases and test environments | 31,636 | 800 | 92 | 63 | 20 | 324,236 |
| build definitions and build records | 33,533 | 1,006 | 120 | 70 | 25 | 384,539 |
| Total # of artifacts per component | 10,716,574 | 561,280 | 56,518 | 4,937 | 256 | 33,516,054 |
| Total # of components in repository | 1 | 10 | 50 | 450 | 2,000 | 10,011 |
| Newly added in 7.0.3 | N | N | Y | Y | Y | - |
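When transcribing a table like this into a test-data generator or a report, it can help to confirm that the totals still add up. The sketch below is an illustration only. It copies the per-component counts from the table and checks that each size column sums to the "Total # of artifacts per component" row, and that the "Sum in Repo" column sums to its stated total. The names `per_component`, `stated_totals`, `repo_sums`, and `column_mismatches` are hypothetical.

```python
# Counts per component, copied from the table above. Each list follows the
# table's row order, from test plans down to build definitions and build records.
per_component = {
    "extra-extra-large (10M)": [1681, 1000207, 1000209, 100020, 4000800,
                                4000921, 500100, 47467, 31636, 33533],
    "extra-large (500K)": [50, 30000, 30000, 3000, 120000,
                           360000, 15000, 1424, 800, 1006],
    "large (50K)": [6, 3000, 3000, 300, 12000, 36000, 1500, 500, 92, 120],
    "medium (5K)": [4, 400, 400, 40, 1200, 2400, 160, 200, 63, 70],
    "small (<1K)": [1, 20, 20, 10, 40, 80, 20, 20, 20, 25],
}

# "Total # of artifacts per component" row, as stated in the table.
stated_totals = {
    "extra-extra-large (10M)": 10716574,
    "extra-large (500K)": 561280,
    "large (50K)": 56518,
    "medium (5K)": 4937,
    "small (<1K)": 256,
}

# "Sum in Repo" column (same row order) and its stated grand total.
repo_sums = [18131, 2510207, 2510209, 315020, 9000800,
             16520921, 1263100, 668891, 324236, 384539]
stated_repo_total = 33516054


def column_mismatches(columns, totals):
    """Return the column names whose counts do not sum to the stated total."""
    return [name for name, counts in columns.items()
            if sum(counts) != totals[name]]


print("columns with mismatched totals:",
      column_mismatches(per_component, stated_totals))
print("repo total matches:", sum(repo_sums) == stated_repo_total)
```

With the values as transcribed here, both checks pass.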

Configuration test coverage

For this release, the performance and load tests of the small, medium, and large components are evaluated under a Global Configuration (GC). The GC component consists of all 10,000 ETM components. The extra-large and extra-extra-large components continue to be tested against their local ETM configurations, including both streams and baselines, for regression purposes.

Disclaimer

The extra-extra-large component was created initially to expedite the growth of the repository without consideration of artifact versions (i.e. the artifacts were generated in an opt-out environment before the Configuration Management feature was enabled). The test against this particular component was initially experimental during 7.0, but it continues to serve as a regression test for the performance of IBM Engineering Test Management (ETM). The results using this component can represent edge cases due to its huge size, so the performance measurements for this component shown in the report should be read as a comparison of the performance of different releases in the context of the datashape. They should not be treated as a performance benchmark.

7.0.3 GA vs 7.0.3 iFix004 Migration Service Issue

It's important to be aware that the results below were measured on 7.0.3 iFix004. This was specifically to pick up fixes for a known migration service issue that occurs on some very large-scale ETM servers. The issue is reduced server performance while one of the background migration processes continues to run. It is not typically an issue for most environments, but the fix was important for our environment, so our team encourages large-scale users to pick up the newest ETM iFix to avoid the issue.

Summary of regression test results

We continue to find the following performance characteristics as we keep increasing the size of the repository.

Component performance vs. component size

For any given repository size, generally:

- The smaller a component, the faster the page response times for that component. Under the 34-million repository, for components ranging in size from small to large, 99% of the individual page response times are under 2 seconds (with the exception that saving a test plan may take 5 seconds because of the large number of iterations defined). For the extra-large component, by contrast, 11 out of 120 pages exceed 5 seconds. Most of these slower pages browse or search an artifact type that has a larger total count, including searching/filtering test case results (9 seconds) and test case records (5.5 seconds) in the default views within a stream.

Component performance vs. growing repository size

- The general performance of a given component[2] degrades as the repository size grows. Although the measurements based on the extra-extra-large component stress-tested some edge cases, we observed a slight projected degradation when comparing the 20-million repository with smaller repositories. The degradation for the 500K component is still minor under the 34-million repository (the average page response time across all pages degraded by less than 10% compared with the 20-million repository).

- Within a component context, the pages that are more sensitive to the size of the entire repository include those that involve the largest test artifact counts. Examples seen in our test environment are the pages Browse Test Case Execution Records (total count of 4 million in a single component, or 30% of total artifact count in the repository) and Browse Test Case Results, along with the related pages to search/filter these test artifacts.
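One way to express the comparison described above is to take page response times from two runs and compute both the change in the average across all pages and the pages that degraded the most. The following is a minimal sketch under stated assumptions: the function names are hypothetical, the timings are made-up placeholder values (not measurements from this report), and "Browse Test Plans" is a placeholder page name.

```python
from statistics import mean


def average_degradation_percent(baseline, current):
    """Percent change in the mean response time over pages present in both runs."""
    pages = baseline.keys() & current.keys()
    if not pages:
        raise ValueError("the two runs share no pages")
    base_avg = mean(baseline[p] for p in pages)
    cur_avg = mean(current[p] for p in pages)
    return (cur_avg - base_avg) / base_avg * 100.0


def most_sensitive_pages(baseline, current, top=3):
    """Pages ordered by per-page percent degradation, largest first."""
    pages = baseline.keys() & current.keys()
    change = {p: (current[p] - baseline[p]) / baseline[p] * 100.0 for p in pages}
    return sorted(change, key=change.get, reverse=True)[:top]


# Placeholder timings in seconds. These are NOT results from this report.
run_20m = {
    "Browse Test Case Execution Records": 4.0,
    "Browse Test Case Results": 6.0,
    "Browse Test Plans": 1.0,
}
run_34m = {
    "Browse Test Case Execution Records": 4.4,
    "Browse Test Case Results": 6.5,
    "Browse Test Plans": 1.02,
}

print(f"average degradation: {average_degradation_percent(run_20m, run_34m):.1f}%")
print("most sensitive pages:", most_sensitive_pages(run_20m, run_34m))
```

The sketch compares the mean of all page times between runs. Averaging the per-page percentages instead would weight fast and slow pages equally and can give a different figure, so the two methods should not be mixed when comparing releases.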

Regression Test Results for ETM 7.0.3 with 34 million resources on Oracle

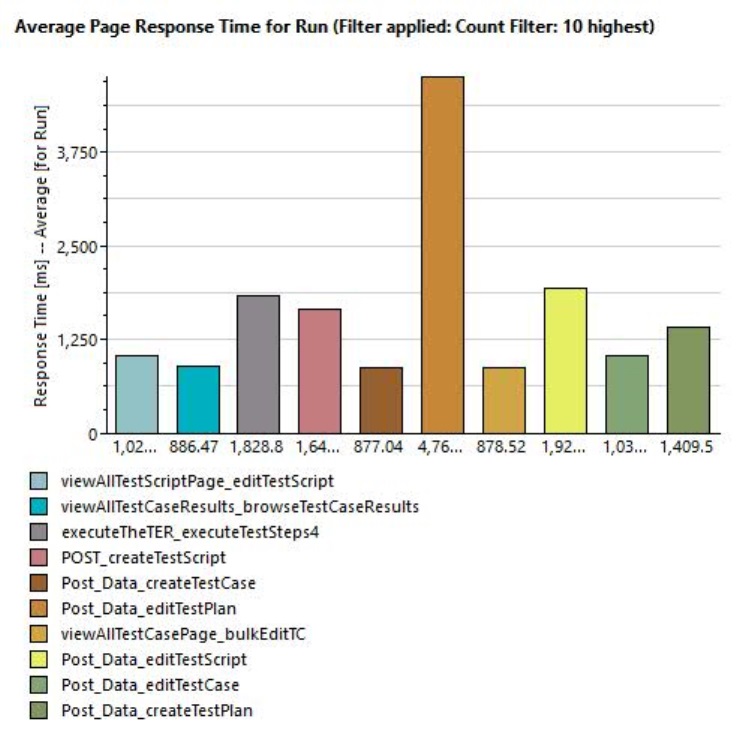

The chart shows our ETM 7.0.3 performance benchmark with:

- 1000 concurrent users

- 34 million resources

- Oracle 19c

- Users accessing small components inside a GC configuration with 10,000 component leaves

What's not in scope of test for this release

As in all previous releases, scalability that may be affected by any of the following dimensions is not covered in the 7.0.3 tests:

- The growth in the number of baselines for a given component or stream

- The growth in the number of versions for a given configuration

- The growth in the number of states for a given artifact

References:

- Topology and hardware

- Application server and database version and configuration under test

- Performance load

- Page by page performance results in 7.0.1

- Test load based tunings

Related topics: [[702PerformanceReportIBMEngineeringTestManagement][ETM 7.0.2 Performance Report]], ETM 7.0.1 Performance Report, Engineering Test Management (ETM) Adapter Deployment Guide, DOORS Next performance guide

| I | Attachment | Action | Size | Date | Who | Comment |
|---|---|---|---|---|---|---|
| | Top10Pages.jpeg | manage | 100.6 K | 2024-03-26 - 05:24 | SkyeBischoff | Top 10 slowest page operations in ETM 7.0.3 1000 user run in 10,000 GC component |
