Enterprise Extensions promotion improvements in Rational Team Concert version 5.0 on z/OS

Authors: Lu Lu

Last updated: June 2, 2014

Build basis: Rational Team Concert v5.0 on z/OS

Introduction

This report provides performance data for the Enterprise Extensions promotion enhancements that were introduced in version 5.0. The tests compare Rational Team Concert version 5.0 sprint 4 with version 4.0.6. The objectives are to ensure that there is no regression and to verify the performance improvements of the promotion feature. In our scenario, we measured how long the 'finalize build maps' activity takes to complete with the 'publish build map links' option selected in the v5.0 sprint 4 and v4.0.6 promotion definitions. The 'finalize build maps' activity accounts for about 60% of the whole promotion time, so improving it speeds up promotion considerably.

Disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer's ability to evaluate and integrate them into the customer's operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment. Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user's job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here. This testing was done as a way to compare and characterize the differences in performance between different versions of the product. The results shown here should thus be looked at as a comparison of the contrasting performance between different versions, and not as an absolute benchmark of performance.

What our tests measure

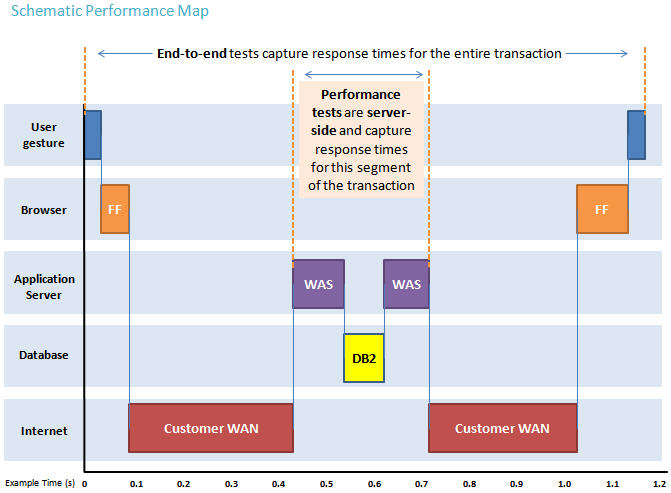

We predominantly use automated tooling such as Rational Performance Tester (RPT) to simulate a workload normally generated by client software such as the Eclipse client or web browsers. All response times listed are those measured by our automated tooling and not by a client. The accompanying diagram describes, at a very high level, which aspects of the entire end-to-end experience (human end user to server and back again) our performance tests simulate. The tests described in this article simulate a segment of the end-to-end transaction, as indicated in the middle of the diagram. Performance tests are server-side and capture response times for this segment of the transaction.

Findings

In this scenario, we compared the 'finalize build maps' activity during v5.0 sprint 4 and v4.0.6 promotions with the 'publish build map links' option selected.

- The run time of the 'finalize build maps' activity dropped from 3 minutes 30 seconds in v4.0.6 to 49.5 seconds in v5.0, an improvement of approximately 70 percent.
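As a quick sanity check, the reduction can be recomputed from the two durations quoted in this section. The following is a minimal Python sketch; the function name `percent_reduction` is ours for illustration and is not part of any Rational Team Concert tooling. With the rounded durations it yields roughly 76 percent, the same order as the approximately 70 percent stated in the report, whose figure presumably reflects the underlying measurements rather than these rounded values.

```python
# Durations quoted in the Findings section (rounded values from the report).
BEFORE_SECONDS = 3 * 60 + 30   # v4.0.6: 3 minutes 30 seconds
AFTER_SECONDS = 49.5           # v5.0 sprint 4


def percent_reduction(before: float, after: float) -> float:
    """Return the run-time reduction as a percentage of the original time."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


if __name__ == "__main__":
    reduction = percent_reduction(BEFORE_SECONDS, AFTER_SECONDS)
    print(f"'finalize build maps' run time reduced by {reduction:.1f}%")
```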

Topology

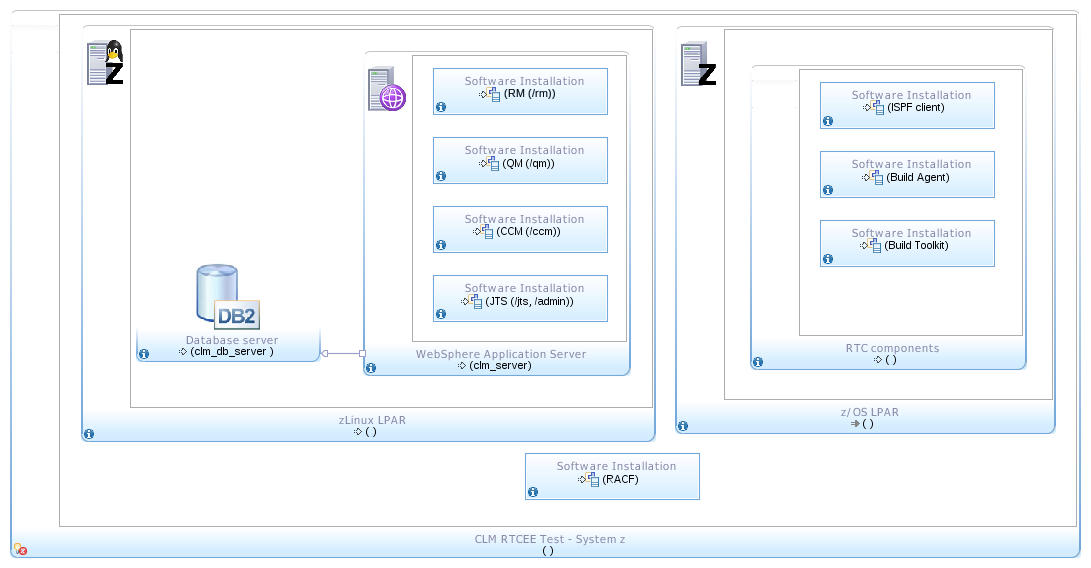

The tests were executed in a single-tier topology infrastructure like the one shown in the diagram.

The Jazz Team Server was set up based on WebSphere and DB2 on Linux for System z. The build machine with the Rational Build Agent was on z/OS.

| Test Environment | Details |
|---|---|
| Jazz Team Server | Operating system and version: Linux for System z (SUSE Linux Enterprise Server 10 (s390x)); System resource: 10 GB storage, 4 CPs (20000 MIPS, CPU type: 2097.710, CPU model: E12); CLM: 5.0 Sprint 4 (CALM-I20131211-0734), 4 GB heap size; DB2: 9.7.0.5; WAS: 8.5.5.1 |
| Build Forge Agent | Operating system and version: z/OS 01.12.00; System resource: 6 GB storage, 4 CPs (20000 MIPS, CPU type: 2097.710, CPU model: E12); Build System Toolkit: 5.0 Sprint 4 (RTC-I20131211-0354) |

Methodology

Monitoring tools: NMON was used for the Jazz Team Server, and RMF on z/OS was used for the Rational Build Agent. The sample project used in this test was Mortgage*100, which is 100 duplicates of the Mortgage sample application.

| Test Data | Value |
|---|---|
| Sample Project | Mortgage*100 |
| Assets | 600 COBOL programs, 400 copybooks, 200 BMS, 3 others |
| Total Assets | 1203 |

Test Scenarios

| Test Scenario | Description |
|---|---|
| Full Dependency Build and Promotion | 1) Request a full dependency build; 2) Select 'publish build map links'; 3) Request a promotion build to compare the 'finalize build maps' activity |

Results

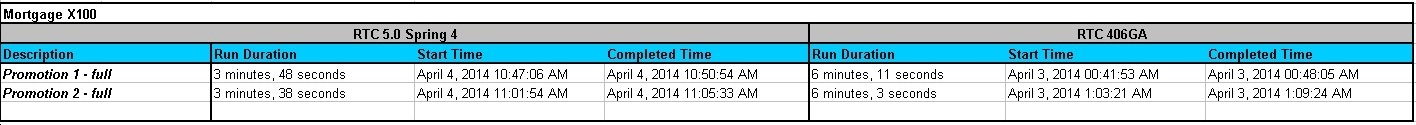

Run duration

These data tables show the run duration of the 'finalize build maps' activity during promotion for Rational Team Concert Enterprise Extensions version 5.0 sprint 4 and version 4.0.6. The build-time results show that the 'finalize build maps' activity improved by approximately 70 percent. Because this activity accounts for about 60 percent of the whole promotion time, promotion as a whole improved by approximately 40 percent.
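The step from a 70 percent improvement in one activity to roughly 40 percent overall can be worked through explicitly. The sketch below assumes that the other promotion steps take the same time in both versions, which the report does not state outright; the function name `overall_reduction` is hypothetical. Under that assumption, the overall saving is the activity's share of total time multiplied by its own reduction, about 42 percent here, which is consistent with the approximately 40 percent reported.

```python
def overall_reduction(step_share: float, step_reduction: float) -> float:
    """Fraction of total time saved when a single step gets faster.

    step_share:     fraction of total time spent in the step (0 to 1)
    step_reduction: fraction by which that step's time shrinks (0 to 1)
    Assumes every other step takes the same time as before.
    """
    if not (0.0 <= step_share <= 1.0 and 0.0 <= step_reduction <= 1.0):
        raise ValueError("both arguments must be between 0 and 1")
    return step_share * step_reduction


# Approximate figures taken from the report.
FINALIZE_SHARE = 0.60      # share of promotion time spent in 'finalize build maps'
FINALIZE_REDUCTION = 0.70  # reduction in 'finalize build maps' run time

if __name__ == "__main__":
    saved = overall_reduction(FINALIZE_SHARE, FINALIZE_REDUCTION)
    print(f"Estimated reduction in total promotion time: {saved:.0%}")
```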

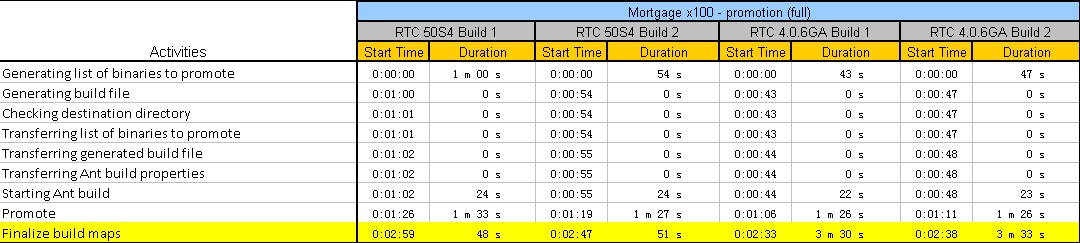

Build Activities

These data tables display the detailed run times of the build activities. In version 5.0 sprint 4, the 'finalize build maps' activity improved significantly: the activity charts show an improvement of about 70 percent.

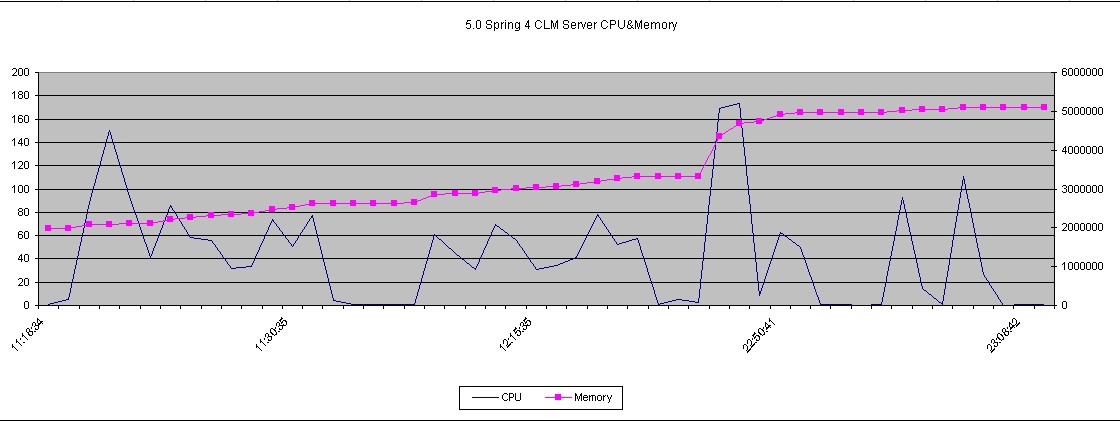

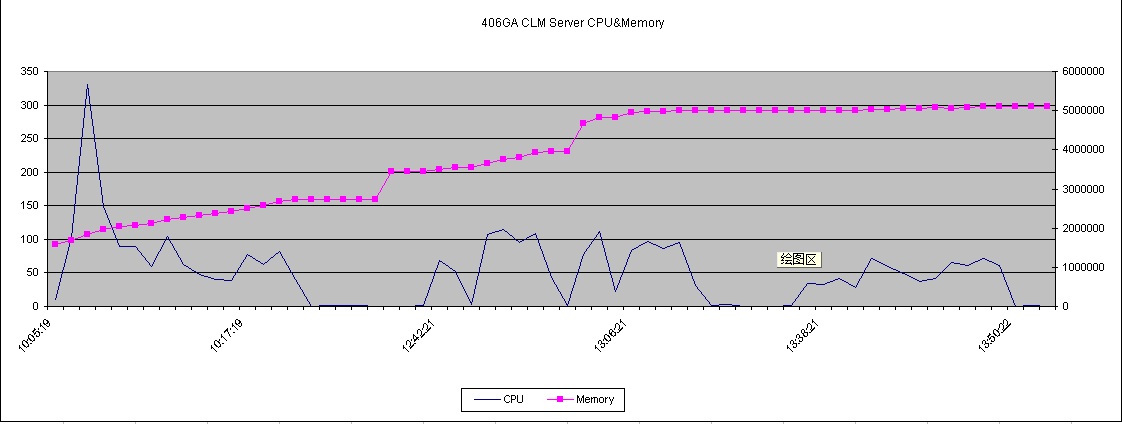

CPU and Memory for Jazz Team Server

These graphs show CPU and memory utilization for the server. The data was collected with the NMON tool.

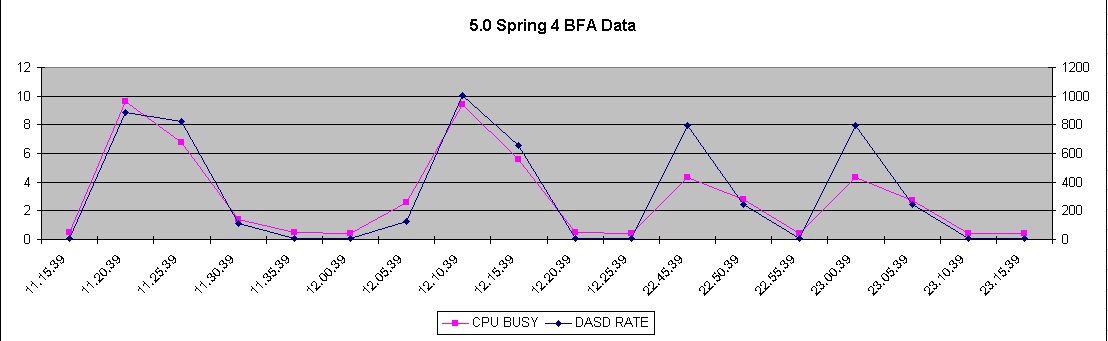

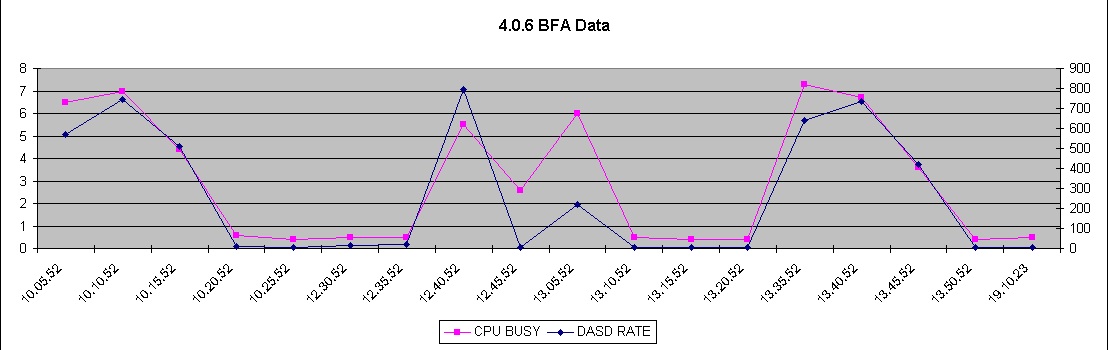

CPU and Memory for Build Agent

This graph shows CPU utilization and DASD rate data for the Build Forge Agent on the z/OS system. The data was collected with the RMF tool.

Appendix A - Key Tuning Parameters

| Product | Version | Highlights for configurations under test |
|---|---|---|
| IBM WebSphere Application Server | 8.5.5.1 | JVM settings (GC policy and arguments, max and init heap sizes): `-Xmn512m -Xgcpolicy:gencon -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 -Xmx4g -Xms4g`. OS configuration: `* hard nofile 120000`, `* soft nofile 120000`. Refer to http://pic.dhe.ibm.com/infocenter/clmhelp/v4r0m4/topic/com.ibm.jazz.install.doc/topics/c_special_considerations_linux.html for details. |
| DB2 | DB2 Enterprise Server 9.7.0.5 | Tablespace is stored on the same machine as IBM WebSphere Application Server |
| License Server | Same as CLM version | Hosted locally by JTS server |
| Network | | Shared subnet within test lab |

About the authors

LuLu

Questions and comments:

- What other performance information would you like to see here?

- Do you have performance scenarios to share?

- Do you have scenarios that are not addressed in documentation?

- Where are you having problems in performance?
