Collaborative Lifecycle Management performance report: RRC 4.0.6 release

Authors: Gustaf Svensson

Last updated: January 15, 2014

Build basis: Rational Requirements Composer 4.0.6

Page contents

- Introduction

- Findings

- Topology

- Methodology

- Results


- Create a collection

- Filter a query

- Open nested folders

- Manage folders

- Query by ID

- View collections

- Check suspect links

- Add comments to an artifact

- Open the project dashboard

- Create a multi-value artifact

- Create an artifact

- Show artifacts in a Tree view

- Open graphical artifacts

- Create and edit a storyboard

- Display the hover information for a collection

- Query by string

- Create a PDF report

- Create a Microsoft Word report


- Appendix A

Introduction

This report compares the performance of an unclustered Rational Requirements Composer version 4.0.6 deployment to the previous 4.0.5 release. The test objective is achieved in three steps:

- Run version 4.0.5 with the standard 1-hour test using 400 concurrent users.

- Run version 4.0.6 with the standard 1-hour test using 400 concurrent users.

- Run the test three times for each version and compare the resulting six tests with each other. Three tests per version are used to get a more accurate picture, because some variation between runs is expected.

Disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer's ability to evaluate and integrate them into the customer's operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment. Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user's job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here. This testing was done as a way to compare and characterize the differences in performance between different versions of the product. The results shown here should thus be looked at as a comparison of the contrasting performance between different versions, and not as an absolute benchmark of performance.

What our tests measure

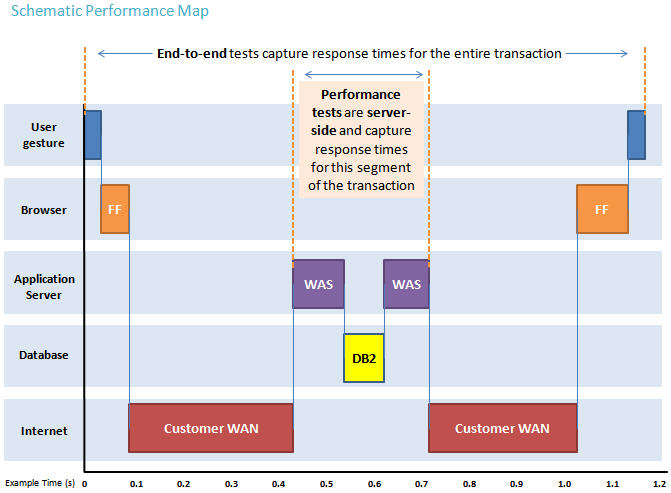

We predominantly use automated tooling such as Rational Performance Tester (RPT) to simulate a workload normally generated by client software such as the Eclipse client or web browsers. All response times listed are those measured by our automated tooling, not by a client. The diagram describes, at a very high level, which aspects of the entire end-to-end experience (human end-user to server and back again) our performance tests simulate. The tests described in this article simulate a segment of the end-to-end transaction, as indicated in the middle of the diagram. Performance tests are server-side and capture response times for this segment of the transaction.

Findings

Performance goals

- Verify that there are no performance regressions between the current release and the prior release with 400 concurrent users using the workload described below.

- The challenge we experience is the high variance of execution time within a single run. Depending on the load the server is under at any given moment, the execution time varies considerably. This could be avoided by testing the performance in a single-user environment, but the purpose of this test is to ensure that performance is acceptable even when the server is under heavy load. Using the median rather than the average when calculating the execution times within each test run lessens the impact of a few unusually slow measurements.

- When comparing the three old runs with the three new runs, we use the average of the three runs. Because the execution times of pages differ between runs, as can be observed in the charts below, a margin of error of 10% is generally tolerated, although faster-running pages typically have a higher margin of error (larger variation between runs).
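The comparison rule in these goals can be sketched in Python. This is a minimal illustration, assuming each page's response times are available as plain lists of milliseconds per run; the function names and sample numbers are hypothetical and are not part of RPT or of the authors' tooling. The sketch takes the median within each run, averages the three medians per version, and flags a page only when the new version is more than 10% slower.

```python
from statistics import mean, median

# The report tolerates roughly a 10% difference between versions.
TOLERANCE = 0.10


def run_median(samples_ms):
    """Median response time of one page within a single test run.

    The median is used instead of the mean so that a few slow samples,
    for example those taken while the server is under peak load, do not
    dominate the result.
    """
    return median(samples_ms)


def version_score(runs):
    """Average of the per-run medians across all runs of one version."""
    return mean(run_median(r) for r in runs)


def relative_change(old_runs, new_runs):
    """Relative change of the new version versus the old one.

    Positive values mean the new version is slower, negative values faster.
    """
    old = version_score(old_runs)
    return (version_score(new_runs) - old) / old


def is_regression(old_runs, new_runs, tolerance=TOLERANCE):
    """Flag a page only when it is slower by more than the tolerance."""
    return relative_change(old_runs, new_runs) > tolerance


if __name__ == "__main__":
    # Hypothetical timings (ms) for one page, three runs per version.
    # These are illustrative values, NOT measurements from this report.
    old_runs = [[480, 500, 510, 2400], [490, 505, 520], [470, 495, 500, 515]]
    new_runs = [[500, 520, 530], [510, 525, 3100, 540], [495, 515, 520]]

    # A single slow outlier pulls the mean of a run far more than its median.
    print("old run 1 mean:", mean(old_runs[0]), "median:", run_median(old_runs[0]))

    change = relative_change(old_runs, new_runs)
    print(f"change: {change:+.1%}, regression: {is_regression(old_runs, new_runs)}")
```

With these sample values, the single 2,400 ms outlier moves the first old run's mean to 972.5 ms while its median stays at 505 ms, and the overall change works out to about +4%, inside the 10% tolerance.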

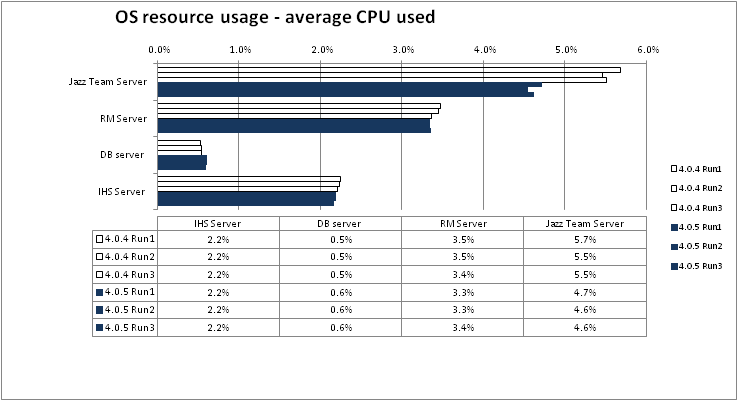

Findings

- RPT report shows similar response times for 4.0.6 and 4.0.5.

- Comparing nmon data for 4.0.6 and 4.0.5 shows similar CPU, memory and disk utilization on the application servers and the database server.

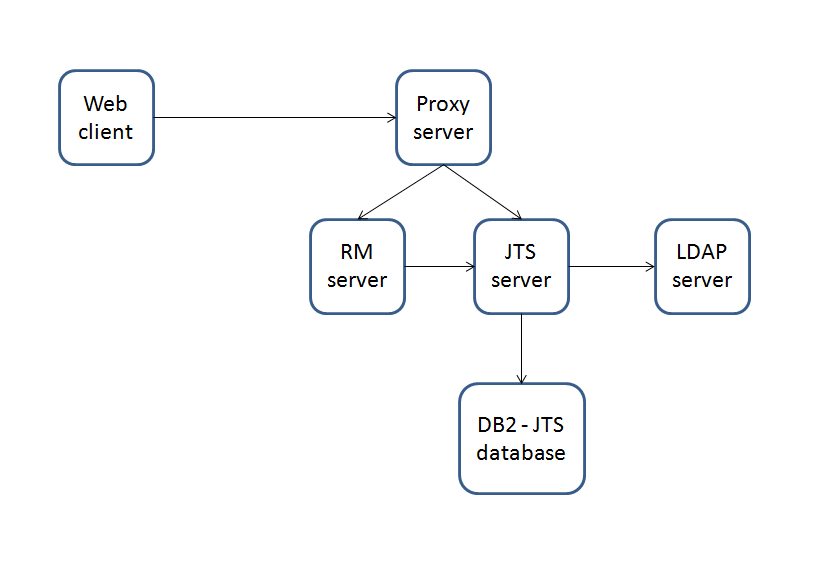

Topology

The topology under test is based on Standard Topology (E1) Enterprise - Distributed / Linux / DB2. The specifications of machines under test are listed in the table below. Server tuning details are listed in Appendix A.

| Function | Number of Machines | Machine Type | CPU / Machine | Total # of CPU Cores/Machine | Memory/Machine | Disk | Disk capacity | Network interface | OS and Version |
|---|---|---|---|---|---|---|---|---|---|
| IBM HTTP Server and WebSphere Plugin | 1 | IBM System x3250 M4 | 1 x Intel Xeon E3-1240 3.4GHz (quad-core) | 8 | 16GB | RAID 1 -- SAS Disk x 2 | 279GB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.3 (Santiago) |
| JTS Server | 1 | IBM System x3550 M4 | 2 x Intel Xeon E5-2640 2.5GHz (six-core) | 24 | 32GB | RAID 5 -- SAS Disk x 4 | 279GB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.3 (Santiago) |
| RRC Server | 1 | IBM System x3550 M4 | 2 x Intel Xeon E5-2640 2.5GHz (six-core) | 24 | 32GB | RAID 5 -- SAS Disk x 4 | 279GB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.3 (Santiago) |
| Database Server | 1 | IBM System x3650 M4 | 2 x Intel Xeon E5-2640 2.5GHz (six-core) | 24 | 64GB | RAID 10 -- SAS Disk x 16 | 279GB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.3 (Santiago) |
| RPT Workbench | 1 | VM image | 2 x Intel Xeon X7550 CPU (1-Core 2.0GHz 64-bit) | 2 | 6GB | SCSI | 80GB | Gigabit Ethernet | Microsoft Windows Server 2003 R2 Standard Edition SP2 |
| RPT Agent | 1 | xSeries 345 | 4 x Intel Xeon X3480 CPU (1-Core 3.20GHz 32-bit) | 4 | 3GB | SCSI | 70GB | Gigabit Ethernet | Microsoft Windows Server 2003 Enterprise Edition SP2 |
| RPT Agent | 1 | xSeries 345 | 4 x Intel Xeon X3480 CPU (1-Core 3.20GHz 32-bit) | 4 | 3GB | RAID 1 - SCSI Disk x 2 | 70GB | Gigabit Ethernet | Microsoft Windows Server 2003 Enterprise Edition SP2 |
| RPT Agent | 1 | Lenovo 9196A49 | 1 x Intel Xeon E6750 CPU (2-Core 2.66GHz 32-bit) | 2 | 2GB | SATA | 230GB | Gigabit Ethernet | Microsoft Windows Server 2003 Enterprise Edition SP2 |
| Network switches | N/A | Cisco 2960G-24TC-L | N/A | N/A | N/A | N/A | N/A | Gigabit Ethernet | 24 Ethernet 10/100/1000 ports |

Network connectivity

All server machines and test clients are located on the same subnet. The LAN has 1000 Mbps of maximum bandwidth and less than 0.3 ms latency in ping.

Data volume and shape

The artifacts were distributed between 40 small projects, 10 medium projects and one large project, for a total of 200,589 artifacts. The repository contained the following data:

- 141 modules

- 14,500 module artifacts

- 3,203 folders

- 434 collections

- 274 reviews

- 40,798 comments

- 520 public tags

- 110 private tags

- 15,350 terms

- 22,778 links

- 360 views

- Database size = 20 GB

- JTS index size = 9 GB

The large project contained the following data:

- 11 modules

- 1,160 folders

- 78,842 requirement artifacts

- 106 collections

- 64 reviews

- 16,897 comments

- 300 public tags

- 50 private tags

- 9,006 terms

- 15,594 links

- 200 views

Methodology

Rational Performance Tester was used to simulate the workload that the web client would generate. Each user completed a random use case from a set of available use cases. A Rational Performance Tester script was created for each use case. The scripts are organized by pages, and each page represents a user action. Based on real customer use, the test scenario provides a ratio of 70% reads and 30% writes. The users completed use cases at a rate of 30 pages per hour per user. Each performance test runs for 60 minutes after all of the users are activated in the system.

Test cases and workload characterization

| Use case | Description | % of Total Workload |
|---|---|---|
| Login | Connect to the server using server credentials. | None |
| Create a collection | Create collections with 10 artifacts. | 6 |
| Filter a query | Run a query that has 100 results and open 3 levels of nested folders. | 8 |
| Open nested folders | Create a review and complete the review process. | 4 |
| Manage folders | Create a folder, move it to a new location, and then delete the folder. | 1 |
| Query by ID | Search for a specific ID in the repository. | 8 |
| View collections | View collections that contain 100 artifacts from the collections folders. | 13 |
| Check suspect links | Open an artifact that has suspect links. | 6 |
| Add comments to an artifact | Open a requirement that has 100 comments and create a comment addressed to 8 people on the team. | 8 |
| Open the project dashboard | Open the project and dashboard for the first time. | 7 |
| Create a multi-value artifact | Create a multi-value artifact and then add a multi-value attribute. | 2 |
| Create an artifact | Create a requirement that contains a table, an image and rich text. Edit an artifact that has 100 enumerated attributes and modify an attribute. | 2 |
| Show artifacts in a Tree view | Open a folder that contains artifacts with links and show the data in a tree view. | 8 |
| Open graphical artifacts | Open business process diagrams, use cases, parts, images, sketches and storyboards. | 5 |
| Create and edit a storyboard | Create and edit a storyboard. | 4 |
| Display the hover information for a collection | Open a collection that contains 100 artifacts and hover over the Artifacts page. | 4 |
| Query by string | Search for a string that returns 30 matched items. | 10 |
| Create a PDF report | Generate a 50-artifact PDF report. | 2 |
| Create a Microsoft Word report | Generate a 100-artifact Microsoft Word report. | 2 |
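The workload model can be sketched as follows, assuming the percentages act as selection weights for each simulated user's next use case. This is a hypothetical Python illustration, not how Rational Performance Tester schedules are actually configured; the names WORKLOAD_MIX, expected_pages and pick_use_case are made up for the example. It checks that the mix adds up to 100%, computes the nominal load of 400 users × 30 pages per hour = 12,000 pages during the 60-minute measurement, and draws use cases in proportion to their share.

```python
import random
from collections import Counter

# "% of Total Workload" column from the use case table. Login is left out
# because the table gives it no share of the mix.
WORKLOAD_MIX = {
    "Create a collection": 6,
    "Filter a query": 8,
    "Open nested folders": 4,
    "Manage folders": 1,
    "Query by ID": 8,
    "View collections": 13,
    "Check suspect links": 6,
    "Add comments to an artifact": 8,
    "Open the project dashboard": 7,
    "Create a multi-value artifact": 2,
    "Create an artifact": 2,
    "Show artifacts in a Tree view": 8,
    "Open graphical artifacts": 5,
    "Create and edit a storyboard": 4,
    "Display the hover information for a collection": 4,
    "Query by string": 10,
    "Create a PDF report": 2,
    "Create a Microsoft Word report": 2,
}

USERS = 400                    # concurrent users in each test
PAGES_PER_USER_PER_HOUR = 30   # pacing rate stated in the methodology
TEST_HOURS = 1                 # measured period after all users are active


def expected_pages(users=USERS, rate=PAGES_PER_USER_PER_HOUR, hours=TEST_HOURS):
    """Nominal number of pages requested during the measured period."""
    return users * rate * hours


def pick_use_case(rng):
    """Choose the next use case, treating the percentages as weights."""
    names = list(WORKLOAD_MIX)
    weights = list(WORKLOAD_MIX.values())
    return rng.choices(names, weights=weights, k=1)[0]


if __name__ == "__main__":
    assert sum(WORKLOAD_MIX.values()) == 100, "mix should cover 100% of the workload"
    print("nominal pages per test:", expected_pages())

    rng = random.Random(42)  # fixed seed so the simulation is repeatable
    counts = Counter(pick_use_case(rng) for _ in range(10_000))
    for name, share in WORKLOAD_MIX.items():
        # counts / 10,000 draws * 100 gives the simulated percentage
        print(f"{name:48s} target {share:3d}%  simulated {counts[name] / 100:5.1f}%")
```

Weighted random selection keeps each user's sequence unpredictable while the aggregate mix converges on the target percentages, which matches the report's description of users completing random use cases.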

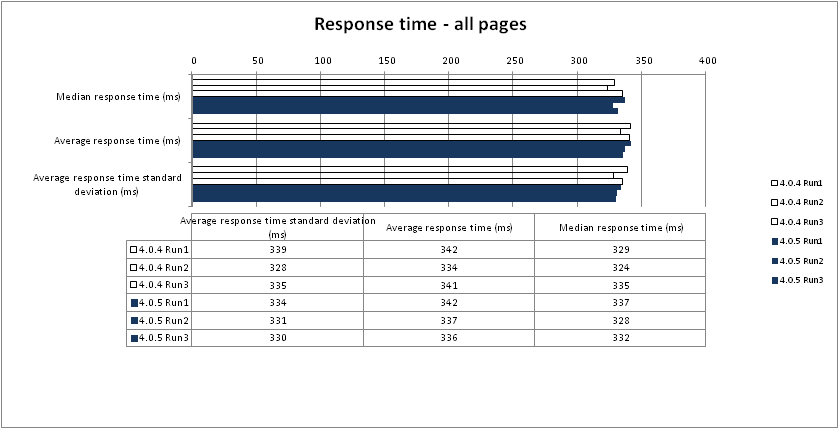

Response time comparison

The median response time provided more even results than the average response time. Because of the high variance between tests, where some tasks at times take much longer to run (for example, when the server is under heavy load), the average response time is less predictive. Both the median and average values are included in the following tables and charts for comparison. In the repository that contained 200,000 artifacts with 400 concurrent users, no obvious regression was shown when comparing response times between runs. The numbers in the following charts include all of the pages for all of the scripts that ran.

Results

Garbage collection

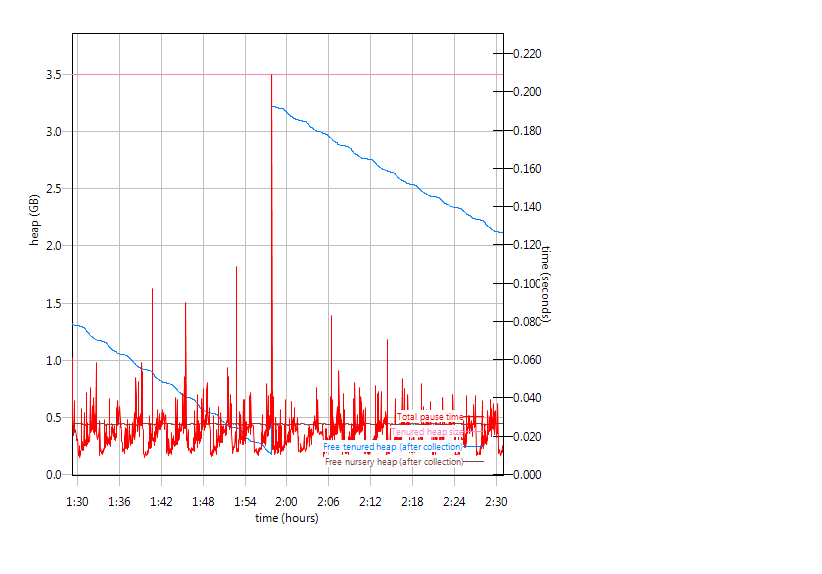

Verbose garbage collection is enabled to create the GC logs. The GC logs show very little variation between runs, and there is no discernible difference between versions. The following examples show the graph from the GC log for the first test run of each application.

RM


RM - Observation: The graph looks the same as in 4.0.4 and 4.0.5.

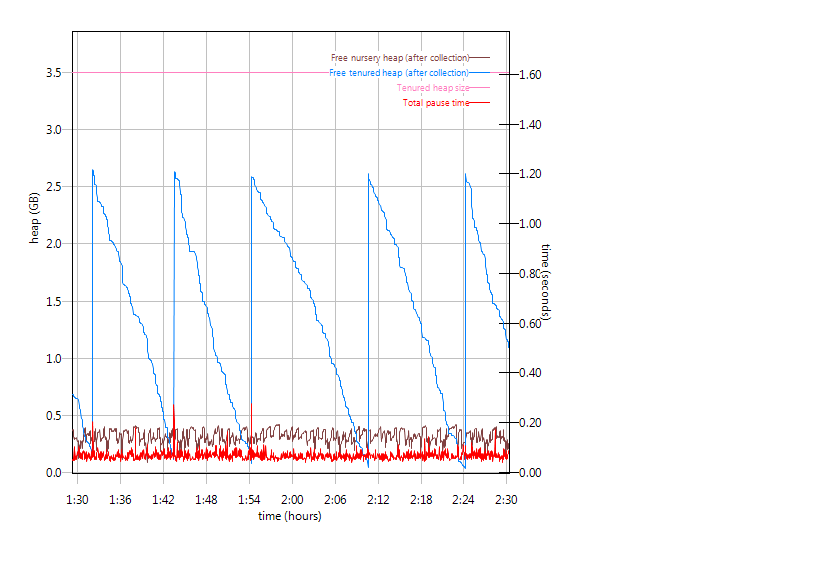

JTS

JTS - Observation: The graph looks the same as in 4.0.4 and 4.0.5. The high frequency of collections is normal behavior for JTS.

Create a collection

- Observation: Two of the requests are slower: "Save the collection" (on average 29ms slower) and "Click Done and close the collection" (on average 16ms slower). This is offset by four other requests being faster.


Filter a query

- Observation: None


Open nested folders

- Observation: The open project request is marginally slower, but this is offset by other requests being faster.


Manage folders

- Observation: Here, "Open the project" is an example of the high variation we sometimes see between runs. Note that opening the project is not always slower.


Query by ID

- Observation: "Open the project" is on average 8% (40ms) slower. This particular scenario is where opening the project has the slowest performance.


View collections

- Observation: "Open the project" is on average 6% (30ms) slower, with small improvements in opening folders and viewing artifacts.


Check suspect links

- Observation: Similar results between runs, with small variations.


Add comments to an artifact

- Observation: Ideal comparison with small variance.


Open the project dashboard

- Observation: None


Create a multi-value artifact

- Observation: None


Create an artifact

- Observation: "Edit the artifact" is 16ms slower, which is offset by other gestures being faster.


Show artifacts in a Tree view

- Observation: Displaying the artifacts in a tree view is on average 6% faster (92ms difference).


Open graphical artifacts

- Observation: Our "best" graph. Almost everything is a little faster.


Create and edit a storyboard

- Observation: None


Display the hover information for a collection

- Observation: Although none of the open project requests for the three runs in 4.0.5 are slower than in 4.0.4, the average is 5% (29ms) slower.


Query by string

- Observation: None


Create a PDF report

- Observation: None


Create a Microsoft Word report

- Observation: None


Appendix A

| Product | Version | Highlights for configurations under test |
|---|---|---|
| IBM HTTP Server for WebSphere Application Server | 8.5.0.1 | IBM HTTP Server functions as a reverse proxy server implemented via the Web server plug-in for WebSphere Application Server. Configuration details can be found in the CLM infocenter. HTTP server (httpd.conf): |
| IBM WebSphere Application Server Network Deployment | 8.5.0.1 | JVM settings: -XX:MaxDirectMemorySize=1g -Xgcpolicy:gencon -Xmx4g -Xms4g -Xmn512m -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 Thread pools: |
| DB2 | DB2 10.1 | |
| LDAP server | | |
| License server | Hosted locally by JTS server | |
| RPT workbench | 8.2.1.5 | Defaults |
| RPT agents | 8.2.1.5 | Defaults |
| Network | Shared subnet within test lab | |

For more information

About the authors

Gustaf Svensson

Questions and comments:

- What other performance information would you like to see here?

- Do you have performance scenarios to share?

- Do you have scenarios that are not addressed in documentation?

- Where are you having problems in performance?
