Introduction

In the IBM Engineering Lifecycle Management (ELM) 7.0 release, an IBM Engineering Systems Design Rhapsody Model Manager (RMM) server is deployed as an extension to IBM Engineering Workflow Management (EWM). The purpose of this document is to show the performance impact of combining the two servers.

Summary of results

- For the workload we tested, adding the RMM load increased CPU utilization on the combined server by about 5%, with very little impact on EWM service times. Database disk utilization increased by about 2%.

Disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer's ability to evaluate and integrate them into the customer's operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment.

Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user's job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here.

This testing was done to compare and characterize the differences in performance between different versions of the product. The results shown here should therefore be read as a comparison between versions, not as an absolute benchmark of performance.

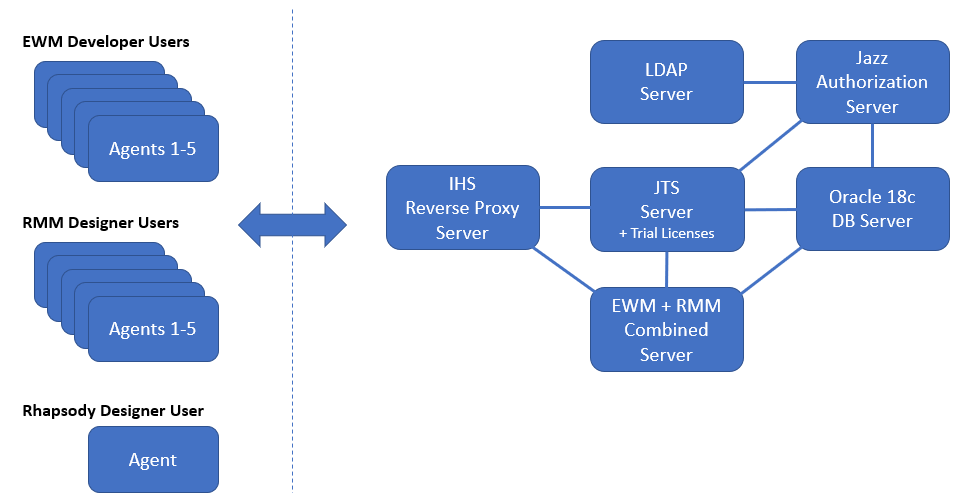

Topology

The topology we used in our performance testing is shown below.

| Function | Number of Machines | Machine Type | CPU / Machine | Total # of CPU vCores/Machine | Memory/Machine | Disk | Disk capacity | Network interface | OS and Version |
|---|---|---|---|---|---|---|---|---|---|
| Oracle DB Server | 1 | Physical |  | 40 | 64G RAM |  |  | Gigabit Ethernet | Red Hat Enterprise Linux Server 7.6 |
| EWM + RMM Combined Server | 1 | Physical |  | 24 | 32G RAM; 8G Heap |  |  | Gigabit Ethernet | Red Hat Enterprise Linux Server 6.10 |
| JTS Server | 1 | VMware VM | 2 x Intel Xeon E5-2665 2.4GHz (dual-core) | 4 | 16G RAM; 8G Heap | N/A |  | Gigabit Ethernet | Red Hat Enterprise Linux Server 7.1 |
| Jazz Authorization Server | 1 | VMware VM | 2 x Intel Xeon E5-2665 2.4GHz (dual-core) | 4 | 16G |  |  | Gigabit Ethernet | Red Hat Enterprise Linux Server 7.1 |
| LDAP Server | 1 | VMware VM | N/A | N/A | N/A | N/A | N/A | Gigabit Ethernet | N/A |
| IHS Server | 1 | VMware VM | 2 x Intel Xeon E5-2698 2.3GHz | 4 | 16G | N/A |  | Gigabit Ethernet | Red Hat Enterprise Linux Server 7.6 |
| RTC Developer Agents | 5 | VMware VM | 2 x Intel Xeon E5-2698 2.3GHz (dual-core) | 4 | 16G | N/A |  | Gigabit Ethernet | Red Hat Enterprise Linux Server 7.1 |
| RMM Designer Agents | 5 | VMware VM | 2 x Intel Xeon E5-2698 2.3GHz (dual-core) | 4 | 16G | N/A |  | Gigabit Ethernet | Windows Server Enterprise 2016 |
| Rhapsody Designer Agents | 1 | VMware VM | 2 x Intel Xeon E5-2698 2.3GHz (dual-core) | 4 | 16G | N/A |  | Gigabit Ethernet | Windows Server Enterprise 2016 |

N/A: Not applicable.

vCores = Cores with hyperthreading

Network connectivity

All server machines and test clients are located on the same subnet. The LAN has a maximum bandwidth of 1000 Mbps and a ping latency of less than 0.3 ms.

Test methodology

Before each test, the environment was reset. Each test ran long enough for 500 users to ramp up, settle in, work at a steady pace, and ramp down. Performance data was collected during a one-hour steady-state period. Each test was repeated and the results were compared. Note: EWM web service times show an acceptable degree of run-to-run variation, so to avoid false alarms when comparing results, the response time for each service was rounded up by 50 ms.
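The rounding step can be sketched as a small shell function. This is a minimal sketch, assuming that "rounded up by 50 ms" means rounding each service's response time up to the next multiple of 50 ms before comparing runs; the function names round_up_50 and compare_service are hypothetical and are not part of the test harness used for this report.

```shell
#!/bin/sh
# Hypothetical helper: round a response time in whole milliseconds up to the
# next multiple of 50 ms (assumed meaning of "rounded up by 50 ms").
round_up_50() {
  ms=$1
  echo $(( ($ms + 49) / 50 * 50 ))
}

# Hypothetical helper: flag a service only when its rounded time in the new
# run is higher than its rounded time in the baseline run.
# Arguments: service name, baseline time (ms), new time (ms).
compare_service() {
  name=$1
  base=$(round_up_50 "$2")
  new=$(round_up_50 "$3")
  if [ "$new" -gt "$base" ]; then
    echo "$name: regression ($base ms -> $new ms)"
  else
    echo "$name: no change flagged ($base ms -> $new ms)"
  fi
}
```

For example, with made-up times of 120 ms and 140 ms for the same service, both round up to 150 ms and nothing is flagged, while 120 ms versus 160 ms (150 ms versus 200 ms after rounding) is reported as a regression. Rounding both runs to the same granularity is what absorbs small run-to-run noise.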

Test automation and workload characterization

Rational Performance Tester (RPT) was used to simulate the workload generated through the web client. Each user completed a random use case from a set of available use cases. An RPT script was created for each use case. The scripts are organized by pages, and each page represents a user action.

The workload is role based: each of the areas defined under the sequence of actions is separated into its own user group within an RPT schedule.

The User roles, test cases and workload characterization section describes the configuration of the RPT schedule.

User Roles

| User role | % of Total | Related Actions |
|---|---|---|
| QE Manager | 8 | Create test plan, Browse test plans and test cases, Browse test script, Simple test plan copy, Search defect, View dashboard |
| Test Lead | 19 | Edit test environment, Edit test plan, Create test case, Bulk edit of test cases, Browse test script, Test Execution, Search defect, Edit test suite |
| Tester | 68 | Create defect, Modify defect, Search defect, Edit test case, Create test script, Edit test script, Test Execution, Browse test execution record, Browse test case result, Browse test suite result |
| Dashboard Viewer | 5 | View dashboard (with login and logout) |
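As an illustration of how the role percentages translate into simulated users, the following sketch splits the 500 users mentioned in the test methodology across the four roles. It assumes the "% of Total" column is applied to all 500 users; TOTAL_USERS and users_for_role are hypothetical names, not RPT schedule settings.

```shell
#!/bin/sh
# Sketch: split the simulated users across RPT user groups using the
# "% of Total" column. TOTAL_USERS and users_for_role are hypothetical names.
TOTAL_USERS=500

# Print the integer share of TOTAL_USERS for a percentage
# (shell arithmetic truncates toward zero).
users_for_role() {
  echo $(( $TOTAL_USERS * $1 / 100 ))
}

for entry in "QE Manager:8" "Test Lead:19" "Tester:68" "Dashboard Viewer:5"; do
  role=${entry%:*}   # text before the colon
  pct=${entry##*:}   # number after the colon
  echo "$role: $(users_for_role "$pct") users"
done
```

Running it prints 40 QE Manager, 95 Test Lead, 340 Tester, and 25 Dashboard Viewer users, which sum to 500. Because shell arithmetic truncates, percentages that do not divide the total evenly would leave a few users unassigned.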

Data volume and shape

Performance results

Response times

In the response time charts that follow, times are in milliseconds; when interpreting the data, smaller is better.

Browse Test Case Execution Records


Resource utilization

OS Resource Utilization - Overview

CPU, Disk, Memory, and Network utilization charts

Garbage collection - JVM GC Charts

For JVM parameters, refer to Appendix D: Key configuration parameters.

Verbose garbage collection (GC) is enabled by default to log GC activity. An example of the GC log output for each application follows.

WAS Liberty JVM Garbage Collection Charts for RQM and JTS Servers

QM and JTS garbage collection charts

Appendix D: Key configuration parameters

| Product | Version | Highlights for configurations under test |
|---|---|---|
| HAProxy | 1.5.18 | HAProxy functions as a reverse proxy server. Configuration details can be found in the HAProxy Configuration Manual. haproxy.cfg: timeout http-request 20s; timeout connect 20s; timeout client 5m; timeout server 5m. OS configuration: max user processes = 65536. |
| IBM WebSphere Application Server Liberty | 18.0.0.3 | JVM settings: memory settings specified in server.startup before the line export JAVA_OPTS: JAVA_OPTS="$JAVA_OPTS -Xmx8g"; JAVA_OPTS="$JAVA_OPTS -Xms8g"; JAVA_OPTS="$JAVA_OPTS -Xmn2g"; JAVA_OPTS="$JAVA_OPTS -XX:MaxDirectMemorySize=2G". SDK version: IBM WebSphere SDK for Java Technology Edition Version 8.0.5.25. OS configuration (system-wide resources for the app server process owner): max user processes = 65536; open files = 65536. |
| DB2 | ESE 10.5.0.9 | See Appendix E: Database Server Tuning |
| LDAP server | N/A | N/A |
| License server | N/A | N/A |
| RPT workbench | 9.0.0.2 | Defaults |
| RPT agents | 9.0.0.2 | Defaults |
| Network |  | Shared subnet within test lab |
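The OS limits listed for the app server process owner (max user processes and open files, both 65536) can be checked with a small helper. This is a sketch, assuming a POSIX shell; check_limit and REQUIRED_LIMIT are hypothetical names, and the helper only compares a value you pass in against 65536.

```shell
#!/bin/sh
# Sketch: compare a resource limit against the 65536 value used in this test
# setup. check_limit and REQUIRED_LIMIT are hypothetical names.
REQUIRED_LIMIT=65536

# Arguments: a label for the limit and its current value
# (a number or the word "unlimited").
check_limit() {
  name=$1
  value=$2
  if [ "$value" = "unlimited" ]; then
    echo "$name: ok (unlimited)"
  elif [ "$value" -ge "$REQUIRED_LIMIT" ]; then
    echo "$name: ok ($value)"
  else
    echo "$name: below $REQUIRED_LIMIT ($value)"
  fi
}
```

In bash, run as the app server process owner, you might pass the current soft limits, for example check_limit "max user processes" "$(ulimit -u)" and check_limit "open files" "$(ulimit -n)". On Red Hat Enterprise Linux, persistent values are typically set through nproc and nofile entries in /etc/security/limits.conf.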

About the author(s):

Sentella Cystrunk is a performance engineer focusing on the performance and scalability of products in the Enterprise Lifecycle Management family.

Questions and comments:

- What other performance information would you like to see here?

- Do you have performance scenarios to share?

- Do you have scenarios that are not addressed in documentation?

- Where are you having problems in performance?


Performance impact of combining Rhapsody Model Manager and Engineering Workflow Management servers
