Introduction

The Data Manager ETL is a tool that extracts, transforms, and loads CLM operational data into the data warehouse, which the CLM Reporting features can output as complex statistics and trend charts. You can use the Data Manager ETL to load historical operational data from one or many CLM servers.

The Data Manager ETL has an initial load and delta loads. The initial load is the first time that all CLM data is loaded. Its duration depends on the volume of data: if the volume is large, the initial load can take a day or more to complete. The delta loads load incremental changes to the data. The duration of a delta load depends on the time interval between loads and the amount of change to the data during that interval. In general, delta loads run daily or weekly. If no big changes occur during an interval, the delta load can be completed in hours. However, if there are many incremental changes or there are many CLM servers, a delta load can take much longer.

In the CLM 4.0.4 release, the development team made significant improvements to the Data Manager ETL for the Change and Configuration Management (CCM) and the Requirements Management (RM) applications.

This case study compares the performance between CLM 4.0.3 and CLM 4.0.4 for the CCM and RM Data Manager ETL, with identical data in the same test environment. Based on the test data, the Data Manager ETL performance improved significantly between releases.

Disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer's ability to evaluate and integrate them into the customer's operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment.

Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user's job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here.

This testing was done as a way to compare and characterize the differences in performance between different versions of the product. The results shown here should thus be looked at as a comparison of the contrasting performance between different versions, and not as an absolute benchmark of performance.

What our tests measure

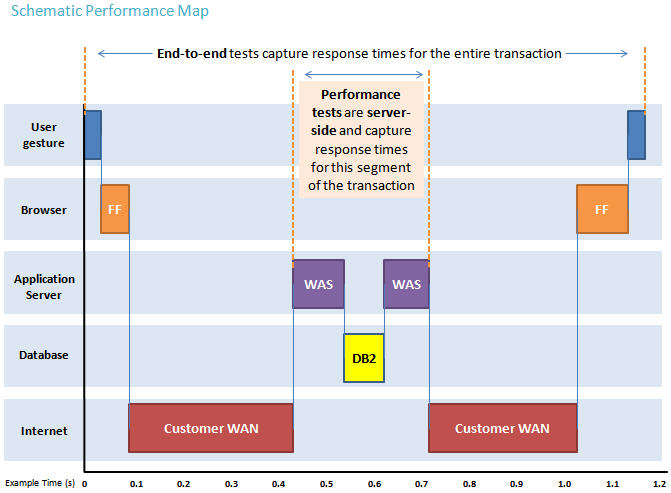

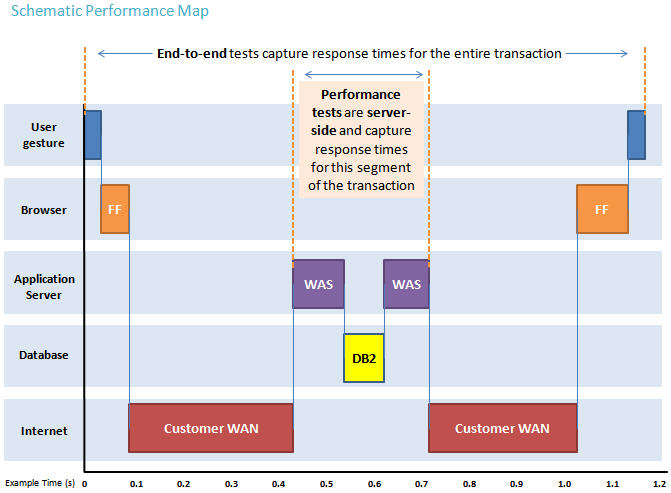

We use predominantly automated tooling such as Rational Performance Tester (RPT) to simulate a workload normally generated by client software such as the Eclipse client or web browsers. All response times listed are those measured by our automated tooling and not a client.

The diagram in this section shows, at a very high level, which aspects of the entire end-to-end experience (human end-user to server and back again) our performance tests simulate. The tests described in this article simulate a segment of the end-to-end transaction, as indicated in the middle of the diagram. Performance tests are server-side and capture response times for this segment of the transaction.

Findings

The performance of the Data Manager ETL improved significantly from CLM 4.0.3 to CLM 4.0.4 based on the test data. The initial ETL load for CCM improved about 30% (100,000 work items with 2 history entries per work item). The delta load for CCM improved more than 10% (10% increment of work items and history). The initial ETL load for RM improved about 40% (400,000 requirements). The delta ETL load for RM also improved about 40% (10% increment of requirements).

Topology

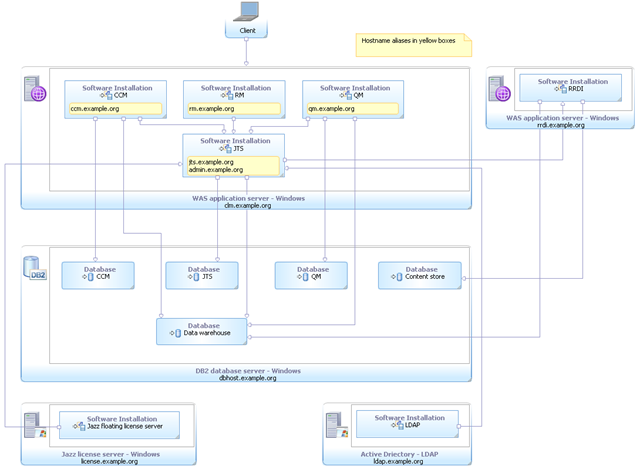

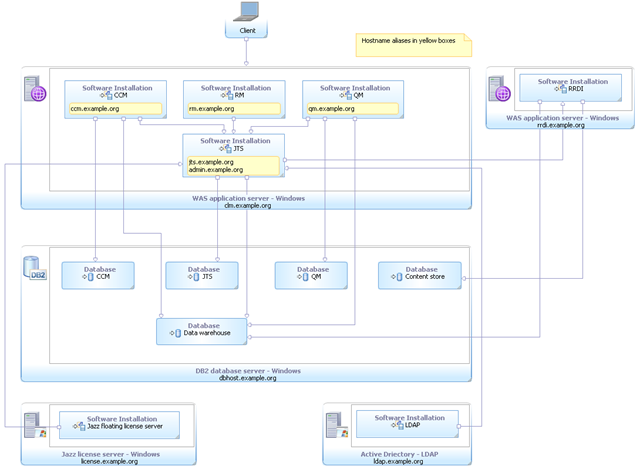

The tests focused on a distributed server setup that is aligned with CLM Departmental topology D1 (see Figure 1). Unlike the D1 topology, the IBM Tivoli Directory Server was used for user authentication in these tests.

Figure 1: Departmental - Single application server, Windows/DB2

This case study used the same test environment and same test data to test the ETL performance for CLM 4.0.3 and CLM 4.0.4. Test data was generated using automation. The test environment for the latest release was upgraded from the earlier one by using the CLM upgrade process. To create four VMs, one X3550 M3 7944J2A (at 2.67 GHz, 48 GB RAM, and 12 physical cores) was used. In the topology, four CLM applications (JTS, CCM, QM, and RM) were installed on VM1; the CLM repository was installed on VM2; the Data Manager ETL tool was installed on VM3; and the data warehouse was installed on VM4.

The same software configuration was used for both CLM 4.0.3 and CLM 4.0.4. The WebSphere Application Server was version 8.5.1, 64-bit. The database server was IBM DB2 9.7.5, 64-bit. The Rational Reporting for Development Intelligence tool was version 2.0.4. The Jazz Team Server, CCM, QM, and RM applications co-existed in the same WebSphere Application Server profile. The JVM settings were as follows:

-verbose:gc -XX:+PrintGCDetails -Xverbosegclog:gc.log -Xgcpolicy:gencon

-Xmx8g -Xms8g -Xmn1g -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000

-XX:MaxDirectMemorySize=1g

IBM Tivoli Directory Server was used for managing user authentication.

Topology 1 (DB2), ESX Server1 (36 GB memory)

| Server | CLM Server | DB Server | RRDI Data Manager |
| --- | --- | --- | --- |
| Host name | CLMsvr1 | DBsvr1 | DMsvr1 |
| CPU | 4 vCPU | 4 vCPU | 2 vCPU |
| Memory | 16 GB | 12 GB | 4 GB |
| Hard disk | 120 GB | 100 GB | 80 GB |
| OS | Win2008 R2 64-bit | Win2008 R2 64-bit | Win2008 R2 64-bit |
| Configuration 1 | CLM 4.0.3, WAS 8.5.1 | DB2 v9.7 fp5 | RRDI Dev Tools 2.0.3 |
| Configuration 2 | CLM 4.0.4, WAS 8.5.1 | DB2 v9.7 fp5 | RRDI Dev Tools 2.0.4 |

Data shape

| Application | Record type | Initial load | Delta load |
| --- | --- | --- | --- |
| CCM | APT_ProjectCapacity | 1 | 1 |
| | APT_TeamCapacity | 0 | 0 |
| | Build | 0 | 0 |
| | Build Result | 0 | 0 |
| | Build Unit Test Result | 0 | 0 |
| | Build Unit Test Events | 0 | 0 |
| | Complex CustomAttribute | 0 | 0 |
| | Custom Attribute | 0 | 0 |
| | File Classification | 3 | 3 |
| | First Stream Classification | 3 | 3 |
| | History Custom Attribute | 0 | 0 |
| | SCM Component | 2 | 0 |
| | SCM WorkSpace | 2 | 1 |
| | WorkItem | 100026 | 10000 |
| | WorkItem Approval | 100000 | 10000 |
| | WorkItem Dimension Approval Description | 100000 | 10000 |
| | WorkItem Dimension | 3 | 0 |
| | WorkItem Dimension Approval Type | 3 | 0 |
| | WorkItem Dimension Category | 2 | 0 |
| | WorkItem Dimension Deliverable | 0 | 0 |
| | WorkItem Dimension Enumeration | 34 | 0 |
| | WorkItem Dimension Resolution | 18 | 0 |
| | Dimension | 68 | 0 |
| | WorkItem Dimension Type | 8 | 0 |
| | WorkItem Hierarchy | 0 | 0 |
| | WorkItem History | 242926 | 20100 |
| | WorkItem History Complex Custom Attribute | 0 | 0 |
| | WorkItem Link | 112000 | 10000 |
| | WorkItem Type Mapping | 4 | 0 |
| RM | CrossAppLink | 0 | 0 |
| | Custom Attribute | 422710 | 51010 |
| | Requirement | 422960 | 51150 |
| | Collection Requirement Lookup | 1110 | 21000 |
| | Module Requirement Lookup | 22000 | 2000 |
| | Implemented BY | 100 | 0 |
| | Request Affected | 5988 | 0 |
| | Request Tracking | 0 | 0 |
| | REQUICOL_TESTPLAN_LOOKUP | 0 | 0 |
| | REQUIREMENT_TESTCASE_LOOKUP | 0 | 0 |
| | REQUIREMENT_HIERARCHY | 12626 | 0 |
| | REQUIREMENT_EXTERNAL_LINK | 0 | 0 |
| | RequirementsHierarchyParent | 6184 | 0 |
| | Attribute Define | 10 | 10 |
| | Requirement Link Type | 176 | 176 |
| | Requirement Type | 203 | 203 |

Results

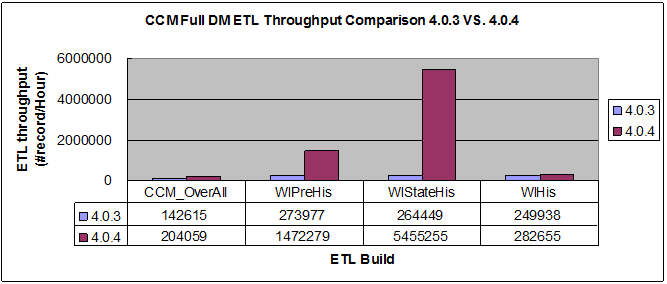

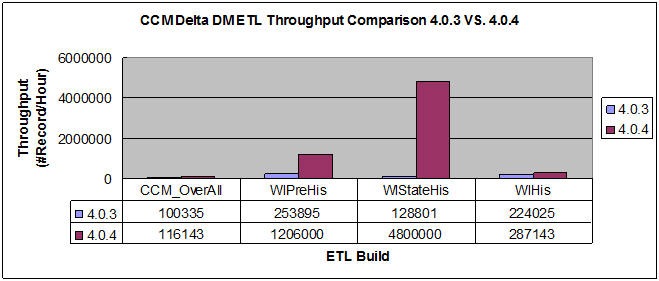

Based on the test data and test environment, the Data Manager ETL performance improved significantly. The CCM ETL improved about 30% and the RM ETL improved about 40%.

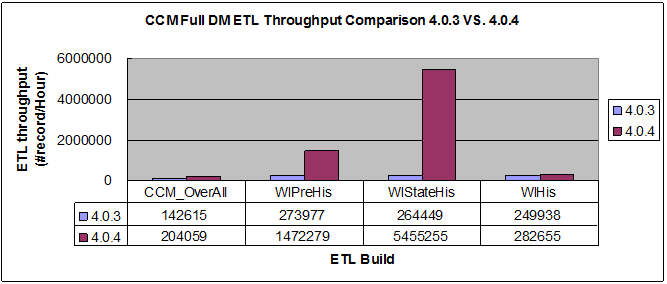

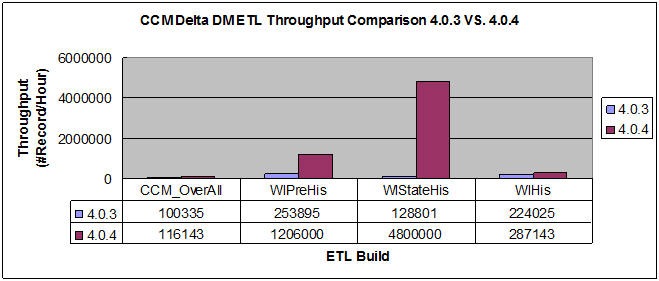

CCM Data Manager ETL performance improvement

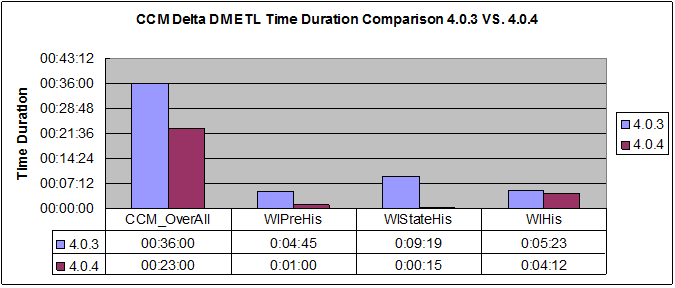

The major improvements occurred in three ETL builds: WorkItemPreviousHistory, WorkItemStateHistory, and WorkItemHistory. One improvement involved changing the Update Detection Method from "select" to "update". If the majority of records will be updated or inserted, the "update" method is the most efficient. Otherwise, if only a few records need to be updated, the "select" method might be faster.
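The trade-off between the two detection methods can be illustrated with a minimal sketch. This is not the Data Manager implementation: the table name `work_item_history`, the helper functions, and the use of SQLite are all assumptions chosen for illustration. The sketch assumes that a "select"-style delivery reads each target row first and writes only when needed, while an "update"-style delivery attempts the UPDATE directly and inserts when no row matched.

```python
import sqlite3


def make_table(rows):
    """Create a hypothetical in-memory target table with some existing rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE work_item_history (id INTEGER PRIMARY KEY, state TEXT)"
    )
    conn.executemany("INSERT INTO work_item_history VALUES (?, ?)", rows)
    return conn


def deliver_select(conn, records):
    """'select'-style: read the existing row, then write only if required.

    Returns (reads, writes) issued against the target table.
    """
    reads = writes = 0
    for rec_id, state in records:
        row = conn.execute(
            "SELECT state FROM work_item_history WHERE id = ?", (rec_id,)
        ).fetchone()
        reads += 1
        if row is None:
            conn.execute(
                "INSERT INTO work_item_history VALUES (?, ?)", (rec_id, state)
            )
            writes += 1
        elif row[0] != state:
            conn.execute(
                "UPDATE work_item_history SET state = ? WHERE id = ?",
                (state, rec_id),
            )
            writes += 1
    return reads, writes


def deliver_update(conn, records):
    """'update'-style: try the UPDATE first, INSERT when no row matched.

    Returns (reads, writes) issued against the target table.
    """
    writes = 0
    for rec_id, state in records:
        cur = conn.execute(
            "UPDATE work_item_history SET state = ? WHERE id = ?",
            (state, rec_id),
        )
        writes += 1
        if cur.rowcount == 0:
            conn.execute(
                "INSERT INTO work_item_history VALUES (?, ?)", (rec_id, state)
            )
            writes += 1
    return 0, writes


def snapshot(conn):
    """Return the table contents in a stable order for comparison."""
    return conn.execute(
        "SELECT id, state FROM work_item_history ORDER BY id"
    ).fetchall()
```

In this sketch, when every incoming record changes an existing row, the "select" style pays one extra read per record on top of the same writes. When nothing has changed, the "select" style issues only reads, whereas the "update" style still writes every row. Both styles leave the table in the same final state.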

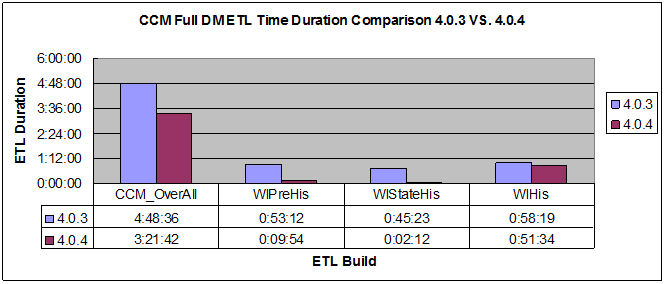

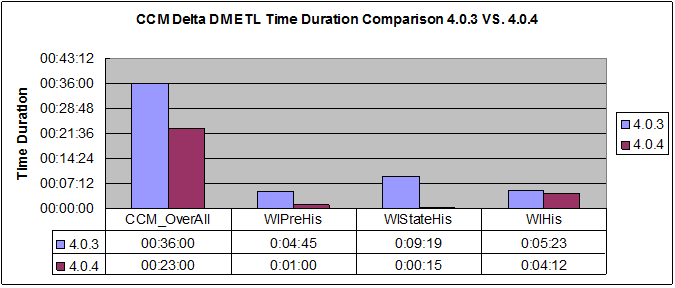

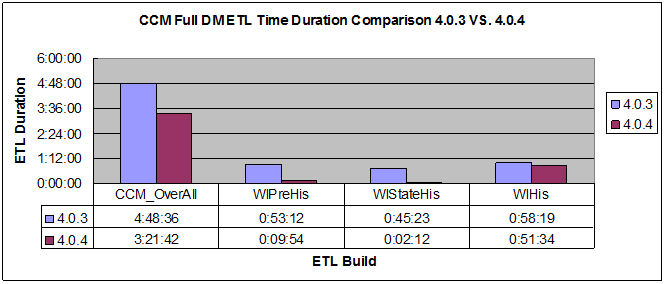

As the following four figures show, the duration of the full load was reduced by about 30% based on the test data shape. For specific ETL builds, the duration of the WorkItemPreviousHistory build was reduced by about 80%, the WorkItemStateHistory build by 90%, and the WorkItemHistory build by 15%.

[Note]: The format of units in the charts for the ETL duration is HH:MM:SS.

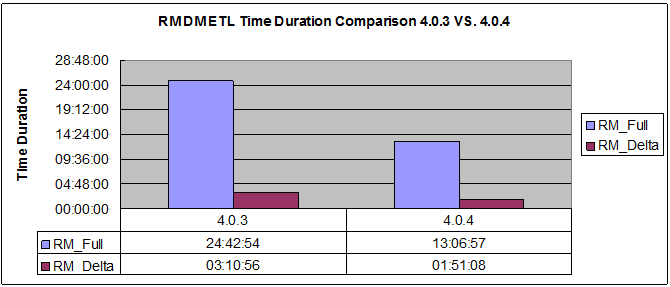

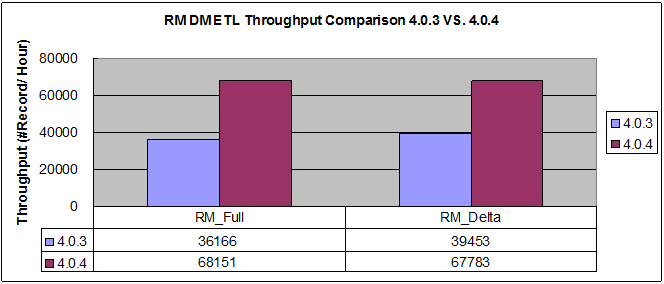

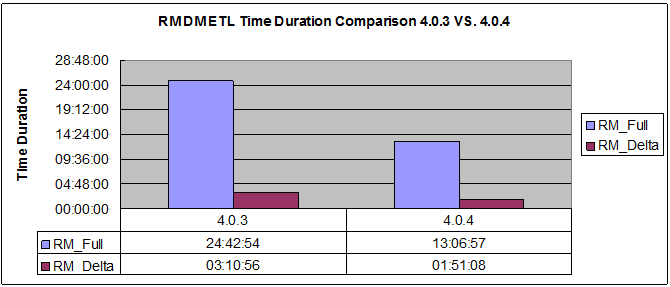

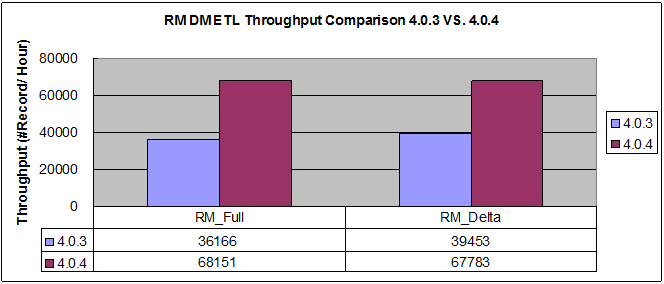

RM Data Manager ETL performance improvement

The major improvement came from the introduction of staging, which reduced the number of times an operation fetched data from the REST service. In CLM 4.0.4, the RM ETL added two temporary tables that load most of the data at once. In the previous ETL, each record type would be loaded several times. For example, the Custom Attribute, Custom Attribute Type, and Requirement records use the same REST service. Before 4.0.4, the ETL would get the data three separate times and would take about 11 hours to fetch the data from the REST service. In 4.0.4, loading to the temporary table means that the data is fetched only once from the REST service. The ETL builds then fetch the data from the relational temporary table in the data warehouse, which improves performance.
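The staging idea described above can be sketched as follows. This is an illustration under stated assumptions, not the RM ETL code: `FakeRestService`, the record fields, and the `BUILDS` mapping are hypothetical stand-ins, and an in-memory list plays the role of the temporary table in the data warehouse.

```python
class FakeRestService:
    """Hypothetical stand-in for the shared REST service; counts fetches."""

    def __init__(self, records):
        self._records = records
        self.fetch_count = 0

    def fetch_all(self):
        self.fetch_count += 1
        return list(self._records)


# Three hypothetical builds that all consume the same REST payload.
BUILDS = {
    "Requirement": lambda r: (r["id"], r["type"]),
    "Custom Attribute": lambda r: (r["id"], r["attr"]),
    "Custom Attribute Type": lambda r: (r["attr"], r["attr_type"]),
}


def load_without_staging(service):
    """Each build fetches the payload from the REST service on its own."""
    return {
        name: [extract(r) for r in service.fetch_all()]
        for name, extract in BUILDS.items()
    }


def load_with_staging(service):
    """Fetch once into a staging area, then let every build read from it."""
    staging = service.fetch_all()  # plays the role of the temporary table
    return {
        name: [extract(r) for r in staging]
        for name, extract in BUILDS.items()
    }


SAMPLE = [
    {"id": 1, "type": "Feature", "attr": "priority", "attr_type": "enum"},
    {"id": 2, "type": "Use Case", "attr": "owner", "attr_type": "string"},
]
```

With three builds sharing one service, the unstaged path calls `fetch_all` three times and the staged path calls it once, while both produce the same build output.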

Related jazz.net defects: 74735, 43066.

As the following two figures show, the throughput of the RM Data Manager ETL improved by more than 40%. The improvement is calculated as (Throughput_4.0.4 - Throughput_4.0.3) / Throughput_4.0.4.
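The calculation above can be written as a short worked example. The function names and the record counts and durations used below are hypothetical, chosen only to show the arithmetic. They are not measurements from this study. The formula follows the text as written, with the 4.0.4 throughput as the denominator.

```python
def throughput(records, seconds):
    """Records processed per second."""
    return records / seconds


def relative_improvement(old_throughput, new_throughput):
    """(new - old) / new, matching the formula given in the text."""
    return (new_throughput - old_throughput) / new_throughput


# Hypothetical numbers for illustration only.
old = throughput(records=360_000, seconds=6_000)  # 60 records/s
new = throughput(records=360_000, seconds=3_600)  # 100 records/s
improvement = relative_improvement(old, new)      # (100 - 60) / 100
```

With these example values, the improvement evaluates to 40%.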

Appendix A

| Product | Version | Highlights for configurations under test |
| --- | --- | --- |
| IBM WebSphere Application Server | 8.5.0.1 | JVM settings (GC policy and arguments, max and init heap sizes): -verbose:gc -XX:+PrintGCDetails -Xverbosegclog:gc.log -Xgcpolicy:gencon -Xmx8g -Xms8g -Xmn1g -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 -XX:MaxDirectMemorySize=1g |
| DB2 | DB2 9.7.5 | Transaction log setting of data warehouse: transaction log size changed to 40960 (db2 update db cfg using LOGFILSIZ=40960) |
| LDAP server | IBM Tivoli Directory Server 6.3 | |
| License server | | Hosted locally by JTS server |
| Network | | Shared subnet within test lab |

For more information

About the authors

WangPengPeng

Questions and comments:

- What other performance information would you like to see here?

- Do you have performance scenarios to share?

- Do you have scenarios that are not addressed in documentation?

- Where are you having problems in performance?
