Setting up a Change and Configuration Management application clustered environment version 6.0.4

Authors: MichaelAfshar, AlexBernstein, ChrisAustin, PrabhatGupta, YanpingChen, BreunReedBuild basis: Change and Configuration Management 6.0.4

Page contents

- Approach to clustering

- Modifying the MQTT advanced properties

- Configure HAProxy server as load balancer for ccm cluster/ JAS cluster/ IoT MessageSight cluster

- Configure high availability for HAProxy clusters

- Configure high availability for IoT MessageSight cluster

- Configure IHS as Reverse Proxy

- Troubleshooting the cluster

You can set up a clustered environment to host a Jazz Team Server and multiple Change and Configuration Management (CCM) nodes.

For instructions on setting up a clustered environment on version 6.0.5 and later, see Change and Configuration Management clustered environment version 6.0.5 and later

Note: The MessageSight1 instance is online. Therefore, MessageSight2 is offline since it serves as non-primary standby message server in our HA configuration. On failure of the active appliance, the standby appliance activates itself by constructing the IBM MessageSight server state from the data in the store.

Note: The MessageSight1 instance is online. Therefore, MessageSight2 is offline since it serves as non-primary standby message server in our HA configuration. On failure of the active appliance, the standby appliance activates itself by constructing the IBM MessageSight server state from the data in the store.

The steps to setup IoT MessageSight cluster are as following:

* Configuring your system for high availability

* Configuring MessageSight by using the Web UI

* Configuring MessageSight by using REST Administration APIs

To setup communication between ccm cluster and IoT MessageSight cluster, add the following lines to CCM_Install_Dir/server/server.startup file:

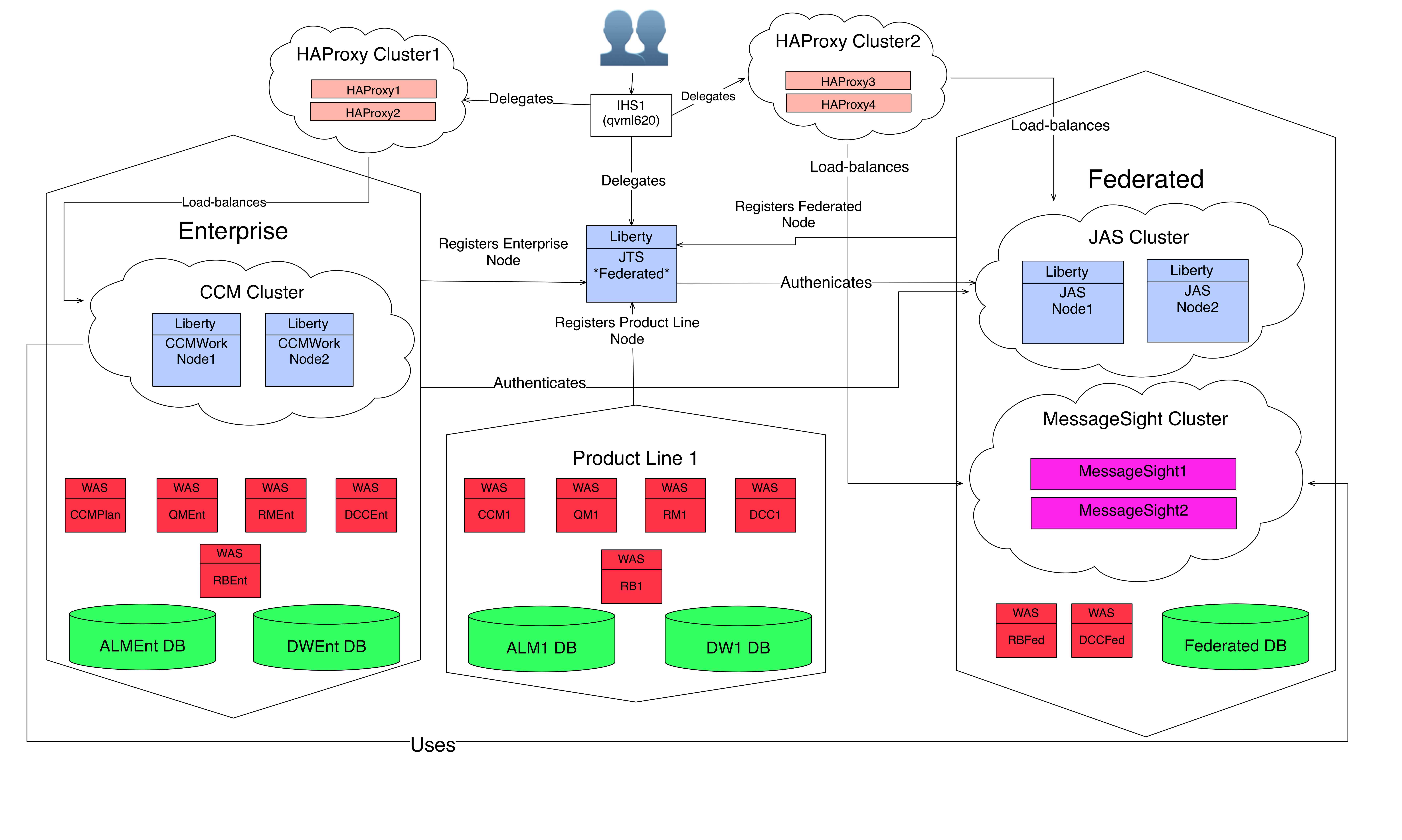

Approach to clustering

The CCM applications must be installed on multiple servers and connected by using an MQTT broker, which enables synchronization across the nodes. A load balancer is used as a front-end URL, which accepts connections and distributes the requests to one of the back-end CCM nodes. The host name of the load balancer is used as public URL for the CCM application during setup.- The following diagram illustrates the approach to clustering:

Modifying the MQTT advanced properties

You can set or modify the default MQTT related service properties on the application's Advanced Properties page:- Log in to the application (CCM) server as an administrator.

- Click Application > Advanced Properties.

- Search for

com.ibm.team.repository.service.mqtt.internal.MqttService. - Click Edit in the title bar and modify each entry. Click Preview in the title bar to exit the edit mode and save your changes. The following table list all MQTT properties:

| Property | Description |

|---|---|

| MQTT Broker address | The address of the MQTT (MQ Telemetry Transport) broker. If not provided, clustering support will be disabled. |

| Session persistence location | The location (the folder) where the "in-flight" messages will be stored if file-persistent location is enabled. Default is mqtt sub-folder under server folder. |

| Unique cluster name | This property can be used to uniquely name your cluster. This property must be set when there are more then one clusters communicating through the same MQTT message broker, or when there are more than one cluster-enabled application on the same cluster. If not provided, the port number of MQTT message broker will be used, but that may not be adequate. |

| Enable file-based persistence | Enabling file-based persistence will cause published MQTT messages to be saved, until the message is confirmed to be delivered. If a node goes down and restarted, or failed connection to MQTT broker is restored, persisted messages with QoS 1 and 2 will be resent. |

| Enable in-memory MQTT message | Message logging causes the last received message for each topic to be retained in memory for debug access. |

| MQTT message log size | Number of messages to store in the MQTT message log. |

| Maximum callback processing | Maximum number of concurrent background threads to use for processing incoming MQTT messages. Must be greater than minimum. |

| Minimum callback processing | Minimum number of concurrent background threads to use for processing incoming MQTT messages. |

| Queue size | This queue will hold background tasks submitted to process the incoming MQTT messages until a callback processing thread becomes available. |

Configure HAProxy server as load balancer for ccm cluster/ JAS cluster/ IoT MessageSight cluster

Installation and setup instruction for HAProxy server can be found in the Interactive Installation Guide. In the SVT Single JTS topology, HAProxy server connects to ccm cluster and JAS cluster in http mode, and connects to IoT MessageSight cluster in tcp mode. The connection information need to be configured in HAProxy_Install_Dir/haproxy.cfg file. The following example shows how to define http connection and tcp connection in haproxy.cfg file.

HAProxy_Install_Dir/haproxy.cfg

# connect JAS cluster in http mode

frontend jas-proxy

bind *:80

bind *:9643 ssl crt /etc/haproxy/ssl/proxy.pem no-sslv3

log global

option httplog

mode http

capture cookie SERVERID len 32

redirect scheme https if !{ ssl_fc }

maxconn 2000 # The expected number of the users of the system.

default_backend jas

backend jas

option forwardfor

http-request set-header X-Forwarded-Port %[dst_port]

http-request add-header X-Forwarded-Proto https if { ssl_fc }

fullconn 1000 # if not specified, HAProxy will set this to 10% of 'maxconn' specified on the frontend

balance leastconn

cookie SERVERID insert indirect nocache

server jas1 [JAS server 1 URI]:9643 minconn 100 maxconn 500 ssl check cookie jas1 verify none

server jas2 [JAS server 2 URI]:9643 minconn 100 maxconn 500 ssl check cookie jas2 verify none

# connect MessageSight cluster in tcp mode

listen MessageSight

bind *:1883

mode tcp

balance leastconn

option tcplog

server MessageSight1 [MessageSight server 1 URI]:1883 check

server MessageSight2 [MessageSight server 2 URI]:1883 check

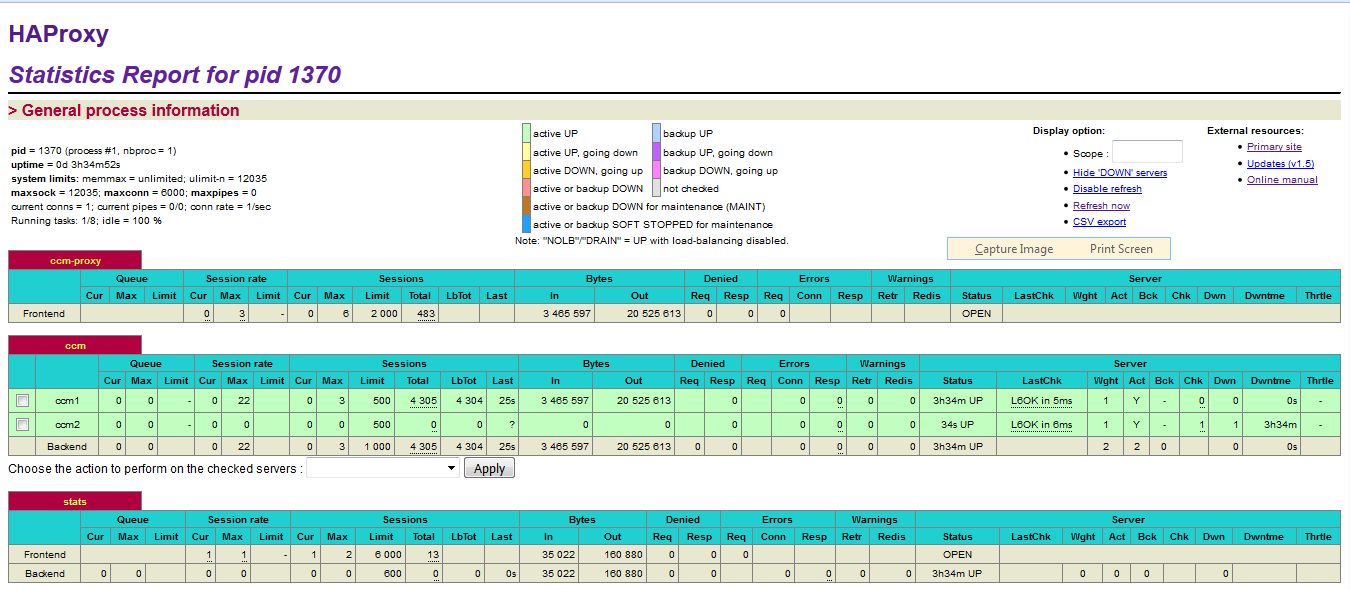

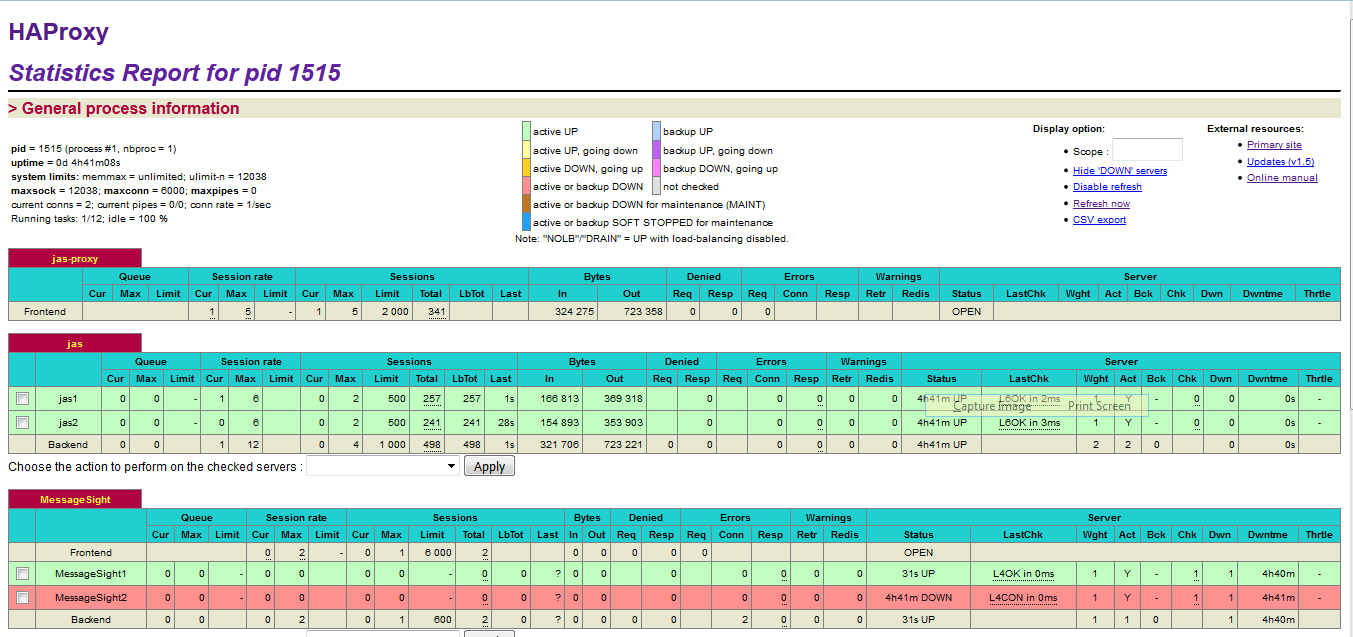

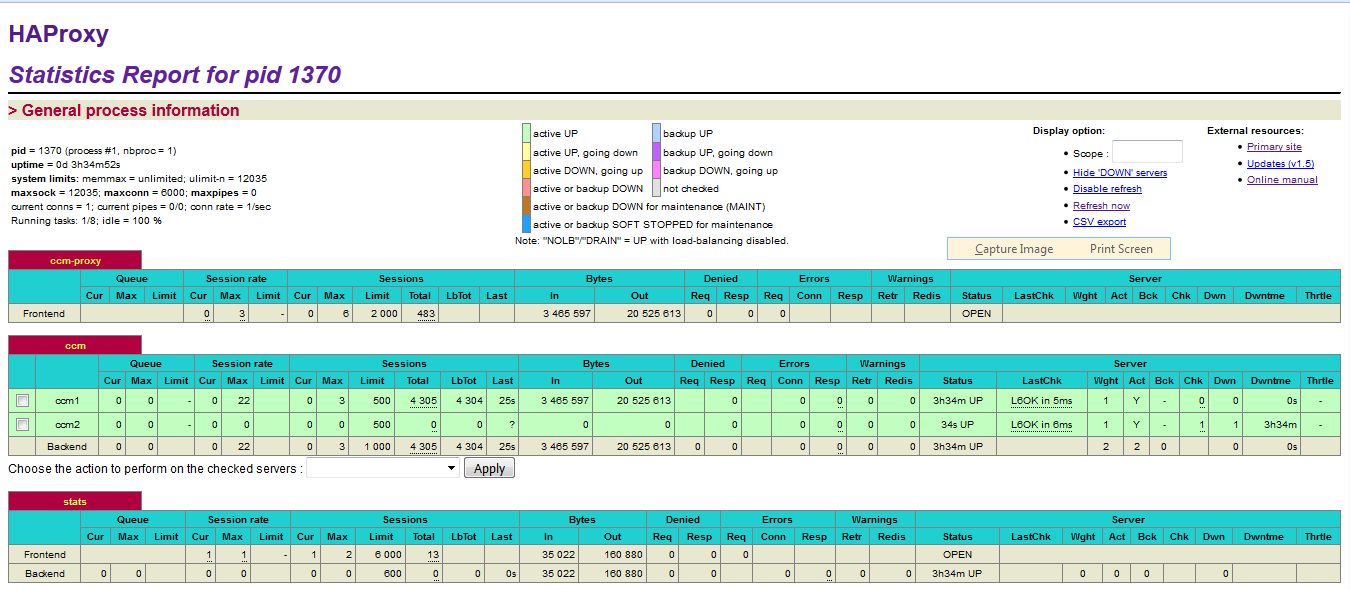

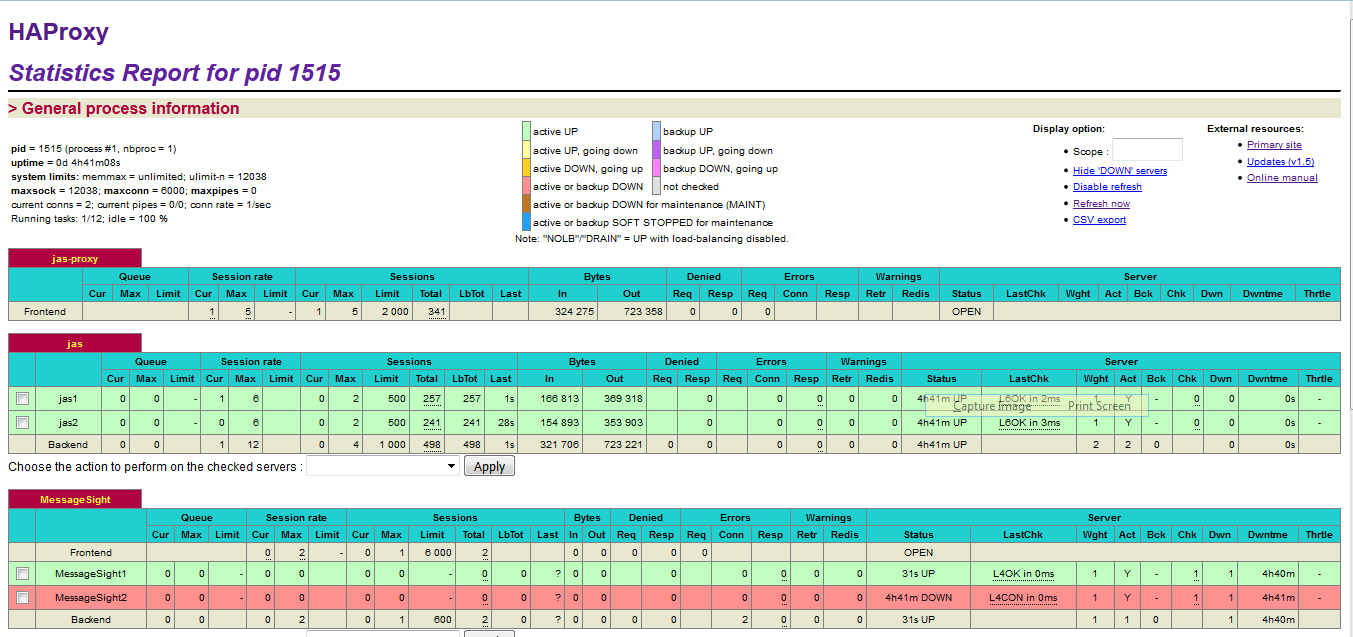

After restart HAProxy server, HAProxy Stats for HAProxy Cluster1 and HAProxy Cluster2 in SVT Single JTS topology should be like the following:

Note: The MessageSight1 instance is online. Therefore, MessageSight2 is offline since it serves as non-primary standby message server in our HA configuration. On failure of the active appliance, the standby appliance activates itself by constructing the IBM MessageSight server state from the data in the store.

Note: The MessageSight1 instance is online. Therefore, MessageSight2 is offline since it serves as non-primary standby message server in our HA configuration. On failure of the active appliance, the standby appliance activates itself by constructing the IBM MessageSight server state from the data in the store.

Configure high availability for HAProxy clusters

We use the Keepalived service and a virtual IP address that would be shared between the primary and secondary HAProxy nodes to setup high availability. We use a script master_backup.sh to check status of HAProxy server nodes and to do the switch between the primary and secondary nodes. Example of Keepalived_Install_Dir/keepalived.conf and Keepalived_Install_Dir/master_backup.sh are as following:

Keepalived_Install_Dir/keepalived.conf

! Configuration File for keepalived

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance VI_1 {

state MASTER # BACKUP on the stand-by node

interface eno192 # To be replaced by real interface number

virtual_router_id 11

priority 101 # 100 on the stand-by node

virtual_ipaddress {

10.10.10.20/24 # To be replaced by real cluster ViP

}

notify_master "/etc/keepalived/master_backup.sh MASTER"

notify_backup "/etc/keepalived/master_backup.sh BACKUP"

notify_fault "/etc/keepalived/master_backup.sh FAULT"

track_script {

chk_haproxy

}

}

Keepalived_Install_Dir/master_backup.sh

#! /bin/bash

STATE=$1

NOW=$(date)

KEEPALIVED="/etc/keepalived"

case $STATE in

"MASTER") touch $KEEPALIVED/MASTER

echo "$NOW Becoming MASTER" >> $KEEPALIVED/COUNTER

/bin/systemctl start haproxy

exit 0

;;

"BACKUP") echo "$NOW Becoming BACKUP" >> $KEEPALIVED/COUNTER

/bin/systemctl stop haproxy || killall -9 haproxy

exit 0

;;

"FAULT") echo "$NOW Becoming FAULT" >> $KEEPALIVED/COUNTER

/bin/systemctl stop haproxy || killall -9 haproxy

exit 0

;;

*) echo "unknow state" >> $KEEPALIVED/COUNTER

echo "NOW Becoming UNKNOWN" >> $KEEPALIVED/COUNTER

exit 1

;;

esac

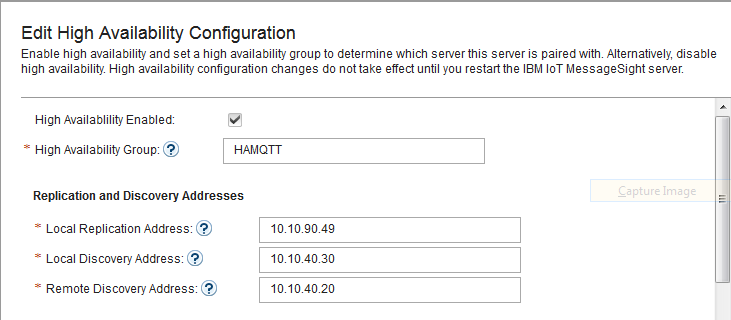

Configure high availability for IoT MessageSight cluster

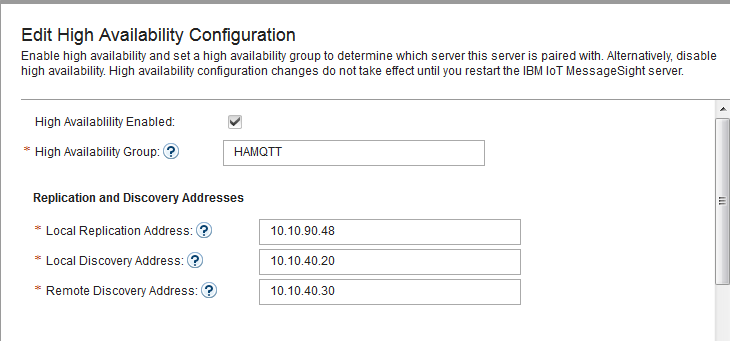

Before configure high availability for IoT MessageSight servers, we need to add two additional vNICs to each IoT MessageSight node. One for discovery interface, and the other one for replication interface. The discovery IP should be in the same subnet as the host’s IP, and the replication IP should be in a different subnet. For example, we can have additional vNICs like this:| Address | Hostname |

|---|---|

| 10.10.40.1 | MessageSight1 |

| 10.10.40.20 | MessageSight1-discover |

| 10.10.90.48 | MessageSight1-replicate |

| 10.10.40.2 | MessageSight2 |

| 10.10.40.30 | MessageSight2-discover |

| 10.10.90.49 | MessageSight2-replicate |

- For each IoT MessageSight node, install the non-Dockerized version of IoT MessageSight and configure it according to the Interactive Installation Guide using IoT MessageSight Web UI.

- Disable all existing endpoints.

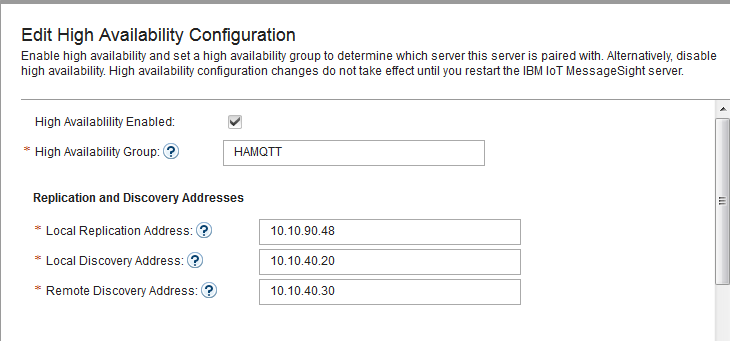

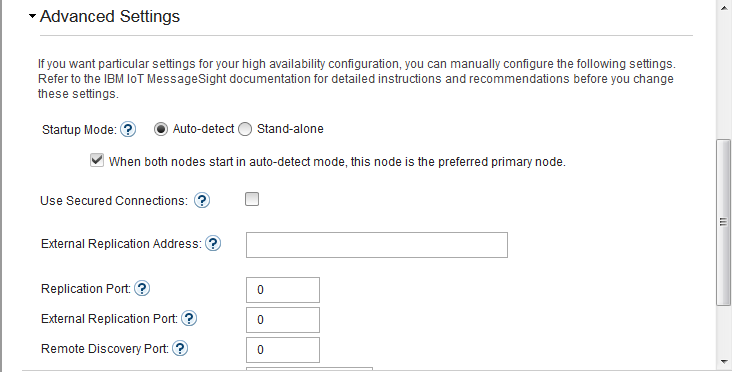

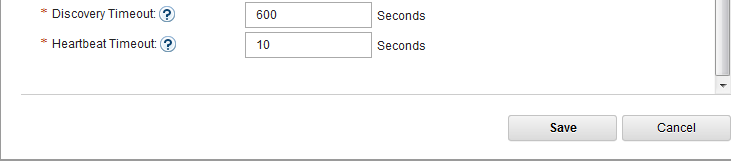

- In IoT MessageSight Web UI, do the following on MessageSight1 node (the primary node):

- Select Server > High Availability, then click Edit in Configuration section.

- Configure the server as follows, then Save.

-

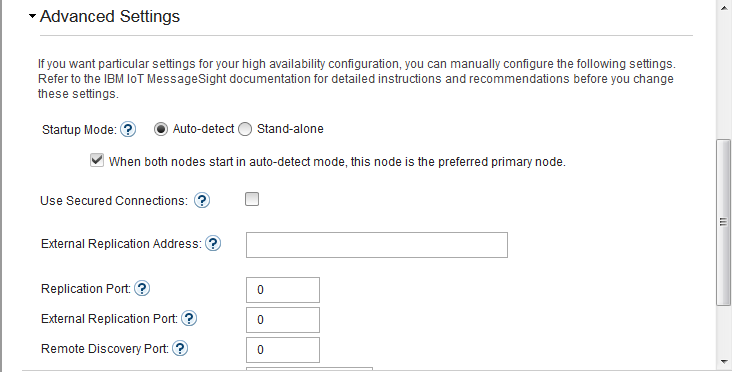

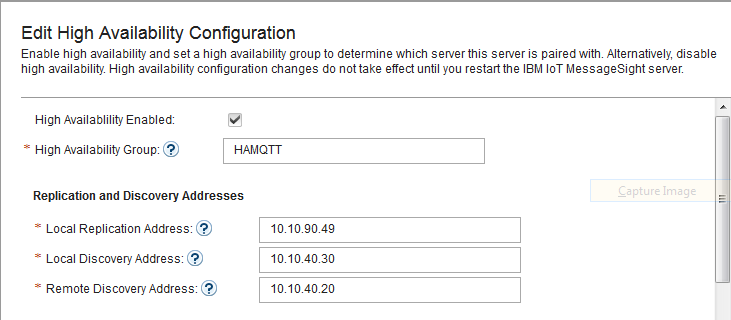

- Switch to MessageSight2(the standby node).

- Select Server > High Availability, then click Edit in Configuration section.

- Configure the server as follows, then Save.

-

- Switch to MessageSight1, restart the server in Clean store mode: select Server > Server Control, then click Clean store in the IoT MessageSight Server section

- Switch back to MessageSight2 and restart the server in Clean store mode. Make sure there is no error in node synchronization.

- Switch to MessageSight1, enable endpoints then restart the server in Clean store mode again.

* Configuring your system for high availability

* Configuring MessageSight by using the Web UI

* Configuring MessageSight by using REST Administration APIs

To setup communication between ccm cluster and IoT MessageSight cluster, add the following lines to CCM_Install_Dir/server/server.startup file:

CCM_Install_Dir/server/server.startup JAVA_OPTS="$JAVA_OPTS -Dcom.ibm.team.repository.cluster.nodeId="To be replaced by a unique ccm node id"" JAVA_OPTS="$JAVA_OPTS -Dcom.ibm.team.repository.service.internal.db.allowConcurrentAccess=true" JAVA_OPTS="$JAVA_OPTS -Dretry.count=0" JAVA_OPTS="$JAVA_OPTS -Dretry.wait=10" JAVA_OPTS="$JAVA_OPTS -Dactivation.code.ClusterSupport=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"Add the following line to CCM_Install_Dir/server/conf/ccm/teamserver.properties file:

CCM_Install_Dir/server/conf/ccmwork/teamserver.properties com.ibm.team.repository.mqtt.broker.address=tcp\://[HAProxy cluster 2 visual IP]\:1883

Configure IHS as Reverse Proxy

Note: This section describes about configuring IHS only as a reverse proxy and not for load balancing between clustered nodes. You can also configure IHS to load balance between clustered nodes along with reverse proxy which will be described in next section.- Setup IHS proxy server on a remote web server.

- Configure CLM Plugins for each WAS based CLM applications.

- Configure IHS for all Liberty based Apps, and for both HAProxy clusters.

- In case that the non WAS based applications require different ports, define the ports in HTTPServer_Install_Dir/conf/httpd.conf file and WebSphere_Plugins_Install_Dir/config/webserver1/plugin-cfg.xml. For example, to define port 9643, add the following into httpd.conf file and plugin-cfg.xml files:

HTTPServer_Install_Dir/conf/httpd.conf Listen 0.0.0.0:9643 <VirtualHost *:9643> SSLEnable </VirtualHost> KeyFile HTTPServer_Install_Dir/ihskeys.kdb SSLStashFile HTTPServer_Install_Dir/ihskeys.sth SSLDisable WebSphere_Plugins_Install_Dir/Plugins/config/webserver1/plugin-cfg.xml <VirtualHostGroup Name="default_host"> <VirtualHost Name="*:9443"/> <VirtualHost Name="*:9444"/> <VirtualHost Name="*:9643"/> </VirtualHostGroup> - In the plugin-cfg.xml file, add access information for each of the non WAS based applications/clusters. For a cluster, only one entry is required. In the SVT Single JTS topology, we need entries for JTS, HAProxy cluster1, and HAProxy cluster2. An example is as following:

WebSphere_Plugins_Install_Dir/Plugins/config/webserver1/plugin-cfg.xml <ServerCluster Name="JTS" ServerIOTimeoutRetry="-1" CloneSeparatorChange="false" LoadBalance="Round Robin" GetDWLMTable="false" PostBufferSize="0" IgnoreAffinityRequests="false" PostSizeLimit="-1" RemoveSpecialHeaders="true" RetryInterval="60"> <Server Name="jts" ConnectTimeout="0" ExtendedHandshake="false" ServerIOTimeout="900" LoadBalanceWeight="1" MaxConnections="-1" WaitForContinue="false"> <Transport Protocol="https" Port="9443" Hostname="To be replaced by JTS’s URL" > <Property name="keyring" value="HTTPServer_Install_Dir/ihskeys.kdb"/> <Property name="stashfile" value="HTTPServer_Install_Dir/ihskeys.sth"/> </Transport> </Server> </ServerCluster> <UriGroup Name="jts_URIs"> <Uri Name="/jts/*" AffinityURLIdentifier="jsessionid" AffinityCookie="JSESSIONID"/> <Uri Name="/clmhelp/*" AffinityURLIdentifier="jsessionid" AffinityCookie="JSESSIONID"/> </UriGroup> <Route VirtualHostGroup="default_host" UriGroup="jts_URIs" ServerCluster="JTS"/> <ServerCluster Name="ccm_cluster_server1" ServerIOTimeoutRetry="-1" CloneSeparatorChange="false" LoadBalance="Round Robin" GetDWLMTable="false" PostBufferSize="0" IgnoreAffinityRequests="false" PostSizeLimit="-1" RemoveSpecialHeaders="true" RetryInterval="60"> <Server Name="ccm_cluster_server1" ConnectTimeout="0" ExtendedHandshake="false" ServerIOTimeout="900" LoadBalanceWeight="1" MaxConnections="-1" WaitForContinue="false"> <Transport Protocol="https" Port="9443" Hostname="To be replaced by the visual IP that defined for HAProxy cluster1" > <Property name="keyring" value="HTTPServer_Install_Dir/ihskeys.kdb"/> <Property name="stashfile" value="HTTPServer_Install_Dir/ihskeys.sth"/> </Transport> </Server> </ServerCluster> <UriGroup Name="ccm_server_Cluster_URIs"> <Uri Name="/ccmwork/*" AffinityURLIdentifier="jsessionid" AffinityCookie="JSESSIONID"/> </UriGroup> <Route VirtualHostGroup="default_host" UriGroup="ccm_server_Cluster_URIs" ServerCluster="ccm_cluster_server1"/> <ServerCluster Name="haproxy_server1_status" ServerIOTimeoutRetry="-1" CloneSeparatorChange="false" LoadBalance="Round Robin" GetDWLMTable="false" PostBufferSize="0" IgnoreAffinityRequests="false" PostSizeLimit="-1" RemoveSpecialHeaders="true" RetryInterval="60"> <Server Name="haproxy_server1" ConnectTimeout="0" ExtendedHandshake="false" ServerIOTimeout="900" LoadBalanceWeight="1" MaxConnections="-1" WaitForContinue="false"> <Transport Protocol="https" Port="9444" Hostname="To be replaced by the visual IP that defined for HAProxy cluster1" > <Property name="keyring" value="HTTPServer_Install_Dir/ihskeys.kdb"/> <Property name="stashfile" value="HTTPServer_Install_Dir/ihskeys.sth"/> </Transport> </Server> </ServerCluster> <UriGroup Name="haproxy_server1_Cluster_URIs"> <Uri Name="/haproxy1_stats" AffinityURLIdentifier="jsessionid" AffinityCookie="JSESSIONID"/> </UriGroup> <Route VirtualHostGroup="default_host" UriGroup="haproxy_server1_Cluster_URIs" ServerCluster="haproxy_server1_status"/> <ServerCluster Name="jas_cluster_server1" ServerIOTimeoutRetry="-1" CloneSeparatorChange="false" LoadBalance="Round Robin" GetDWLMTable="false" PostBufferSize="0" IgnoreAffinityRequests="false" PostSizeLimit="-1" RemoveSpecialHeaders="true" RetryInterval="60"> <Server Name=" jas_server1" ConnectTimeout="0" ExtendedHandshake="false" ServerIOTimeout="900" LoadBalanceWeight="1" MaxConnections="-1" WaitForContinue="false"> <Transport Protocol="https" Port="9643" Hostname="To be replaced by the visual IP that defined for HAProxy cluster2" > <Property name="keyring" value="HTTPServer_Install_Dir/ihskeys.kdb"/> <Property name="stashfile" value="HTTPServer_Install_Dir/ihskeys.sth"/> </Transport> </Server> </ServerCluster> <UriGroup Name=" jas_cluster_server1_URIs"> <Uri Name="/oidc/*" AffinityURLIdentifier="jsessionid" AffinityCookie="JSESSIONID"/> <Uri Name="/jazzop/*" AffinityURLIdentifier="jsessionid" AffinityCookie="JSESSIONID"/> </UriGroup> <Route VirtualHostGroup="default_host" UriGroup=" jas_cluster_server1_URIs" ServerCluster="jas_cluster_server1"/> <ServerCluster Name="haproxy_server2_stats" ServerIOTimeoutRetry="-1" CloneSeparatorChange="false" LoadBalance="Round Robin" GetDWLMTable="false" PostBufferSize="0" IgnoreAffinityRequests="false" PostSizeLimit="-1" RemoveSpecialHeaders="true" RetryInterval="60"> <Server Name="haproxy_server2_stats" ConnectTimeout="0" ExtendedHandshake="false" ServerIOTimeout="900" LoadBalanceWeight="1" MaxConnections="-1" WaitForContinue="false"> <Transport Protocol="https" Port="9444" Hostname="To be replaced by the visual IP that defined for HAProxy cluster2" > <Property name="keyring" value="HTTPServer_Install_Dir/ihskeys.kdb"/> <Property name="stashfile" value="HTTPServer_Install_Dir/ihskeys.sth"/> </Transport> </Server> </ServerCluster> <UriGroup Name="haproxy_server2_stats_Cluster_URIs"> <Uri Name="/haproxy2_stats" AffinityURLIdentifier="jsessionid" AffinityCookie="JSESSIONID"/> </UriGroup> <Route VirtualHostGroup="default_host" UriGroup="haproxy_server2_stats_Cluster_URIs" ServerCluster="haproxy_server2_stats"/> - Import certificates for each of the non WAS based applications/clusters/haproxy into IHS’s keystore.

- Restart IHS.

- In case that the non WAS based applications require different ports, define the ports in HTTPServer_Install_Dir/conf/httpd.conf file and WebSphere_Plugins_Install_Dir/config/webserver1/plugin-cfg.xml. For example, to define port 9643, add the following into httpd.conf file and plugin-cfg.xml files:

Troubleshooting the cluster

Related topics: Migrate from Traditional WebSphere to WebSphere Liberty

External links:

Additional contributors: TWikiUser, TWikiUser

Contributions are governed by our Terms of Use. Please read the following disclaimer.

Dashboards and work items are no longer publicly available, so some links may be invalid. We now provide similar information through other means. Learn more here.