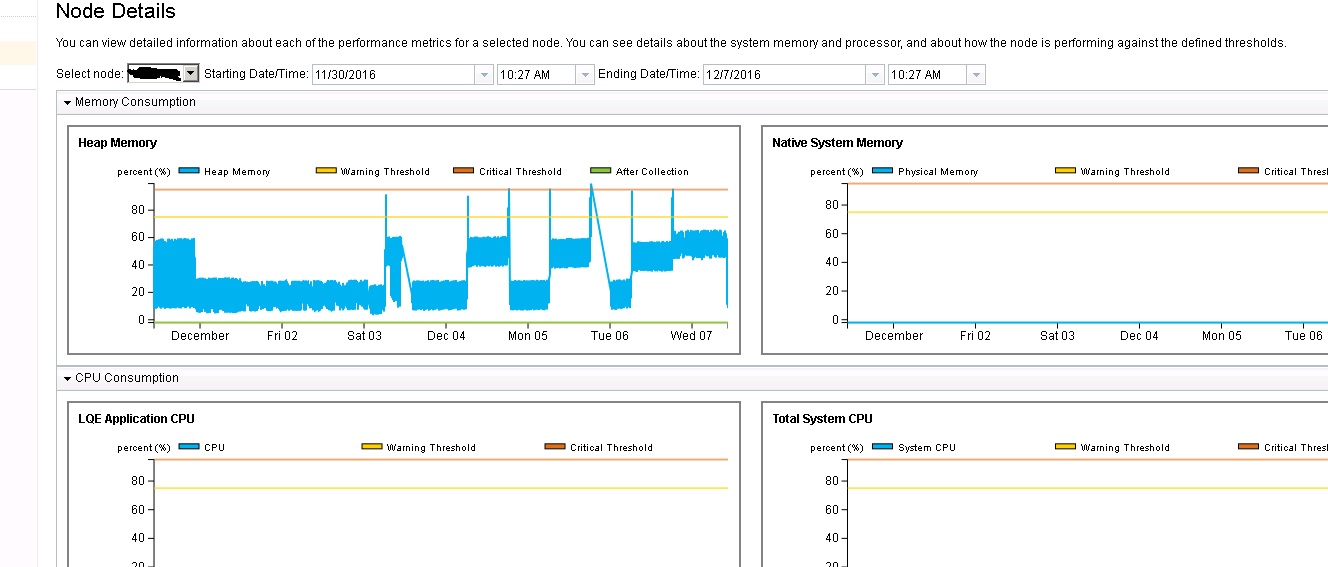

LQE heap explodes and leaves coredumps

NULL ===============================

1TICHARSET 1252

1TISIGINFO Dump Event "systhrow" (00040000) Detail "java/lang/OutOfMemoryError" "Java heap space" received

1TIDATETIME Date: 2016/11/23 at 12:24:55

1TIFILENAME Javacore filename: D:\Program Files\IBM\Websphere\AppServer\profiles\AppSrv01\javacore.20161123.122316.8724.0007.txt

1TIREQFLAGS Request Flags: 0x81 (exclusive+preempt)

1TIPREPSTATE Prep State: 0x104 (exclusive_vm_access+trace_disabled)

NULL ------------------------------------------------------------------------
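The 1TISIGINFO line in this excerpt records what triggered the dump. Here it is a java/lang/OutOfMemoryError with the message "Java heap space", and that Java heap vs. native memory distinction is what the replies turn on. Below is a minimal sketch of pulling those fields out of the line. The class and method names are hypothetical, and the regular expression assumes the line layout shown in this excerpt.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: extracts the dump event, flags, exception class and message
// from a javacore 1TISIGINFO line laid out like the excerpt above.
public class SigInfoParser {

    // Dump Event "<event>" (<hex flags>) Detail "<exception class>" "<message>"; the message is optional.
    private static final Pattern SIGINFO = Pattern.compile(
            "1TISIGINFO\\s+Dump Event \"([^\"]+)\" \\(([0-9A-Fa-f]+)\\) Detail \"([^\"]+)\"(?: \"([^\"]*)\")?");

    public static final class SigInfo {
        public final String event;
        public final String flags;
        public final String exceptionClass;
        public final String message; // null if the line carries no message

        SigInfo(String event, String flags, String exceptionClass, String message) {
            this.event = event;
            this.flags = flags;
            this.exceptionClass = exceptionClass;
            this.message = message;
        }

        // Only the "Java heap space" message is treated as Java heap exhaustion;
        // any other message is left for manual investigation.
        public boolean isJavaHeapExhaustion() {
            return "java/lang/OutOfMemoryError".equals(exceptionClass)
                    && "Java heap space".equals(message);
        }
    }

    public static SigInfo parse(String line) {
        Matcher m = SIGINFO.matcher(line);
        if (!m.find()) {
            throw new IllegalArgumentException("Not a 1TISIGINFO line: " + line);
        }
        return new SigInfo(m.group(1), m.group(2), m.group(3), m.group(4));
    }

    public static void main(String[] args) {
        SigInfo info = parse("1TISIGINFO Dump Event \"systhrow\" (00040000) Detail "
                + "\"java/lang/OutOfMemoryError\" \"Java heap space\" received");
        System.out.println(info.event + " | " + info.exceptionClass + " | " + info.message);
        // prints: systhrow | java/lang/OutOfMemoryError | Java heap space
        System.out.println("Java heap exhaustion: " + info.isJavaHeapExhaustion());
        // prints: Java heap exhaustion: true
    }
}
```

Pattern-matching the one line keeps the sketch independent of the rest of the javacore layout. A full triage tool would read the whole file.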


You may already have resolved this problem, but I'll post this anyway for others:


Just a clarification on what the JVM option -Xnocompressedrefs really does. It tells the JVM to use full 64-bit references instead of compressed 32-bit ones, so the data they refer to can be stored above the lowest 4 GB of the address space. Other things (such as NIO) can still be stored below the 4 GB boundary. With a heap size this large, the heap is allocated above 4 GB even without the -Xgc:preferredHeapBase option. Using this option is an acceptable solution if you have an OOM in the "native heap". An OOM in the "Java heap" is a different problem and requires further investigation.
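To see which of the two situations applies, the standard java.lang.management API can report whether -Xnocompressedrefs was passed and how full the Java heap is. The sketch below is minimal, and the class name is hypothetical. It reports only on the JVM it runs in. Its non-heap figure covers only the memory pools the JVM exposes through MXBeans, not all native memory, so treat it as a rough indicator rather than a native OOM diagnosis.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryUsage;
import java.util.List;

// Hypothetical diagnostic: reports on the JVM it runs in.
public class HeapVsNativeCheck {

    // True if the option appears verbatim among the JVM input arguments.
    public static boolean hasOption(List<String> jvmArgs, String option) {
        return jvmArgs.contains(option);
    }

    // Formats a byte count in whole MB; MemoryUsage reports -1 for an undefined max or init.
    public static String mb(long bytes) {
        return bytes < 0 ? "undefined" : (bytes / (1024 * 1024)) + " MB";
    }

    public static void main(String[] args) {
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("-Xnocompressedrefs passed: " + hasOption(jvmArgs, "-Xnocompressedrefs"));

        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        MemoryUsage heap = memory.getHeapMemoryUsage();
        System.out.println("Java heap used/committed/max: " + mb(heap.getUsed()) + " / "
                + mb(heap.getCommitted()) + " / " + mb(heap.getMax()));

        // Non-heap pools (class metadata, JIT code, ...) are only part of native memory use.
        MemoryUsage nonHeap = memory.getNonHeapMemoryUsage();
        System.out.println("Non-heap used: " + mb(nonHeap.getUsed()));
    }
}
```

If used approaches max shortly before the failure, that points to the Java heap exhaustion described in the javacore, not to the native case that -Xnocompressedrefs addresses.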

For more information about compressed references, see the document below.

https://www.ibm.com/support/knowledgecenter/SSYKE2_7.0.0/com.ibm.java.win.70.doc/diag/understanding/mm_compressed_references.html


Thanks for the replies, Daniel and Donald.

I would suggest contacting support for this. The solution mentioned above is intended to fix a native OutOfMemoryError, whereas your case is Java heap exhaustion. If possible, collect several javacores over the period when the memory spike is observed, so that support can look at what might be causing the heap exhaustion. A heap dump (.phd file) would also be useful if one is generated, along with the LQE logs from the time the problem occurred.
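If taking the javacores by hand during the spike is awkward, a small helper running inside the server JVM could request one per interval. This is a minimal sketch with hypothetical class names, method names and interval values. It assumes an IBM J9/OpenJ9 JVM, where the IBM-specific class com.ibm.jvm.Dump provides a static JavaDump() method. That method is called through reflection, so on other JVMs the code still compiles and runs but only logs Java heap usage.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;
import java.lang.reflect.Method;

// Hypothetical helper meant to run inside the server JVM during a memory spike.
public class PeriodicJavacores {

    // Asks an IBM J9/OpenJ9 JVM to write a javacore via com.ibm.jvm.Dump.JavaDump().
    // Returns false when that class or method is unavailable (e.g. on other JVMs) or the call fails.
    public static boolean triggerJavacore() {
        try {
            Class<?> dump = Class.forName("com.ibm.jvm.Dump");
            Method javaDump = dump.getMethod("JavaDump");
            javaDump.invoke(null); // static method, so no receiver
            return true;
        } catch (ReflectiveOperationException | SecurityException e) {
            return false;
        }
    }

    // Takes `count` snapshots `intervalMs` apart, logging Java heap usage each time.
    public static int collect(int count, long intervalMs) throws InterruptedException {
        int taken = 0;
        for (int i = 0; i < count; i++) {
            if (i > 0) {
                Thread.sleep(intervalMs);
            }
            MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
            boolean written = triggerJavacore();
            System.out.printf("snapshot %d: heap used %d MB, javacore %s%n",
                    i + 1, heap.getUsed() / (1024 * 1024), written ? "requested" : "not available");
            taken++;
        }
        return taken;
    }

    public static void main(String[] args) throws InterruptedException {
        // Example values only: five snapshots one minute apart.
        collect(5, 60_000);
    }
}
```

In a real server this would run on a background thread so it does not block request processing. The resulting javacores, together with any .phd heap dump and the LQE logs, are what support would need.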