How to implement delta loads using Rational Insight

Marc J. Nehme, Master Certified IT Specialist, Rational Reporting Engineer, IBM Rational Software

Last updated: December 16th, 2013

Rational Insight 1.1.1.3

Introduction

This document describes how to configure IBM Rational Insight’s Extract, Transform, Load (ETL) catalog to implement delta load functionality. A “delta load” is an “ETL” (eg. a job or fact build) that processes only the data that has changed since the last successful ETL run. Insight runs ETL jobs that extract data from specified data sources and load that data into the Insight data warehouse database which is used for reporting purposes. Typically, these ETL jobs are run on a daily basis. Rather than perform a full and complete load of your data each day, which can cause performance issues, it is a best practice to ETL only the delta between your data source and the Insight data warehouse.

Using IBM Cognos Data Manager as the ETL mechanism, Rational Insight provides “out of the box” ETL jobs that already have the delta load functionality implemented for solutions such as IBM Rational ClearQuest and IBM Rational RequisitePro. There are also Rational solutions that provide the Insight ETL jobs with the delta load functionality, such as IBM Rational Team Concert and IBM Rational Quality Manager. However, it is common to configure Insight to extract data from other IBM or third party tools where a user must define the ETL jobs and implement the delta load functionality.

This example will demonstrate how to implement delta load functionality in Rational Insight. In my example, I will be using a Microsoft Excel spreadsheet as the data source, however all of the concepts and techniques described are applicable to any data source. In my scenario, I am using Rational Insight v1.1.1.3 with IBM DB2 v9.7 as the database vendor and Microsoft Windows Server 2008 R2 Enterprise Edition (64-bit) as the operating system.

Prerequisites

- You have created tables of data in an Excel spreadsheet and added that spreadsheet as a data source on your ETL server (see steps 1 & 2 of Integrating a Microsoft Excel spreadsheet with Rational Insight)

- Define your spreadsheet (or custom data source) as a new connection in Data Manager (see step 2 of Integrating Rational Insight with HP Quality Center and other third party tools)

- You have existing fact builds that you created to extract data from the Excel spreadsheet and have successfully loaded that data into the Insight data warehouse (see steps 3 & 4 of Integrating Rational Insight with HP Quality Center and other third party tools)

Scenario

An organization is using a Microsoft Excel spreadsheet to capture some of their lifecycle data (eg. quality metrics). They have configured Rational Insight to ETL that Excel data into the Insight data warehouse on a nightly basis. They realize that the ETLs are taking an extended period of time to complete each night and are looking for ways to improve performance. After consulting with IBM, the customer decides that will configure Insight to implement delta load functionality for their Excel ETL job.

Note: In this scenario, I will demonstrate how to implement a delta load for the “Test Case” record type. You can apply these same techniques to other record types.

Before beginning, you need to understand the functions and variables that will be used in this documentation:

Functions

Function | Description |

|---|---|

| GetNFLastETLSuccessDateTime () | Retrieves the date and time of the last successful ETL run from the Insight data warehouse |

| GetSysDate() | Retrieves the current date and time from the Insight ETL server |

| SetNFETLStatusDateTime () | Records the data and time of the successful ETL run in the Insight data warehouse |

*These functions are provided by Insight and located in the “Functions” section of the ETL catalog:

Variables

Variable | Description |

|---|---|

| $DW | The name of the schema that delineates the data mart portion of the warehouse (eg. RIDW) |

| $ODS | The name of the schema that delineates the Operational Data Store which contains the latest snapshot of your data (eg. RIODS) |

| $MODIFIED_SINCE | The date and time of the last successful ETL run from the Insight data warehouse |

| $ETL_START_TIME | The current date and time of the Insight ETL server |

| $RESOURCEGROUP_ID | The unique ID of your data source |

| $DeltaLoad | Boolean flag to determine if the ETL should process a delta load or a full load |

| $RESULT | The result variable name of a fact build |

In my current environment, I have three fact builds (T_Project, T_TestCase, T_User) and one jobstream (Tests_ODS). Each time this is being run, the ETL is processing all of the rows (full load).

- The first step is to modify the “Tests_ODS” jobstream

- Right-click on “Tests_ODS” and select “Properties”

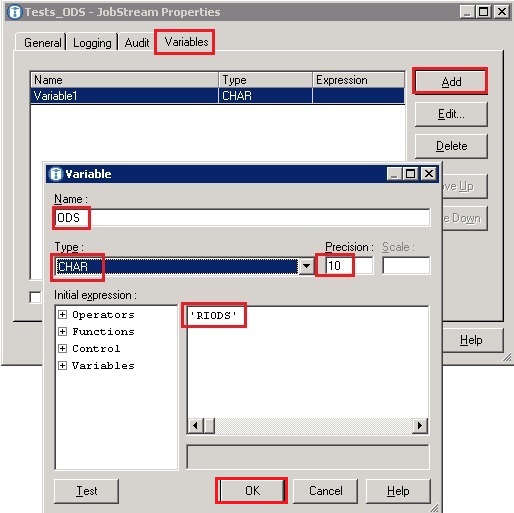

- Select the “Variables” tab then select “Add”. A new window will appear. Map your new variable name “ODS” to “RIODS”, set “Type” to “CHAR” and “Precision” to “10”. Ensure your window looks like the below screenshot and select “OK”.

- Repeat the sequence in step b to set the remaining variables and parameters as shown below. When complete, select “OK”

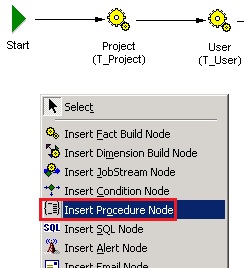

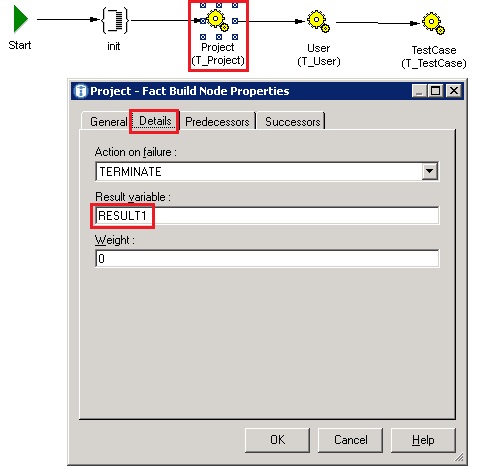

- In the workspace section, Right-click anywhere in the whitespace and select “Insert Procedure Node”. This will turn your mouse arrow into a cross. Click anywhere in the whitespace to place your procedure node.

- On the “General” tab, provide a “Business Name” (eg. “init”)

- Select the “Action” tab and enter the code shown below to set the “MODIFIED_SINCE” and “ETL_START_TIME” variables

- Select the “Predecessors” tab and select the “Start” node and deselect all other nodes.

- Select the “Successors” tab and select the first fact build in your jobstream (in my case, “Project”) and deselect all other nodes. Select “OK”.

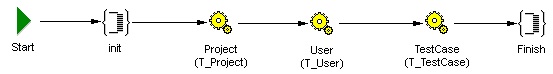

- This places the “init” procedure node in the correct position of your “Tests_ODS” jobstream

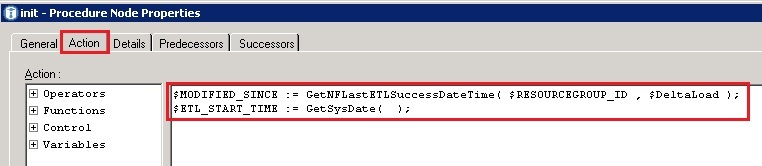

- Next, confirm that each one of your fact builds has a unique “Result variable” name specified. To do that, double-click on each one of your fact builds and select the “Details” tab. Assign each fact build a unique variable name. I recommend making them sequential as they are designed in the jobstream. In my example, my “T_Project” is the first fact build in my sequence so I set the “Result variable” name to “RESULT1”:

- Create a new procedure node as you did in step “d” and provide a business name (eg. “Finish”)

- Select the “Action” tab and enter the code shown below. This stores the date and start time of the current ETL job if all the individual fact builds succeeded.

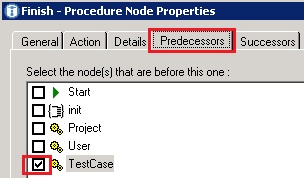

- Select the “Predecessors” tab and select the last fact build in your jobstream (in my example, it is the “TestCase”) and deselect all other nodes.

- Select the “Successors” tab and ensure that all the nodes are deselected, then select “OK”.

- Your jobstream should now look something like this:

- Create “DeltaLoad” and “FullLoad” JobStreams

- First, I will create the “DeltaLoad” JobStream. Create a new JobStream in the same directory as your current “Tests_ODS” JobStream by right-clicking on your folder (eg. “Tests”) and selecting “Insert JobStream”

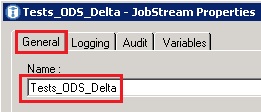

- On the “General” tab, select a name for the new JobStream (eg. “Tests_ODS_Delta”)

- Select the “Variables” tab and create a new variable as you did in Step 1b. Select “Add” and set the “Name” to “DeltaLoad” with “Type” as “Boolean” and “Expression” set to “True”. Once completed, select “OK” and you should see this:

- Select “OK” to close the “JobStream” properties window and you should now see just the “Start” node in your workspace

- Next, insert a JobStream node into your new JobStream “Tests_ODS_Delta”. In your workspace, right-click anywhere in the whitespace and select “Insert JobStream”

- Click anywhere in the whitespace to position your new JobStream node. This will take you to the “General” tab of your JobStream Properties window. Set the “Business name” to your original JobStream “Tests_ODS”. Then, select the ellipsis next to “Associated JobStream” and select your original JobStream. In my case, this is “Tests_ODS”. Then, select “OK”.

- Select the “Predecessors” tab and select the “Start” node as this should be the only option. Then, select “OK”

- Now, create the “FullLoad” JobStream. Repeat steps 2a through 2g however, this time, in step b, name the JobStream “Tests_ODS_Full” and in step c, set your “DeltaLoad” variable to “False”. Your catalog should now look like this:

- Modify the SQL queries of your fact builds to enable delta loading. I will demonstrate how to do this with the “T_TestCase” fact build however the same technique can be applied to all other fact builds.

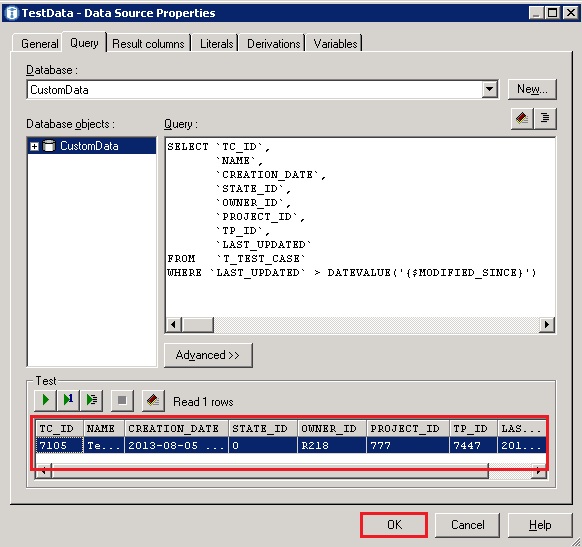

- In the “Tests” folder directory, highlight the “T_TestCase” fact build. In the workspace to the right, double-click on the data source properties (in my case, named “TestData”)

- Select the “Query” tab. You need to make 2 changes to the original query.

- Include the “LAST_UPDATED” column in the Select query.

- Add a “WHERE” clause so the ETL can do a comparison and only process the records (eg. Test Cases) whose “LAST_UPDATED” date is greater than the date of the last successful Insight ETL run.

- Test your query to validate your changes

- Select the green play button with the “1” next to it to test one row of data

- A window will appear asking for you to supply a value for the “MODIFIED_SINCE” variable. In my example, I input “2013-08-12”. After inputting a date, select “OK”.

- If the test is successful, you will see one row of data display. Select “OK” to close this window.

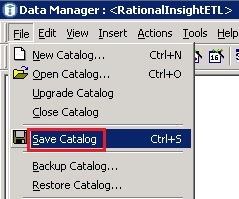

- Modify your other fact build SQL queries to enable delta load functionality and save your catalog

- Run your Delta Load JobStream

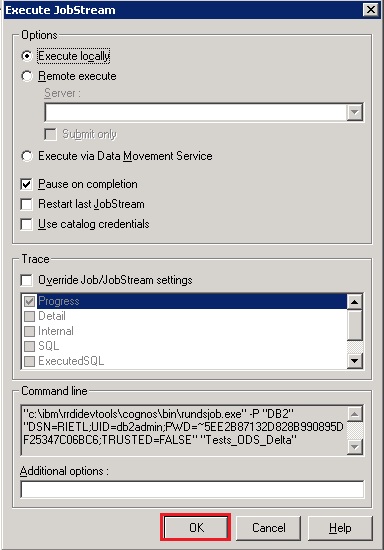

- Right-click on the “Tests_ODS_Delta” JobStream and select “Execute”

- An “Execute JobStream” window will appear. Select “OK”.

Note: Your schema names (“ODS” & “DW”) may be different. Check with your Database Administrator to obtain your schema names that are set for your Insight data warehouse. I set my “RESOURCEGROUP” and “RESOURCEGROUP_ID” variables to “TestData” but you can use whatever name you choose. The “Connection” expression must be the name that you defined for your Excel (or other data source) connection in Data Manager as mentioned in step 2 of the Prerequisites above. The “MODIFIED_SINCE” and “ETL_START_TIME” variables are intentionally left blank.

Note: You should still see a cross icon in place of your mouse arrow. To go back to using your regular mouse pointer at any time, select the arrow icon from the Data Manager tool bar. With your mouse arrow, you can drag and move the procedure and other icons in the workspace by pressing and holding on the icon and then moving the mouse.

Note: I also set the “T_User” fact build to “RESULT2” and the “T_TESTCASE” fact build to “RESULT3”. Follow a similar pattern for all your fact builds. Now that I have set the initialization procedure and labeled all fact builds correctly, the next step is to create the “Finish” procedure.

Your JobStream should now look like this:

Note: In order for the delta load to work, the ETL needs to compare the last successful ETL date with the last time a record was updated in the data source. You need to identify the data item in your data source that captures the last time each record was updated and include it in your query. In my example, I have a column in Excel named “LAST_UPDATED” for each Test Case record.

Note: The WHERE clause syntax will depend on what functions the data source supports. My example supports an Excel data source, however you will need to implement the correct function(s) to properly cast the two dates so you are comparing “apples to apples”. This will depend on the form of the date in your data source as well. For example, if your data source is an Oracle database, your WHERE clause could look like this: WHERE TO_DATE(LAST_UPDATED) >= TO_DATE(‘{$MODIFIED_SINCE}’,’yyyy-mm-dd hh24:mi:ss’)

Note: This is normal behavior when testing a fact build individually. You can input a date that simulates your last successful ETL or any valid date you choose as long as the syntax matches what is stored in your data warehouse.

Note: Once the ETL process completes, review your Data Manager logs to verify that all the fact builds completed successfully. By default, the Data Manager logs are located in InsightInstallDir/cognos/datamanager/log. Once you confirm that the Delta load job is running successfully, you can publish it to the Report server where it can be scheduled it to run. See Publishing a Job as a Data Movement Task for more information.

Summary

In this document, I demonstrated how to configure IBM Rational Insight’s Extract, Transform, Load (ETL) catalog to implement delta load functionality. Though I used a Microsoft Excel spreadsheet as my example data source, the concepts and techniques described here are applicable to any data source.

Copyright © 2014 IBM Corporation

Yes

Yes