CLM Clustering Configurations with WebSphere eXtreme Scale

Note: This 2012 article applies to the 4.0 release of the Rational solution for CLM. For current information about clustering and high availability, see Deploying for high availability in the Rational solution for CLM Information Center.

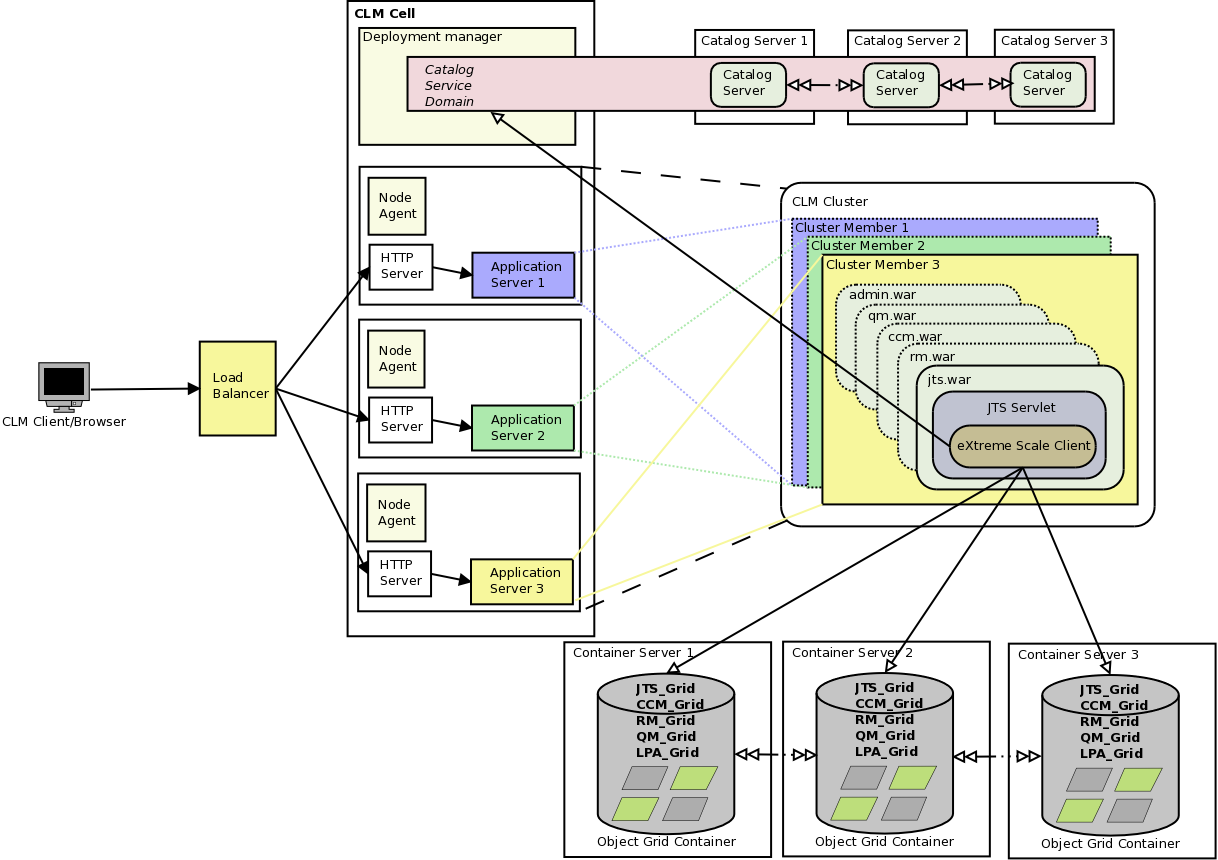

This article details the steps required to set up the CLM clustering feature to use external WebSphere eXtreme Scale catalog and container services.

The default configuration for setting up a WAS cluster, as described in the CLM 4.0 information center, uses embedded versions of the WebSphere eXtreme Scale (WXS) components. In this configuration, the WXS container server runs embedded inside the CLM application server, and the catalog service domain is embedded inside the WebSphere Application Server – Network Deployment Edition (WAS ND) deployment manager and node agents. This approach has the following advantages:

- Easy set up: Integrates the WXS configuration with the WAS ND configuration and deployment manager

- Fewer required resources: Separate servers do not need to be hosted for WXS components

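- Redundancy: Built into the configuration

An external WXS configuration, in which the catalog and container services run outside the CLM application servers, has the following advantages: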

- Multiple connections: Multiple application servers and multiple application connections to a single WXS container server deployment, allowing for centralized configuration and performance tuning

- Appliance usage: This configuration enables the usage of a WebSphere DataPower XC10 appliance

- Performance benefit: Grid management can be offloaded to another node

- Reuse: Existing WXS server deployments can be leveraged

An external WXS configuration has the following disadvantages:

- Resources: Set up may require additional servers and daemons to be run

- No built-in redundancy: Multiple redundant container and catalog servers need to be set up

- Complexity: Set up may require the creation of XML and property files, and command line start-up scripts

- Lack of WAS security integration: More manual security configuration is required

Embedded WXS Configuration

The CLM 4.0 information center instructions cover the embedded configuration and do not require any external server configuration. It is therefore recommended that the embedded configuration be set up first, before any attempt is made to externalize WXS components.

External WXS Catalog Server

Using an external WXS catalog server requires registering one or more external catalog servers in the WAS ND deployment manager (one that has WXS augmentation). First, one or more external catalog servers need to be started, either on stand-alone WXS installations or on an installation that is integrated with WAS ND (even though the catalog server is started outside of the WAS environment). If more than one server is started for redundancy, a catalog service domain also needs to be configured.

In this example we will configure an external catalog service domain called clm.example.org with two catalog servers (cs1 and cs2), and then subsequently register them with WAS ND to be used by CLM. The two servers are wxs.example.org and wxs2.example.org.

- On wxs.example.org create a file called cs1cat.properties with the following contents:

listenerHost=wxs.example.org

listenerPort=2809

domainName=clm.example.org

catalogClusterEndPoints=cs1:wxs.example.org:6801:6802,cs2:wxs2.example.org:6801:6802

- On wxs2.example.org create a file called cs2cat.properties with the following contents:

listenerHost=wxs2.example.org

listenerPort=2809

domainName=clm.example.org

catalogClusterEndPoints=cs1:wxs.example.org:6801:6802,cs2:wxs2.example.org:6801:6802

- The servers must be started in parallel, because each catalog server waits during start-up for the other members of the catalog service domain. Start the catalog server cs1 on wxs.example.org by executing the following command:

startOgServer.sh cs1 -serverProps cs1cat.properties

and, at the same time, start the catalog server cs2 on wxs2.example.org:

startOgServer.sh cs2 -serverProps cs2cat.properties

After some time the catalog servers will finish the start-up script and return to the command prompt.

- Once the catalog service domain is started, the servers can be added to the WAS ND deployment manager (the one that is WXS augmented).

- In the WAS ND deployment manager, go to “System Administration->WebSphere eXtreme Scale->Catalog service domains”.

- Click the “New…” button to add a new catalog service domain called “clm.example.org”.

- Create two catalog servers by clicking the “New” button twice. Enter the listener hosts and ports of cs1 and cs2 in the “Remote server” and “Listener Port” fields.

Figure 2: External Catalog Service Domain

Note that the catalog service domain is enabled as the default. The default catalog service domain is the one used by the Jazz applications.

- Click “OK” to save the configuration to the master configuration.

Clicking on “clm.example.org” in “System Administration->WebSphere eXtreme Scale->Catalog service domains” should reveal the two Catalog Server Endpoints with a green status.
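
The two property files created at the start of this procedure differ only in the listenerHost value, so they can be generated from one description of the catalog service domain. The following is a minimal sketch, not part of the original procedure; the function name and its parameters are hypothetical, and the port numbers are simply the ones used in this example.

```python
def catalog_server_properties(server_name, servers, domain_name,
                              listener_port=2809, client_port=6801, peer_port=6802):
    """Return the text of the properties file for one catalog server.

    servers maps each catalog server name to its host name
    (hypothetical helper, values taken from the example in this article).
    """
    # Every catalog server lists the full set of end points, itself included.
    end_points = ",".join(
        f"{name}:{host}:{client_port}:{peer_port}" for name, host in servers.items()
    )
    lines = [
        f"listenerHost={servers[server_name]}",
        f"listenerPort={listener_port}",
        f"domainName={domain_name}",
        f"catalogClusterEndPoints={end_points}",
    ]
    return "\n".join(lines) + "\n"


servers = {"cs1": "wxs.example.org", "cs2": "wxs2.example.org"}
for name in servers:
    print(f"# {name}cat.properties")
    print(catalog_server_properties(name, servers, "clm.example.org"))
```

Generating the files from one place keeps the catalogClusterEndPoints value identical on every server, which is easy to get wrong when the files are edited by hand.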

External WXS Container Server

An external container server can be used with a WAS embedded catalog server or with an external catalog server. To keep things simple and get the basics working, transport security is disabled in this article; it must be disabled when connecting to a WAS embedded catalog server on WAS ND 8.0. Information on how to set up transport security and authentication can be found in the WXS information center.

In order for CLM to use an external container instead of the embedded one, a few properties need to be added to each CLM cluster node’s application server JVM system properties (in the same place where the JAZZ_HOME property is defined, not the application .properties files):

com.ibm.team.repository.cluster.createLocalContainer = false

com.ibm.team.jfs.app.distributed.objectgrid.AbstractMapBasedObject.useAgents = false

Starting an external WXS Container server will require a few configuration files for the grids used by the CLM applications. A unique grid name for each instance of a CLM application is required. It’s a good idea to qualify the grid name with some meaningful prefix or suffix to distinguish grids being used by different application instances.

The names of the grids must be exactly the same as the names set in the com.ibm.team.repository.cluster.gridName property in the CLM applications' property files. In this example, we will use the following grid names for the CLM applications: Dev_Admin_Grid, Dev_CCM_Grid, Dev_JTS_Grid, Dev_QM_Grid, and Dev_RM_Grid.
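
Because a grid name that differs by a single character will not match, it can help to compare the names configured in the applications with the names deployed on the container servers before starting anything. The following sketch is an illustration only: the function is hypothetical, it assumes that names are compared exactly (including case), and both lists have to be collected by hand from the property files and the XML descriptors.

```python
def check_grid_names(app_grid_names, deployed_grid_names):
    """Compare application grid names with deployed grid names.

    Returns (missing, case_mismatches): names with no deployed grid at all,
    and (wanted, deployed) pairs that differ only in letter case.
    """
    deployed = set(deployed_grid_names)
    deployed_by_lower = {name.lower(): name for name in deployed}
    missing = []
    case_mismatches = []
    for name in app_grid_names:
        if name in deployed:
            continue  # exact match, nothing to report
        near = deployed_by_lower.get(name.lower())
        if near is not None:
            case_mismatches.append((name, near))
        else:
            missing.append(name)
    return missing, case_mismatches


# Hypothetical input: one name has the wrong case, one grid is not deployed.
missing, case_mismatches = check_grid_names(
    ["Dev_CCM_Grid", "Dev_QM_Grid", "Dev_RM_Grid"],
    ["Dev_CCM_Grid", "Dev_QM_grid"],
)
print("missing:", missing)
print("case mismatches:", case_mismatches)
```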

Create two files – objectGrid.xml and ogDeployment.xml – using these grid names. Modify the contents provided below with the grid names used in the CLM deployment. Some tuning may also be required.

objectGrid.xml

<objectGridConfig xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://ibm.com/ws/objectgrid/config ../objectGrid.xsd" xmlns="http://ibm.com/ws/objectgrid/config">

<objectGrids>

<objectGrid name="Dev_Admin_Grid">

<backingMap name="jazz..*" template="true" lockStrategy="PESSIMISTIC" copyMode="NO_COPY" lockTimeout="4"/>

</objectGrid>

<objectGrid name="Dev_CCM_Grid">

<backingMap name="jazz..*" template="true" lockStrategy="PESSIMISTIC" copyMode="NO_COPY" lockTimeout="4"/>

</objectGrid>

<objectGrid name="Dev_QM_Grid">

<backingMap name="jazz..*" template="true" lockStrategy="PESSIMISTIC" copyMode="NO_COPY" lockTimeout="4"/>

</objectGrid>

<objectGrid name="Dev_RM_Grid">

<backingMap name="jazz..*" template="true" lockStrategy="PESSIMISTIC" copyMode="NO_COPY" lockTimeout="4"/>

</objectGrid>

<objectGrid name="Dev_JTS_Grid">

<backingMap name="jazz..*" template="true" lockStrategy="PESSIMISTIC" copyMode="NO_COPY" lockTimeout="4"/>

</objectGrid>

</objectGrids>

</objectGridConfig>

ogDeployment.xml

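<deploymentPolicy xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://ibm.com/ws/objectgrid/deploymentPolicy ../deploymentPolicy.xsd" xmlns="http://ibm.com/ws/objectgrid/deploymentPolicy">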

<objectgridDeployment objectgridName="Dev_Admin_Grid">

<mapSet name="jazzmaps" numberOfPartitions="17" maxSyncReplicas="1" developmentMode="true" replicaReadEnabled="true">

<map ref="jazz..*"/>

</mapSet>

</objectgridDeployment>

<objectgridDeployment objectgridName="Dev_CCM_Grid">

<mapSet name="jazzmaps" numberOfPartitions="17" maxSyncReplicas="1" developmentMode="true" replicaReadEnabled="true">

<map ref="jazz..*"/>

</mapSet>

</objectgridDeployment>

<objectgridDeployment objectgridName="Dev_QM_Grid">

<mapSet name="jazzmaps" numberOfPartitions="17" maxSyncReplicas="1" developmentMode="true" replicaReadEnabled="true">

<map ref="jazz..*"/>

</mapSet>

</objectgridDeployment>

<objectgridDeployment objectgridName="Dev_RM_Grid">

<mapSet name="jazzmaps" numberOfPartitions="17" maxSyncReplicas="1" developmentMode="true" replicaReadEnabled="true">

<map ref="jazz..*"/>

</mapSet>

</objectgridDeployment>

<objectgridDeployment objectgridName="Dev_JTS_Grid">

<mapSet name="jazzmaps" numberOfPartitions="17" maxSyncReplicas="1" developmentMode="true" replicaReadEnabled="true">

<map ref="jazz..*"/>

</mapSet>

</objectgridDeployment>

</deploymentPolicy>
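
Both descriptors repeat the same block once per grid, so they can be produced from a single list of grid names. The sketch below is one possible way to do that and is not part of the original instructions. The function names are hypothetical, the attribute values are copied from the listings above, and the XML namespace declarations are the ones normally found in WXS descriptor files; they should be checked against the schema files of the installed WXS version.

```python
from xml.sax.saxutils import quoteattr

XSI_NS = "http://www.w3.org/2001/XMLSchema-instance"
CONFIG_NS = "http://ibm.com/ws/objectgrid/config"
POLICY_NS = "http://ibm.com/ws/objectgrid/deploymentPolicy"


def object_grid_xml(grid_names):
    """Return objectGrid.xml text with one objectGrid element per grid name."""
    grids = []
    for name in grid_names:
        grids.append(
            f"    <objectGrid name={quoteattr(name)}>\n"
            '      <backingMap name="jazz..*" template="true" lockStrategy="PESSIMISTIC"'
            ' copyMode="NO_COPY" lockTimeout="4"/>\n'
            "    </objectGrid>\n"
        )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<objectGridConfig xmlns:xsi="{XSI_NS}"\n'
        f'    xsi:schemaLocation="{CONFIG_NS} ../objectGrid.xsd"\n'
        f'    xmlns="{CONFIG_NS}">\n'
        "  <objectGrids>\n" + "".join(grids) + "  </objectGrids>\n"
        "</objectGridConfig>\n"
    )


def deployment_policy_xml(grid_names, number_of_partitions=17, max_sync_replicas=1):
    """Return ogDeployment.xml text with one objectgridDeployment per grid name."""
    deployments = []
    for name in grid_names:
        deployments.append(
            f"  <objectgridDeployment objectgridName={quoteattr(name)}>\n"
            f'    <mapSet name="jazzmaps" numberOfPartitions="{number_of_partitions}"'
            f' maxSyncReplicas="{max_sync_replicas}" developmentMode="true"'
            ' replicaReadEnabled="true">\n'
            '      <map ref="jazz..*"/>\n'
            "    </mapSet>\n"
            "  </objectgridDeployment>\n"
        )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<deploymentPolicy xmlns:xsi="{XSI_NS}"\n'
        f'    xsi:schemaLocation="{POLICY_NS} ../deploymentPolicy.xsd"\n'
        f'    xmlns="{POLICY_NS}">\n' + "".join(deployments) + "</deploymentPolicy>\n"
    )


GRID_NAMES = ["Dev_Admin_Grid", "Dev_CCM_Grid", "Dev_JTS_Grid", "Dev_QM_Grid", "Dev_RM_Grid"]
print(object_grid_xml(GRID_NAMES))
print(deployment_policy_xml(GRID_NAMES, number_of_partitions=17))
```

Using one list for both files guarantees that every grid declared in objectGrid.xml also has a deployment entry with an identically spelled name.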

It’s usually desirable to run more than one container server for the sake of redundancy, so that if one fails the others can assume the load. Two or more servers are enough to ensure a high degree of availability, but the number of container servers affects the value to choose for the “numberOfPartitions” mapSet attribute in the ogDeployment.xml file. The formula is:

(numberOfPartitions*(maxSyncReplicas+1))/numberOfContainerServers ≈ 10, where numberOfPartitions ∈ PRIME

The attribute maxSyncReplicas is set to “1” because this ensures 2x redundancy of all objects within the set of container servers. This also implies that even if there are more than 2 container servers, there is still only 2x redundancy. Testing has found that setting this value higher has negative performance implications. As a consequence, if 2 nodes fail simultaneously, some objects and their replicas could be lost no matter how many container servers there are. This is not fatal to the Jazz applications: the user would need to retry the operation, which would reinitialize the objects from the database, a perfectly valid operation. The risk of two container servers failing simultaneously is also relatively small if container servers are deployed in a resource-independent fashion.

If the container servers are sufficiently robust, losing a container server and having the client retry an operation may be a satisfactory outcome in some situations. In this case, maxSyncReplicas could be set to “0”, so that only one copy of an object is kept on the entire grid (across all container servers).

Increasing the number of containers has performance implications, as it allows the object grid to be spread across multiple servers, thereby decreasing the load on a given container server. Nevertheless, too many nodes could cause a high degree of chattiness due to replica synchronization (if enabled with maxSyncReplicas set to “1”). It is therefore advisable to size the container servers appropriately according to the WXS information center instructions, and ensure that each container server can handle the entire load of the object graph on its own. The number of container servers can then be scaled if it is deemed to have a performance benefit. (It is possible to size so that two servers handle the entire load if cost and risk tolerances permit. For example, if the risk of losing 50% is acceptable, then a 4-server configuration where 2 servers are required to handle the full load would be appropriate. This could result in cost savings, using 2 servers vs. 1 larger server.)

Given the number of replicas (“1”) and the number of container servers, the formula above results in the following table:

| Container servers (numberOfContainerServers) | Number of Partitions (numberOfPartitions) |
|---|---|
| 2 | 11 |
| 3 | 17 |
| 4 | 19 |
| 5 | 23 |
| 6 | 29 |
| 7 | 37 |
| 8 | 41 |
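
The table can be checked against the formula with a few lines of arithmetic. The sketch below evaluates the left-hand side of the formula for each row, with maxSyncReplicas set to 1, and confirms that each partition count is prime. The function names are hypothetical, and reading the result as the average number of shards (primaries plus replicas) per container server is an interpretation, not a statement from the original text.

```python
def is_prime(n):
    """Return True if n is a prime number (trial division, fine for small n)."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def shards_per_server(number_of_partitions, max_sync_replicas, number_of_container_servers):
    """Left-hand side of the formula: (partitions * (replicas + 1)) / servers."""
    return number_of_partitions * (max_sync_replicas + 1) / number_of_container_servers


# Rows of the table: container servers -> number of partitions.
TABLE = {2: 11, 3: 17, 4: 19, 5: 23, 6: 29, 7: 37, 8: 41}

for servers, partitions in TABLE.items():
    ratio = shards_per_server(partitions, 1, servers)
    print(f"{servers} servers, {partitions} partitions: "
          f"{ratio:.2f} per server, prime={is_prime(partitions)}")
```

For the values in the table the result stays within about 1.5 of the target of 10, for example 11.00 for 2 servers and 9.50 for 4 servers. The same function can be used to try other combinations, such as a maxSyncReplicas value of 0.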

Connecting to a WAS ND embedded catalog server

In order to keep things simple, CORBA transport security is made optional in the WAS ND deployment manager so that the external container server can connect to the catalog server without any complicated security setup. In the WAS ND deployment manager console, make the following changes:

- Security -> Global Security -> RMI/IIOP security -> CSIv2 inbound communications: Change Transport from SSL-Required to SSL-Supported

- Security -> Global Security -> RMI/IIOP security -> CSIv2 outbound communications: Change Transport from SSL-Required to SSL-Supported

Now it is possible to start one or more container servers. As an example, we will start container servers on wxs.example.org and wxs2.example.org. We assume that the catalog service domain is configured on the deployment manager and two node agents. The deployment manager is clmdm.example.org, and the node agents are clmnode2.example.org and clmnode3.example.org. In the case of WAS ND embedded catalog servers, the ports the catalog servers are listening on are the bootstrap addresses (BOOTSTRAP_ADDRESS) of the deployment manager and the node agents. This information can be found in the deployment manager console.

For Deployment Manager:

- System Administration -> Deployment manager -> Ports -> BOOTSTRAP_ADDRESS (Typically 9809)

For Node agents:

- System Administration -> Node agents -> nodeagent -> Ports -> BOOTSTRAP_ADDRESS (Typically 2810)

Start the container servers using the ports and XML files modified above:

On wxs.example.org:

startOgServer.sh containerServer1 -objectGridFile objectGrid.xml -deploymentPolicyFile ogDeployment.xml -catalogServiceEndPoints clmdm.example.org:9809,clmnode2.example.org:2810,clmnode3.example.org:2810

On wxs2.example.org:

startOgServer.sh containerServer2 -objectGridFile objectGrid.xml -deploymentPolicyFile ogDeployment.xml -catalogServiceEndPoints clmdm.example.org:9809,clmnode2.example.org:2810,clmnode3.example.org:2810
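
The catalog service end points have to reach the script as a single argument, so the list must be joined with commas and must not contain spaces. The sketch below builds the argument list for one container server from (host, port) pairs. The helper is hypothetical, and the port numbers are placeholders based on the typical values mentioned above; the real values are the BOOTSTRAP_ADDRESS ports shown in the deployment manager console.

```python
import shlex


def container_start_command(server_name, catalog_end_points,
                            object_grid_file="objectGrid.xml",
                            deployment_policy_file="ogDeployment.xml"):
    """Return the startOgServer.sh argument list for one container server.

    catalog_end_points is a list of (host, port) pairs.
    """
    # Join with commas only: a space would split the value into several arguments.
    end_points = ",".join(f"{host}:{port}" for host, port in catalog_end_points)
    return [
        "startOgServer.sh", server_name,
        "-objectGridFile", object_grid_file,
        "-deploymentPolicyFile", deployment_policy_file,
        "-catalogServiceEndPoints", end_points,
    ]


# Placeholder ports; look up the actual BOOTSTRAP_ADDRESS values in the console.
END_POINTS = [
    ("clmdm.example.org", 9809),
    ("clmnode2.example.org", 2810),
    ("clmnode3.example.org", 2810),
]
print(shlex.join(container_start_command("containerServer1", END_POINTS)))
```

The returned list can be passed directly to a process launcher, or printed as shown here and pasted into a shell on the container server host.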

External WXS Catalog and Container Server

To keep things simple and in order to get the basics working, transport security will be kept disabled (the default in this configuration). Information on how to set up transport security and authentication can be found in the WXS information center.

These instructions assume that the external catalog server uses the listener hosts and ports as specified in External WXS Catalog Server. In this case, it is quite simple. The container servers only need to be started:

On wxs.example.org:

startOgServer.sh containerServer1 -objectGridFile objectGrid.xml -deploymentPolicyFile ogDeployment.xml -catalogServiceEndPoints wxs.example.org:2809,wxs2.example.org:2809

On wxs2.example.org:

startOgServer.sh containerServer2 -objectGridFile objectGrid.xml -deploymentPolicyFile ogDeployment.xml -catalogServiceEndPoints wxs.example.org:2809,wxs2.example.org:2809
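
If a container server fails to start, one quick thing to rule out is that a catalog server end point is not reachable from the container host. The sketch below is an optional troubleshooting aid that is not part of the original procedure: the function is hypothetical, and a successful TCP connection only shows that something is listening on the port, not that it is a working catalog server.

```python
import socket


def unreachable_end_points(end_points, timeout=3.0):
    """Return the (host, port) pairs that do not accept a TCP connection."""
    failed = []
    for host, port in end_points:
        try:
            # Open and immediately close a plain TCP connection.
            with socket.create_connection((host, port), timeout=timeout):
                pass
        except OSError:
            # Covers refused connections, time-outs and name resolution errors.
            failed.append((host, port))
    return failed


# Example call with the end points used in this section:
# unreachable_end_points([("wxs.example.org", 2809), ("wxs2.example.org", 2809)])
```

An empty result means every listed end point accepted a connection from the host where the check was run.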

WebSphere DataPower XC10

WebSphere DataPower XC10 is a plug-in appliance that provides both catalog servers and container servers that can be used by a CLM cluster. The XC10 also needs the objectGrid.xml and ogDeployment.xml files, as described in its documentation. Beyond that, all that is required is to modify the WAS ND catalog service domain configuration to use the catalog servers hosted on the XC10. Detailed information about how to configure the XC10 can be found in the information center.

Copyright © 2012 IBM Corporation