r14 - 2020-03-12 - 14:37:37 - Main.hhuoYou are here: TWiki >  Deployment Web > DeploymentPlanningAndDesign > PerformanceDatasheetsAndSizingGuidelines > CollaborativeLifecycleManagementPerformanceAndScalabilityReportRationalQualityManager6061Ifx001

Deployment Web > DeploymentPlanningAndDesign > PerformanceDatasheetsAndSizingGuidelines > CollaborativeLifecycleManagementPerformanceAndScalabilityReportRationalQualityManager6061Ifx001

Collaborative Lifecycle Management performance report: Rational Quality Manager 6.0.6.1 iFix001

Collaborative Lifecycle Management performance report: Rational Quality Manager 6.0.6.1 iFix001

Authors: Hongyan Huo

Last updated: May 31, 2019

Build basis: Rational Quality Manager 6.0.6.1 iFix001

Introduction

This report provides the performance benchmark of Rational Quality Manager (RQM) 6.0.6.1-iFix001. New to this release, RQM performance featuring Configuration Management (CM) is evaluated against a larger scale than traditionally tested: the repository under test contains twenty local RQM components and ten million versioned test artifacts1 migrated from 6.0.6-iFix003. During the migration process to 6.0.6.1-iFix001, partitions on the database were also enabled to increase the scalability (refer to upgrade guide, release noteworthy, and the knowledge center for details for partitioning). Summary of the test coverage that is different from the previous standard performance regression report is outlined as below:- The new repository size measured by artifact counts for a Configuration Management (CM) enabled project area is 20 times bigger than traditional performance regression tests, as described in Data volume and shape

- Configuration Management (CM) capabilities, such as browsing artifacts in baselines and switching local configurations, are included in the multi-user performance test workload

- Additional database indexes are explored based on the test load to further improve performance

Disclaimer

The information in this document is distributed AS IS. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer’s ability to evaluate and integrate them into the customer’s operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk. Any pointers in this publication to external Web sites are provided for convenience only and do not in any manner serve as an endorsement of these Web sites. Any performance data contained in this document was determined in a controlled environment, and therefore, the results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment. Performance is based on measurements and projections using standard IBM benchmarks in a controlled environment. The actual throughput or performance that any user will experience will vary depending upon many factors, including considerations such as the amount of multi-programming in the user’s job stream, the I/O configuration, the storage configuration, and the workload processed. Therefore, no assurance can be given that an individual user will achieve results similar to those stated here. This testing was done as a way to compare and characterize the differences in performance between different versions of the product. The results shown here should thus be looked at as a comparison of the contrasting performance between different versions, and not as an absolute benchmark of performance.Summary of results

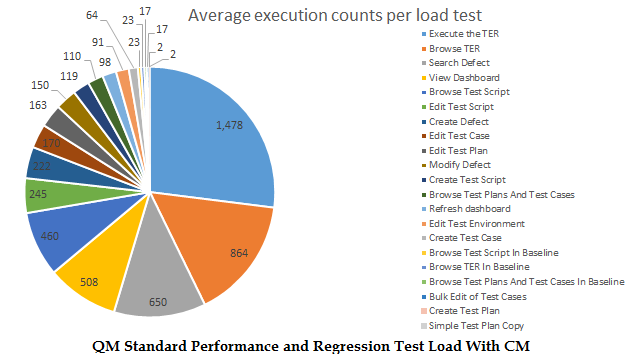

The RQM 6.0.6.1-iFix001 performance benchmark applied an automated test workload which is comprised of 21 test scenarios, as shown in the picture below, with a mixture of stream and baseline operations simulated by 1000 virtual users , see User roles, test cases and workload characterization for detailed description for each test scenario. The 1000 users were staging concurrently for one hour, generating a consistent throughput, a.k.a. page hit rate, of 8.2 pages per second. During the concurrency load, we evaluated page loading for 116 individual pages by collecting their page response times (the smaller page response time, the better performance) and server statistics metric. We observed the following performance characteristics

, see User roles, test cases and workload characterization for detailed description for each test scenario. The 1000 users were staging concurrently for one hour, generating a consistent throughput, a.k.a. page hit rate, of 8.2 pages per second. During the concurrency load, we evaluated page loading for 116 individual pages by collecting their page response times (the smaller page response time, the better performance) and server statistics metric. We observed the following performance characteristics - On the client side

- The concurrency load was carried smoothly and maintained stable during of the entire course of testing

- 96% of pages under test had average response times under 1.5 seconds. A few exceptions are those providing views to large number of artifacts in the configuration, such as Test Case Execution Records (the average page response time for opening the Browse Test Case Execution Record page aka Open TER view in a stream under test is 4.1 seconds under load). They are deemed acceptable given the large size. However, these page performance can be improved via load-based tuning, section performance tunings provides detailed information.

- For the page-by-page performance result for each test scenario, see this section: Detailed performance results.

- On the server side

- The CPU utilization on all servers were minimal

- IBM DB2 server allocated approximately 46G RAM, out of 64G physical RAM in total, to the QM database, with automatic tuning turned on by default. It left a little room for its growth at load peak, yet remained steady co-locating with the other five databases on the same machine under test.

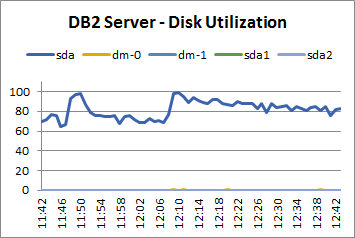

- Disk utilization on the IBM DB2 server was found to be busy due to background services such as Full Text Indexing and Asynchronous migration, however there was minor impact to the test load observed during the test.

- For more details about the system resource please see section Resource utilization and JVM Garbage collection of the test systems.

Topology, network, and data volume

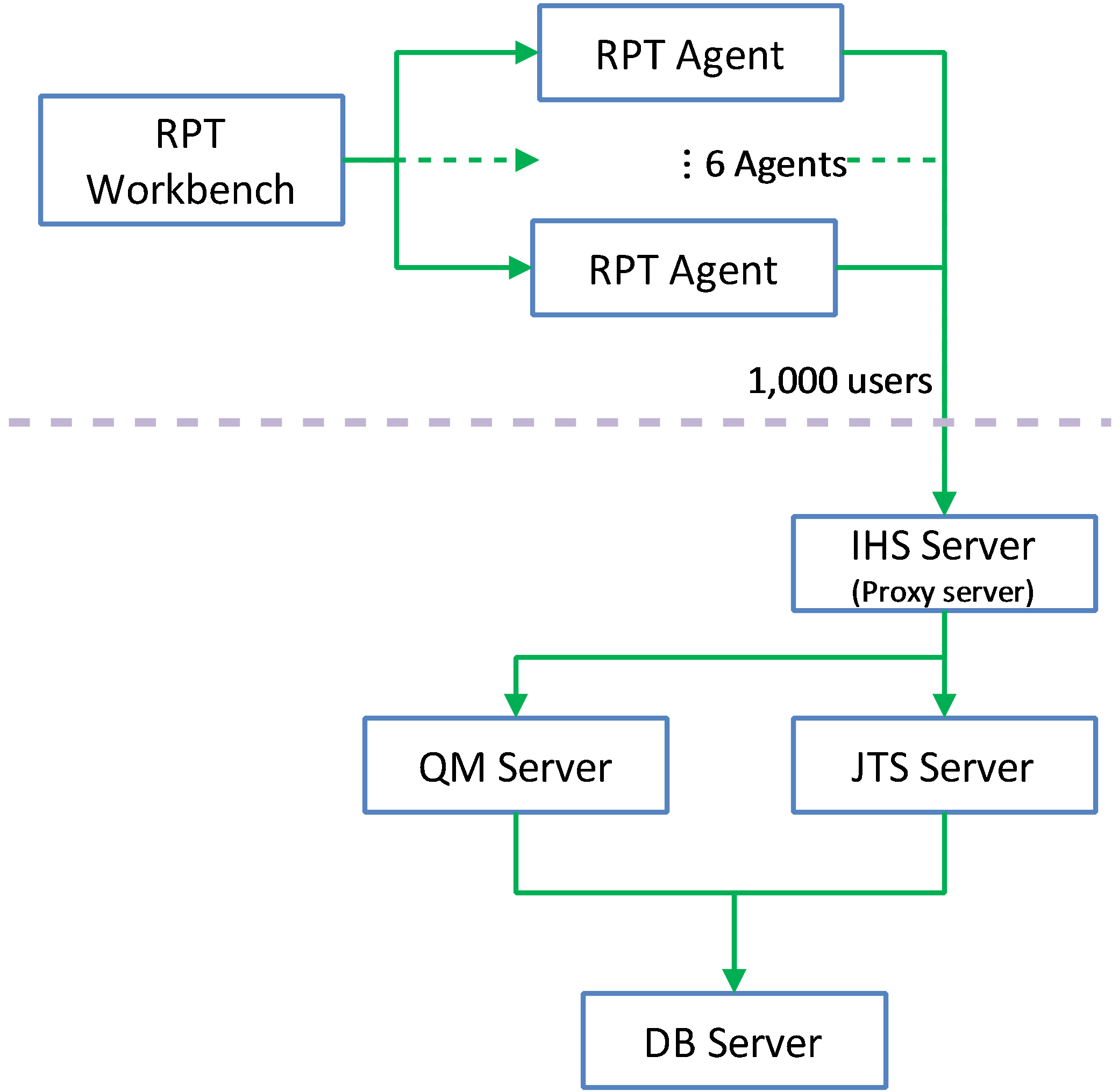

The topology under test is based on Standard Topology (E1) Enterprise - Distributed / Linux / DB2. The specifications of machines under test are listed in the table below. Server tuning details listed in Appendix - Key configuration parameters

The specifications of machines under test are listed in the table below. Server tuning details listed in Appendix - Key configuration parameters

| Function | Number of Machines | Machine Type | CPU / Machine | Total # of CPU vCores/Machine | Memory/Machine | Disk | Disk capacity | Network interface | OS and Version |

|---|---|---|---|---|---|---|---|---|---|

| Proxy Server (IBM HTTP Server and WebSphere Plugin) | 1 | IBM System x3250 M4 | 1 x Intel Xeon E3-1240 3.4GHz (quad-core) | 8 | 16GB | RAID 1 -- SAS Disk x 2 | 299GB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.5 |

| JTS Server | 1 | IBM System x3550 M4 | 2 x Intel Xeon E5-2640 2.5GHz (six-core) | 24 | 32GB | RAID 5 -- SAS Disk x 2 | 897GB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.5 |

| QM Server | 1 | IBM System x3550 M4 | 2 x Intel Xeon E5-2640 2.5GHz (six-core) | 24 | 32GB | RAID 5 -- SAS Disk x 2 | 897GB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.5 |

| Database Server | 1 | IBM System x3650 M4 | 2 x Intel Xeon E5-2640 2.5GHz (six-core) | 24 | 64GB | RAID 5 -- SAS Disk x 2 | 2.4TB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.1 |

| RPT workbench | 1 | IBM System x3550 M4 | 2 x Intel Xeon E5-2640 2.5GHz (six-core) | 24 | 32GB | RAID 5 -- SAS Disk x 2 | 897GB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.4 |

| RPT Agents | 6 | VM image | 4 x Intel Xeon X5650 CPU (1-Core 2.67GHz) | 1 | 2GB | N/A | 30GB | Gigabit Ethernet | Red Hat Enterprise Linux Server release 6.5 |

| Network switches | N/A | Cisco 2960G-24TC-L | N/A | N/A | N/A | N/A | N/A | Gigabit Ethernet | 24 Ethernet 10/100/1000 ports |

Network connectivity

All server machines and test clients are located on the same subnet. The LAN has 1000 Mbps of maximum bandwidth and less than 0.3 ms latency in ping.Data volume and shape

- QM Database size on disk ~ 500 GB

Artifacts distribution by component

The artifacts were created by the in-house datagen utility. For the large scale repository under test in this report, we created total of 10 million artifacts1 that are spread out across 20 unique components, and for each of the component the artifact counts are distributed similarly as below: 1artifact counts are based on the data generation utility which collects the sum from each artifact type visible in the UI.- 50 test plans

- 30,000 test scripts

- 30,000 test cases

- 120,000 test case execution records

- 360,000 test case results

- 3,000 test suites

- 200 test environments

- 600 test phases

- 120 build definitions

- 1,424 execution sequences

- 3,000 test suite execution records

- 15,000 test suite execution results

- 25,000 build records

- each test plan is linked to 600 unique test cases, and 600 unique test scripts

- each test suite contains 15 test cases

Streams and baselines

The initial component, which is the target for the automation test load, contains two streams and two baselines. The rest of the components have only the initial stream created.Miscellaneous artifacts in the applications

There are 5,000 work items (defects) in the CCM project area.Methodology

Rational Performance Tester(RPT) was used to simulate the workload created using the web client. Each user completed a random use case from a set of available use cases. A Rational Performance Tester script is created for each use case. The scripts are organized by pages and each page represents a user action. The work load is role based as each of the areas defined under sequence of actions which are separated into individual user groups within an RPT schedule. The settings of the RPT schedule is shown below:

User roles, test cases and workload characterization

a. User Roles| Configuration | Use role | % of Total | Related Actions |

|---|---|---|---|

| Stream | 98 | ||

| QE Manager | 8 | Test plan create, Browse test plan and test case, Browse test script, Simple test plan copy, Defect search, View dashboard | |

| Test Lead | 19 | Edit Test Environments, Edit test plan, Create test case, Bulk edit of test cases, Full text search, Browse test script, Test Execution, Defect search | |

| Tester | 66 | Defect create, Defect modify, Defect search, Edit test case, Create test script, Edit test script, Test Execution, Browse test execution record | |

| Dashboard Viewer | 5 | View dashboard(with login and logout) | |

| Baseline | 2 | ||

| Baseline Viewer | 2 | Browse Test Case or Test Plan or Test Case Execution Record in baseline |

| Use Role | Percentage of the user role | Sequence of Operations |

|---|---|---|

| QE Manager | 1 | Test plan create:user creates test plan, then adds description, business objectives, test objectives, 2 test schedules, test estimate quality objectives and entry and exit criteria. |

| 26 | Browse test plans and test cases: user browses assets by: View Test Plans, then configure View Builder for name search; open test plan found, review various sections, then close. Search for test case by name, opens test case found, review various sections, then close. | |

| 26 | Browse test script: user search for test script by name, open it, reviews it, then closes. | |

| 1 | Simple test plan copy: user search test plan by name, then select one, then make a copy. | |

| 23 | Defect search: user searches for specific defect by number, user reviews the defect (pause), then closes. | |

| 20 | Refresh Dashboard: user views dashboard | |

| Test Lead | 8 | Edit Test Environment: user lists all test environments, and then selects one of the environments and modifies it. |

| 15 | Edit test plan: list all test plans; from query result, open a test plan for editing, add a test case to the test plan, a few other sections of the test plan are edited and then the test plan is saved. | |

| 4 | Create test case: user create test case by: opening the Create Test Case page, enters data for a new test case, and then saves the test case. | |

| 1 | Bulk edit of test cases: user searches for test cases with root name and edits all found with owner change. | |

| 0 | Full text search: user does a full text search of all assets in repository using root name, then opens one of found items. | |

| 32 | Browse test script: user search for test script by name, open it, reviews it, then closes. | |

| 26 | Test Execution: selects “View Test Execution Records”, by name, starts execution, enters pass/fail verdict, reviews results, sets points then saves. | |

| 11 | Defect search: user searches for specific defect by number, user reviews the defect (pause), then closes. | |

| Tester | 8 | Defect create: user creates defect by: opening the Create Defect page, enters data for a new defect, and then saves the defect. |

| 5 | Defect modify: user searches for specific defect by number, modifies it then saves it. | |

| 14 | Defect search: user searches for specific defect by number, user reviews the defect (pause), then closes. | |

| 6 | Edit test case: user searches Test Case by name, the test case is then opened in the editor, then a test script is added to the test case (user clicks next a few times (server size paging feature) before selecting test script), The test case is then saved. | |

| 4 | Create test script: user creates test case by: selecting Create Test Script page, enters data for a new test script, and then saves the test script. | |

| 8 | Edit test script: user selects Test Script by name. test script then opened for editing, modified and then saved. | |

| 42 | Test Execution: selects “View Test Execution Records”, by name, starts execution, enters pass/fail verdict, reviews results, sets points then saves. | |

| 7 | Browse test execution record: user browses TERs by: name, then selects the TER and opens the most recent results. | |

| Dashboard Viewer | 100 | View dashboard(with login and logout): user logs in, views dashboard, then logs out. This user provides some login/logout behavior to the workload |

| Baseline Viewer | 34 | Browse test script in baseline: user logs in, views dashboard, then logs out. This user provides some login/logout behavior to the workload |

| 33 | Browse test case execution record in baseline: user logs in, views dashboard, then logs out. This user provides some login/logout behavior to the workload | |

| 33 | Browse test plans and test cases in baseline: user browses assets by: View Test Plans, then configure View Builder for name search; open test plan found, review various sections, then close. Search for test case by name, opens test case found, review various sections, then close. |

Response time comparison

The page performance is measured as mean value (or average) of its response time in the result data. For the majority of the pages under tests, there is little variation between runs, and the mean values are close to median in the sample for the load.Detailed performance results

Average page response time breakdown

NOTE For all charts, each column represents the time for page loading (aka page response time) in milliseconds, for which value smaller is better. Browse Test Plans & Test Cases

Back to Test Cases & workload characterization Browse Test Scripts

Back to Test Cases & workload characterization

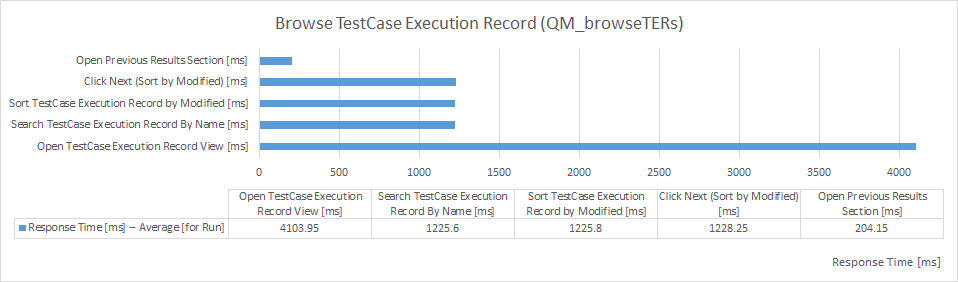

Test Execution Record Browsing

Back to Test Cases & workload characterization

Test Execution Record Browsing

Back to Test Cases & workload characterization

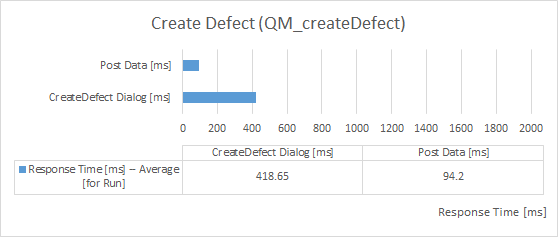

Create Defect

Back to Test Cases & workload characterization

Create Defect

Back to Test Cases & workload characterization

Create Test Plan

Back to Test Cases & workload characterization

Create Test Plan

Back to Test Cases & workload characterization Create Test Case

Back to Test Cases & workload characterization

Create Test Script

Back to Test Cases & workload characterization

Create Test Script

Back to Test Cases & workload characterization

Edit Test Case

Back to Test Cases & workload characterization

Edit Test Case

Back to Test Cases & workload characterization Edit Test Environment

Back to Test Cases & workload characterization

Edit Test Plan

Back to Test Cases & workload characterization

Edit Test Plan

Back to Test Cases & workload characterization

Edit Test Script

Back to Test Cases & workload characterization

Edit Test Script

Back to Test Cases & workload characterization

Test Execution For 4 Steps

Back to Test Cases & workload characterization

Test Execution For 4 Steps

Back to Test Cases & workload characterization

Simple Test Plan Copy

Back to Test Cases & workload characterization

Simple Test Plan Copy

Back to Test Cases & workload characterization

Bulk Edit of Test Cases

Back to Test Cases & workload characterization

Bulk Edit of Test Cases

Back to Test Cases & workload characterization

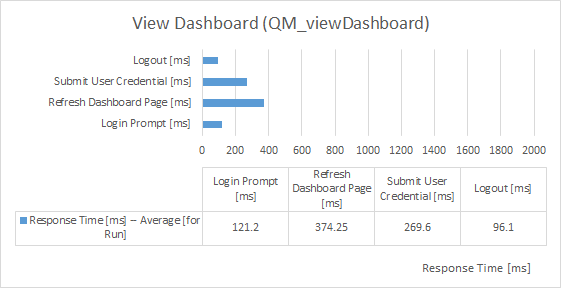

View Dashboard

Back to Test Cases & workload characterization

View Dashboard

Back to Test Cases & workload characterization Browse Test Script In Baseline

Back to Test Cases & workload characterization Browse Test Case Execution Record In Baseline

Back to Test Cases & workload characterization Browse Test Plans And Test Cases In Baseline

Back to Test Cases & workload characterization Defect Search

Back to Test Cases & workload characterization

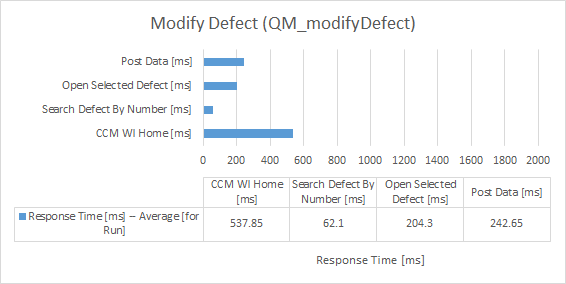

Defect Modify

Back to Test Cases & workload characterization

Defect Modify

Back to Test Cases & workload characterization

Back to Test Cases & workload characterization

RPT network transfer comparison

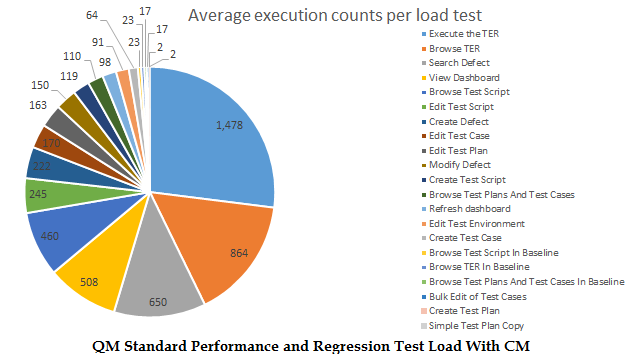

RPT script executions

RPT test scenario breakdown: average page executions during the one-hour staging period  |

Test scenario | Average execution counts per load test |

|---|---|---|

| Execute the TER (Test Execution For 4 Steps) | 1,478 | |

| Browse TER | 864 | |

| Search Defect | 650 | |

| View Dashboard | 508 | |

| Browse Test Script | 460 | |

| Edit Test Script | 245 | |

| Create Defect | 222 | |

| Edit Test Case | 170 | |

| Edit Test Plan | 163 | |

| Modify Defect | 150 | |

| Create Test Script | 119 | |

| Browse Test Plans And Test Cases | 110 | |

| Refresh Dashboard | 98 | |

| Edit Test Environment | 91 | |

| Create Test Case | 64 | |

| Browse Test Script In Baseline | 23 | |

| Browse TER In Baseline | 23 | |

| Browse Test Plans And Test Cases In Baseline | 17 | |

| Full Text Search and Open Test Suite | 0 | |

| Bulk Edit of Test Cases | 17 | |

| Create Test Plan | 2 | |

| Simple Test Plan Copy | 2 |

Resource utilization

| RQM 6.0.6.1-iFix001 1000users performance load large scale with CM - 1 hour staging | |||

| CPU |  |

Disk |  |

| Memory |  |

Network |  |

| RQM 6.0.6.1-iFix001 1000users performance load large scale with CM - 1 hour staging | |||

| CPU |  |

Disk |  |

| Memory |  |

Network |  |

Garbage collection - JVM GC Chart

For JVM parameter please refer to Appendix - Key configuration parameters Verbose garbage collection is enabled to log the GC activities. These GC logs showed very little variation between runs. There is also no discernible difference between versions. Below is one example of the output from the GC log for each application.| RQM 6.0.6.1-iFix001 1000users performance load large scale with CM - ramping and staging | |

| QM |  |

| JTS |  |

Test load based tunings

Run runstats commands post migration

6.0.6.1-iFix001 provides the flexibility of enabling the range partitions on the version table in the QM database, which intents to help increase the scalability in longer term. After migration followed by the partitioning enablement, we would ensure the statistics of the database are up to date, so that the out-of-the-box applications will more likely be optimal. For the series of tests in this report, we ran the followingdb2 reorgchk update statistics on table allFor more runstats commands please refer to the upgrade guide for details.

Apply additional indexes based on the test load

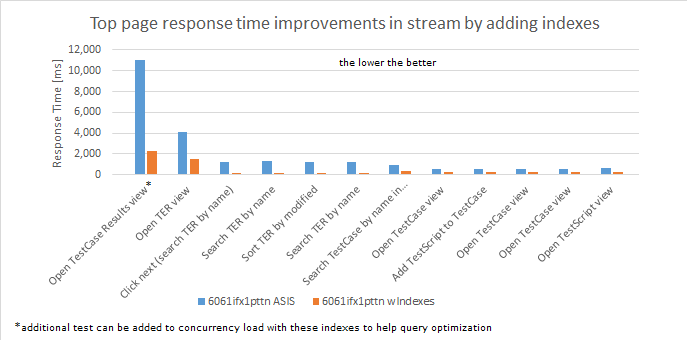

The RQM 6.0.6.1-iFix001 performance benchmark workload contains Test Case Execution-intensive usage scenarios by design, where more than 40% of the virtual users are either browsing or searching a Test Case Execution Record, or executing it in a stream. This resulted in the default Browse Test Case Execution Record (Open TER view) page being consistently loaded with high frequency, same as using the keyword in the Name column to filter the search result and sort by the Last Modified timestamp (Search TER), shown as below .

The former page (Open TER view) took more than 4 seconds under load, and the latter (Search/sort TER) 1.2 seconds for a total of 4 records returned, detailed page response time can be found in this chart. These are some of the most expensive pages in our test load. When we looked at the queries from within the database, we determined to use additional indexes to help improve both of their response times as well as the memory usage. The indexes were created on the QM DB using IBM DB2 v10.5 as below

.

The former page (Open TER view) took more than 4 seconds under load, and the latter (Search/sort TER) 1.2 seconds for a total of 4 records returned, detailed page response time can be found in this chart. These are some of the most expensive pages in our test load. When we looked at the queries from within the database, we determined to use additional indexes to help improve both of their response times as well as the memory usage. The indexes were created on the QM DB using IBM DB2 v10.5 as below

########## to improve default browse XXX pages db2 'CREATE INDEX "EXECUTION"."TER_JZ_PRJ_ARC_MOD_ITM_ST" ON "EXECUTION"."EXECUTION_RECORD" ( "JZ_DISCRIMINATOR" ASC, "PROJECT_AREA_ITEM_ID" ASC, "ARCHIVED" ASC, "MODIFIED" ASC, "ITEM_ID" ASC, "STATE_ID" ASC )' db2 'CREATE INDEX "EXECUTION"."TER_JZ_ARC_MOD_PRJ_ITM_ST" ON "EXECUTION"."EXECUTION_RECORD" ( "JZ_DISCRIMINATOR" ASC, "ARCHIVED" ASC, "MODIFIED" DESC, "PROJECT_AREA_ITEM_ID" ASC, "ITEM_ID" ASC, "STATE_ID" ASC )' ########## the following are function based indices for name search filters for large scale, only to add them to IBM DB2 v10.5 and above ################# ########## to improve searching via applying keywords in the Name filter db2 'CREATE INDEX EXECUTION.NAMECOL_UPPER_TER on EXECUTION.EXECUTION_RECORD(UPPER(NAME_COL))'The same technique can be applied to boost performance for page Browse Test Case Results, which contributes to the largest counts (or 70%) of total artifacts, in a stream. With that, not only can the test load accommodate additional test scenarios that browse/search through the similar pages involving with large set of artifacts at high payload, but also with their page response times dropped dramatically to 60%~80% or 2~8x faster, as seen in the graph below

The sample script to add these indexes can be found in the Attachments section at the bottom of the report.

The sample script to add these indexes can be found in the Attachments section at the bottom of the report.

Appendix - Key configuration parameters

| Product |

Version | Highlights for configurations under test |

|---|---|---|

| IBM HTTP Server for WebSphere Application Server | 8.5.5.9 | IBM HTTP Server functions as a reverse proxy server implemented via Web server plug-in for WebSphere Application Server.

Configuration details can be found from the CLM infocenter.

HTTP server (httpd.conf):

|

| IBM WebSphere Application Server Base | 8.5.5.9 | JVM settings:

-Xgcpolicy:gencon -Xmx24g -Xms24g -Xmn6g -Xss786K -Xcompressedrefs -Xgc:preferredHeapBase=0x100000000 -verbose:gc -Xverbosegclog:gc.log -XX:MaxDirectMemorySize=1GSDK version:

|

| IBM DB2 | AESE v10.5 fixpack 9 | |

| LDAP server | ||

| License server | N/A | |

| RPT workbench | 8.3.0.3 | Defaults |

| RPT agents | 8.3.0.3 | Defaults |

| Network | Shared subnet within test lab |

About the authors:

HongyanHuo is a performance engineer focusing on the performance test & analysis of products in the Collaborative Lifecycle Management family.Related topics: Collaborative Lifecycle Management performance report: Rational Quality Manager 6.0 release, Performance datasheets

Questions and comments:

- What other performance information would you like to see here?

- Do you have performance scenarios to share?

- Do you have scenarios that are not addressed in documentation?

- Where are you having problems in performance?

| I | Attachment | Action | Size |

Date | Who | Comment |

|---|---|---|---|---|---|---|

| |

createIndices6061_singleVtableWithPartition_sample.sh | manage | 3.2 K | 2019-05-30 - 15:47 | UnknownUser |

-

Deployment web

Deployment web

-

Planning and design

Planning and design

-

Installing and upgrading

Installing and upgrading

-

Migrating and evolving

Migrating and evolving

-

Integrating

Integrating

-

Administering

Administering

-

Monitoring

Monitoring

-

Troubleshooting

Troubleshooting

-

To do

To do

-

Under construction

Under construction

-

New

New

-

Updated

Updated

-

Constant change

Constant change

- None - stable page

- Smaller versions of status icons for inline text:

-

Contributions are governed by our Terms of Use. Please read the following disclaimer.

Dashboards and work items are no longer publicly available, so some links may be invalid. We now provide similar information through other means. Learn more here.