This is the final part of a 3-part series of blog posts about Linked Lifecycle Data (continued from Part 1 and Part 2).

Getting more from your data

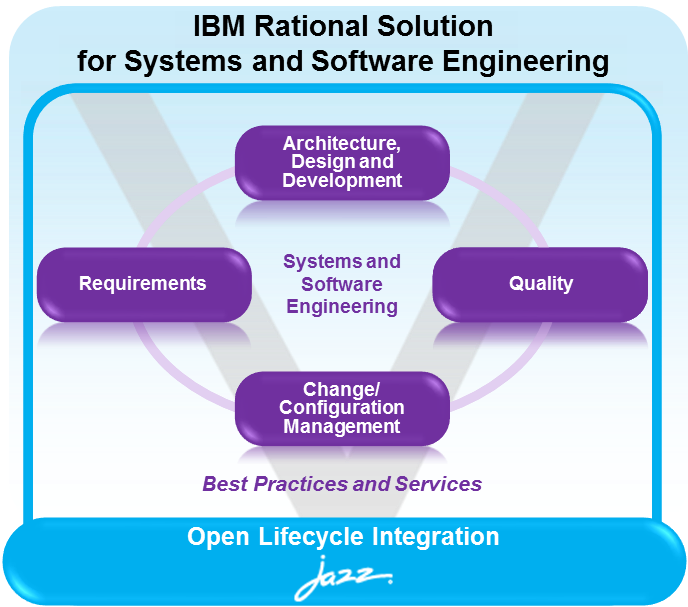

Understanding data, and the relationships between pieces of data, is critical to successfully working on, and managing, systems and software projects.

During the course of systems and software development projects, team members create huge amounts of data: requirements, tests, design models, code, work items and so on. Very few of these artifacts exist in isolation; instead they are related to one another through links with specific and well-understood meanings.

Team members and managers need to be able to quickly find and understand data, and its relationships, to effectively support a given role, whether that be a test practitioner who needs to know which requirements are tested by tests that failed on their last execution, or a manager who needs to know how the number of open defects on a given project is changing from week to week.

Problems arise because traditionally all of this information has been created and managed in silos: multiple different repositories, often geographically dispersed. These repositories are typically provided by different vendors, are deployed on different platforms using different technologies, and expose their data in proprietary formats.

Relational Database approaches

With business systems, traditional relational database approaches have been used to try to work around these issues. Periodic snapshots of operational data can be taken by extracting, transforming, and loading (ETL) data from multiple sources into a data warehouse that supports historical, trend-based reporting. Data marts can be created that support the pre-computation of metrics for faster execution of analytical queries.

With a data warehouse we have a single source of truth, and are able to efficiently run large and complex queries and reports that contain data created in multiple different tools.

Data warehousing brings lots of benefits, but it also has downsides. All of the business logic for mapping data to the correct relational database tables, and for defining the keys that represent links between related tables, is contained in the ETL jobs themselves. If we change the structure of our source data, or load data we haven’t loaded previously, we are forced to change the data warehouse schemas and to edit existing ETL jobs or write new ones.

Perhaps the single biggest problem, though, is that the data in the warehouse is most often stale. ETL jobs are typically run nightly, so the data in reports run from the warehouse can be up to 24 hours old. In many reporting scenarios this is acceptable, but in others (for example, when a project manager needs to create a report showing the work item backlog for a team meeting) reporting against live data in near real-time (5 to 15 minutes old at most) is essential.

The Linked Data way

Linked Data is natively stored in repositories called triple-stores: as the name suggests, stores for RDF triples. Recall that RDF frees us from concerns about data models and lets us assume that we can represent any type of data that our systems and software engineers are creating. This gives us the opportunity to create one or more big triple-stores containing data from all of our systems and software development tools, as well as from tools managing other related data such as CAD drawings, bills of materials (BoMs), financial data and so on. Such triple-stores serve as indices over the original repositories: they can be updated in near real-time, and can act as our web of data for visualization and reporting. They would provide a live cache of important lifecycle data, reflecting the truth of the underlying data sources that own and manage the data.
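To make the idea of a triple-store concrete, here is a minimal in-memory sketch in Python. It is not how a production triple-store works; the `TripleStore` class, the `ex:` identifiers and the property names are all hypothetical, chosen only to illustrate how data from different tools can sit side by side as subject-predicate-object triples.

```python
from typing import Optional, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


class TripleStore:
    """Toy in-memory store for RDF-style triples (illustration only)."""

    def __init__(self) -> None:
        self._triples: Set[Triple] = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self._triples.add((subject, predicate, obj))

    def match(self,
              subject: Optional[str] = None,
              predicate: Optional[str] = None,
              obj: Optional[str] = None) -> Set[Triple]:
        """Return all triples matching the pattern; None acts as a wildcard."""
        return {
            (s, p, o)
            for (s, p, o) in self._triples
            if subject in (None, s)
            and predicate in (None, p)
            and obj in (None, o)
        }


store = TripleStore()

# Data from a hypothetical requirements tool.
store.add("ex:req/42", "rdf:type", "ex:Requirement")
store.add("ex:req/42", "ex:title", "Brake response time under 50 ms")

# Data from a hypothetical test tool, linking to the requirement.
store.add("ex:test/7", "rdf:type", "ex:TestCase")
store.add("ex:test/7", "ex:validates", "ex:req/42")

# A new kind of data needs no schema change: we simply add more triples.
store.add("ex:part/1138", "ex:satisfies", "ex:req/42")

# Everything that links to requirement 42, whichever tool it came from.
links_to_req = store.match(obj="ex:req/42")
```

The point of the sketch is the last two steps: adding the bill-of-materials link required no change to any schema, and a single pattern match returns links originating from two different tools.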

When working against such an index, we can expect to efficiently run the kind of live, large, complex, cross-product, cross-domain queries that we previously could not run at all (or at least not within an acceptable window of time). Without an index, executing the same query against live data would mean making many requests to several repositories that are potentially geographically scattered. A relational data warehouse offers the same advantages in producing complex cross-product queries and reports, but the data will be stale by the amount of time that has elapsed between the last ETL job and report execution.

Experience also shows that most products (data sources) are optimized for operational APIs and very seldom for query and reporting. Just as a data warehouse helps with querying when staleness of data is not an important consideration, bringing the data into a reporting index (triple-store) gives users access to an interface and language (SPARQL) optimized for querying. The data source itself also benefits, as it has to service far fewer non-operational requests.

Real-time insight

It is these types of queries and reports, combining data from multiple domains, that allow us to answer the kind of questions that can give us real (and real-time) insight into our development projects. Linked Lifecycle Data with common vocabularies (such as those defined at OSLC) also allows us to answer questions without caring about the origin of the data. A question such as "which requirements are not covered by tests?" can now be answered without knowing or caring whether the requirements data originates from Rational DOORS or Rational Requirements Composer, or whether the test case data comes from Rational Quality Manager or somewhere else. We have a name for the ability to answer these complex cross-domain questions against live data: “Lifecycle Query”.
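As a rough sketch of such a Lifecycle Query, the Python example below answers "which requirements are not covered by tests?" over a small set of triples. The triples, the `example.com` URLs and the `uncovered_requirements` helper are hypothetical, and the class and property names are modeled on OSLC vocabulary terms purely for illustration. In practice a query like this would more likely be written in SPARQL against the triple-store.

```python
from typing import Iterable, List, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Vocabulary terms shared by all tools (names used here for illustration).
RDF_TYPE = "rdf:type"
REQUIREMENT = "oslc_rm:Requirement"
TEST_CASE = "oslc_qm:TestCase"
VALIDATES = "oslc_qm:validatesRequirement"

# Hypothetical triples indexed from two requirements tools and one test tool.
triples: List[Triple] = [
    ("https://doors.example.com/req/1", RDF_TYPE, REQUIREMENT),
    ("https://doors.example.com/req/2", RDF_TYPE, REQUIREMENT),
    ("https://rrc.example.com/req/9", RDF_TYPE, REQUIREMENT),
    ("https://rqm.example.com/test/5", RDF_TYPE, TEST_CASE),
    ("https://rqm.example.com/test/5", VALIDATES, "https://doors.example.com/req/1"),
]


def uncovered_requirements(data: Iterable[Triple]) -> List[str]:
    """Return requirements that no test case claims to validate."""
    data = list(data)
    # Every resource typed as a requirement, regardless of which tool owns it.
    requirements = {s for s, p, o in data if p == RDF_TYPE and o == REQUIREMENT}
    # Every resource that is the target of a "validates" link.
    covered = {o for s, p, o in data if p == VALIDATES}
    return sorted(requirements - covered)


result = uncovered_requirements(triples)
```

Note that the helper never inspects where a requirement came from: because both requirements tools use the same vocabulary term for the requirement type, one query covers them both.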

Powerful possibilities

By building on an architectural platform that leverages Linked Data and Lifecycle Query, we could provide extremely powerful visualization, navigation, organization and query functions that help systems and software engineers better understand the large, complex sets of data and relationships that they are working with. This understanding and visibility could greatly improve collaboration, make it easier for practitioners to follow processes and implement practices, and make it easier for projects and programs to be effectively managed. Tailored views could be easily created at any level of abstraction to better support the tasks being undertaken by a given user in their particular role. Understanding the impact of change across the engineering lifecycle could become much simpler. Ultimately this could help organizations develop systems and software with improved productivity, increased efficiency, and lower risk, with the added benefit of protecting their investment in the domain tooling they have already chosen.

Benjamin Williams

Senior Product Manager, Rational Systems Platform

bwilliams@uk.ibm.com

I enjoyed your articles!

A few questions for open discussion:

– Is there any interaction between OSLC and the KDM standard from OMG?

– If you have “ontologies” to describe the concepts and relations in each domain (Project Management, Requirements, Design, Configuration Management, Quality…), how do you merge all those ontologies into one?

– When will we be able to bring software code into such a Big Data repository?

Congratulations on this series of articles! I am convinced that the Jazz Team is on track to push forward the art of Systems Engineering!