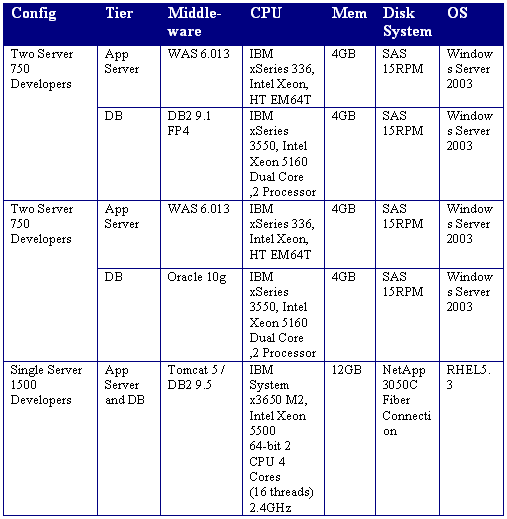

Although we are still running performance tests on Rational Team Concert 2.0 and collecting the results, I’d like to share some of the preliminary data with you. These are the configurations that we’ve tested so far:

For the additional configurations, we want to find a middle point with a Xeon 5500 server, which we expect would support ~1000 Developers. On the upper end, we are going to turn the single-server 1500 Developer configuration into a dual-tier configuration and push toward the 2000-2500 mark. Although I sometimes get the feeling that we could spend the next year testing all the permutations of disks, RAM, OS, and databases, we are going to stick with a small set of reference configurations that should help you plan your deployments.

What is a user?

Not surprisingly, a Developer puts a lot more load on the server than a Contributor. Although Contributors may create work items and browse plans and dashboards, their footprint on the server is small compared to your continuous build farm and your army of Developers delivering changes at all hours of the day. As an example, over 2 weeks our server received 1.48GB of file changes and over 20GB of build results, but only 100MB of work item updates and queries.
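To put those volumes in proportion, here is the arithmetic as a tiny Python sketch. The figures are the two-week totals quoted above; since build results were “over 20GB”, the build share it prints is a lower bound.

```python
# Two weeks of upload volume on our self-hosting server, in GB (figures quoted above).
# "Over 20GB" of build results is a lower bound, so the build share is one too.
uploads_gb = {
    "build results": 20.0,
    "file changes": 1.48,
    "work item updates and queries": 0.1,
}

def shares(volumes):
    """Return each category's fraction of the total volume."""
    total = sum(volumes.values())
    return {name: size / total for name, size in volumes.items()}

for name, frac in shares(uploads_gb).items():
    print(f"{name}: {frac:.1%}")
```

Build results come out at roughly 93% of the upload volume, and work items at about half a percent.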

We’ve been focused on simulating Developers in the lab, and the configurations shown above reflect this. We expect to be able to handle thousands of Contributors in addition to the Developer counts.

Calibrating the test harnesses is hard

In order to simulate thousands of Developers, we had to figure out how a single Developer impacts the server. How often, for example, does a Developer deliver changes, suspend change sets, run a personal build, or create new repository workspaces?

We started with the top 20 most expensive and most frequently called services outlined in the previous testing blog post, and then wrote a set of test harnesses for builds, source control, work items, reports, and planning. After running the harnesses, we took our self-hosting data, for which we know the number of Developers per server, and ran the numbers through a complex set of spreadsheets. The main questions we wanted to answer were:

- Is the calibration reasonable? Are we calling the right operations at the right rate for this number of users?

- Is the average response time flat over our test period?

- How does the average response time compare to our highly tuned self-hosting server?

- Is the server healthy (average CPU no more than 50%, memory usage as expected)?
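The last three questions can be turned into automated pass/fail checks. Here is a minimal Python sketch of one way to do it: a least-squares trend for “flat”, a margin over the self-hosting average, and an average-CPU limit. Only the 50% CPU limit comes from the criteria above; the trend test, the 5% drift tolerance, the 10% baseline margin, the function names and the sample numbers are assumptions for illustration, not a description of the spreadsheets used for these runs.

```python
from statistics import mean

def trend_slope(samples):
    """Least-squares slope of the samples against their index (units per sample)."""
    n = len(samples)
    if n < 2:
        return 0.0
    x_bar = (n - 1) / 2
    y_bar = mean(samples)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(samples))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den

def is_flat(response_ms, tolerance=0.05):
    """True if the fitted trend moves less than `tolerance` of the mean over the run."""
    drift = trend_slope(response_ms) * (len(response_ms) - 1)
    return abs(drift) <= tolerance * mean(response_ms)

def near_baseline(response_ms, baseline_ms, margin=0.10):
    """True if the run's average is within `margin` above the self-hosting average."""
    return mean(response_ms) <= baseline_ms * (1 + margin)

def cpu_healthy(cpu_percent, limit=50.0):
    """True if average CPU over the run stays at or below `limit` percent."""
    return mean(cpu_percent) <= limit

run = [210, 205, 212, 208, 211, 207]   # made-up response times for one service (ms)
print(is_flat(run), near_baseline(run, baseline_ms=200), cpu_healthy([38, 47, 52, 44]))
```

Measuring drift relative to the mean keeps one tolerance usable for both fast and slow services.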

It’s amazing how much we initially overestimated most write operations: in the first source control run, a Developer was delivering once a minute, which is 1000% more than in practice. The problem is that we’ve got hundreds of possible service calls covering all the domains we support in Team Concert, and when you tweak one task, it skews the others.
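The first question on that list comes down to comparing rates. A minimal Python sketch, assuming you can count each operation per day both in a harness run and on a self-hosting server with a known number of Developers: normalize both to a per-Developer rate and flag anything outside an acceptance band. The operation names, the counts and the 0.5x-2x band are made up for illustration.

```python
def per_developer_per_day(ops_per_day, developers):
    return ops_per_day / developers

def calibration_report(harness, self_hosting, low=0.5, high=2.0):
    """Compare a harness run against self-hosting data, operation by operation.

    Both arguments map an operation name to (operations per day, Developer count).
    Returns name -> (ratio of per-Developer rates, whether it falls in [low, high]).
    """
    report = {}
    for op, (ops, devs) in harness.items():
        ref_ops, ref_devs = self_hosting[op]
        ratio = per_developer_per_day(ops, devs) / per_developer_per_day(ref_ops, ref_devs)
        report[op] = (ratio, low <= ratio <= high)
    return report

# Made-up numbers for illustration only.
self_hosting = {"deliver": (400, 100), "create_workspace": (20, 100)}
harness = {"deliver": (60_000, 1500), "create_workspace": (330, 1500)}
for op, (ratio, ok) in calibration_report(harness, self_hosting).items():
    print(f"{op}: {ratio:.1f}x {'ok' if ok else 'recalibrate'}")
```

A harness delivering an order of magnitude too often, like the first source control run, is flagged immediately. Because tweaking one task skews the others, it helps to rerun the whole report after every change rather than checking only the operation you adjusted.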

As an example of the test harness calibration, we had to decide how many builds 1500 users would run. As a starting point we measured the builds being run for 100 Developers on our existing servers – over 300 builds a day. After a lot of discussion, we came up with the following formula to calibrate builds for N Developers:

- Start with an average team size of 7 (a team in our context can be a single deliverable or part of a larger product)

- A team will run 2 continuous builds a day.

- Product teams (25 Developers) will run one integration build a day.

- Continuous builds are lighter-weight and usually don’t load all the source code from scratch. We configured them to build from a stream with a 7-10MB disk footprint.

- Integration builds usually build a lot more. We picked a 300MB stream.

When you plug the variables from above into the formula, for 1500 users we have to simulate 857 continuous builds a day and 40 integration builds. This comes out to 71 continuous and 3 integration builds an hour! It may be a bit high, but once you understand how important builds are to your rhythm, you just can’t get enough of them.
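The calibration formula is simple enough to check in a few lines of Python. This sketch uses the listed values as defaults. Taken literally, those inputs give about 429 continuous and 60 integration builds a day for 1500 Developers. The 857 quoted above matches 4 continuous builds per team per day (1500 / 7 × 4 ≈ 857), and the hourly rates match spreading the daily totals over about 12 working hours (857 / 12 ≈ 71, 40 / 12 ≈ 3). Both readings are inferred from the arithmetic, not stated above, and the 40 integration builds do not follow from 1500 / 25, so every input, including the hypothetical `working_hours`, is a parameter.

```python
def build_calibration(developers, team_size=7, continuous_per_team=2,
                      product_size=25, integration_per_product=1,
                      working_hours=12):
    """Return daily and hourly build counts for a given number of Developers.

    Defaults are the values listed above, except working_hours, which is an
    assumption inferred from the quoted hourly rates.
    """
    teams = developers / team_size
    products = developers / product_size
    continuous_day = teams * continuous_per_team
    integration_day = products * integration_per_product
    return {
        "continuous/day": continuous_day,
        "integration/day": integration_day,
        "continuous/hour": continuous_day / working_hours,
        "integration/hour": integration_day / working_hours,
    }

# Listed inputs taken literally.
literal = build_calibration(1500)
# Four continuous builds per team per day reproduces the 857/day and 71/hour above.
quoted = build_calibration(1500, continuous_per_team=4)
for name in literal:
    print(f"{name}: {literal[name]:.1f} literal, {quoted[name]:.1f} with 4 per team")
```

Keeping the rates as named parameters makes it easy to rerun the calculation for your own team sizes and build habits.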

Needless to say, after a lot of experimenting and spreadsheet analysis we agreed to a standard set of harnesses for build, source control, work items, and collaboration (e.g. feeds, change events).

Data sizes

We can’t talk about scalability without considering the size of the repository. To date, we are using our internal self-hosting repository as the starting point for the tests, but we are also building up much larger repositories by simply letting the test harnesses run. We don’t have a 1500 user database just yet, so we are being conservative on our user counts. As we let the test harnesses run for longer periods, they should help us reach the “1,000,000 work items” and “1,000,000 files” marks pretty soon.

Bloopers

It wasn’t all rosy. We’ve found several good bugs during the testing and have fixed a good number. But the most notable were actually bugs in the tests themselves.

- We forgot to auto-prune build results. After a week of different test runs on the same database, we wondered why the database had grown so large so quickly. It turns out that we had 9,367 build results on the server. In practice, you keep only released builds plus a smaller number of continuous builds. We changed the test harness to turn on the auto-prune feature for build results, which is the default for new build definitions.

- Some of the test harnesses use hyper-active Developers, and we were suspending change sets but never resuming them. Again, after a week some test users had 400-500 suspended change sets each. Although this doesn’t happen much in practice and we didn’t intend to test it, we found that discarding them in the UI took 30 minutes, and many of the operations that update the Pending Changes view slowed down.
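The auto-prune blooper is easy to picture with a minimal retention sketch in Python: keep every released build plus the few most recent unreleased ones, and delete the rest. This is not how the Team Concert build engine implements auto-prune, nor its API; `BuildResult`, `prune` and the `keep_continuous` count are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class BuildResult:
    label: str
    completed: int          # completion time, e.g. a Unix timestamp
    released: bool = False

def prune(results, keep_continuous=10):
    """Keep all released builds plus the `keep_continuous` most recent
    unreleased ones. Returns (kept, deleted)."""
    released = [r for r in results if r.released]
    unreleased = sorted((r for r in results if not r.released),
                        key=lambda r: r.completed, reverse=True)
    return released + unreleased[:keep_continuous], unreleased[keep_continuous:]

# 9,367 results, as in the blooper; every 2000th one is marked released.
results = [BuildResult(f"build-{i}", completed=i, released=(i % 2000 == 0))
           for i in range(9367)]
kept, deleted = prune(results)
print(len(kept), len(deleted))
```

With a policy like this in place, the result count stays bounded no matter how long the harness runs.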

Conclusion

Our test results on these configurations are positive: CPU is in the 40-55% range at peak, and response times are generally flat over the test run. A couple of exceptions still need investigation, but of the 200 services we measured, only 3-4 show small increases in the 1500 user runs. Memory usage is stable, and response times are very close to those of our internal deployment.

I hope this partial data was interesting, and we should have the more “official” guides available when 2.0 ships this summer. We would also like to post our test harnesses over the summer/fall so that others can simulate what we’ve done in their own environments.

Cheers,

Jean-Michel Lemieux

Team Concert PMC

Jazz Source Control Lead

—

Note: Each IBM Rational Team Concert installation and configuration is unique. The data reported in this document are specific to the product software, test configuration, workload and operating environment that were used. Data obtained in other environments or under different conditions may vary significantly.


This is great material. Thanks JM.

I agree, this is good material. Are there similar metrics available for RQM?

Good stuff JM! Was your test made up of LAN only users or did you simulate remote access as well?